Cover Photo by Taylor Vick on Unsplash

Intro

Have you ever found yourself deep in the trenches of Kubernetes networking, only to be surprised by a hidden quirk that challenges your understanding? In this blog post, I unravel the mysteries behind Kubernetes networking in Amazon EKS, shedding light on the intricate journey of a packet from the client through the NLB to an ingress controller pod.

This blog is a story about a change in perception. While debugging a problem the other day, I found myself questioning something I was certain I knew. Just when you're sure you understand how Kubernetes works, you hit another bump in the road that challenges your understanding and sends you off to do some research. This article is the output of that research.

The Setup

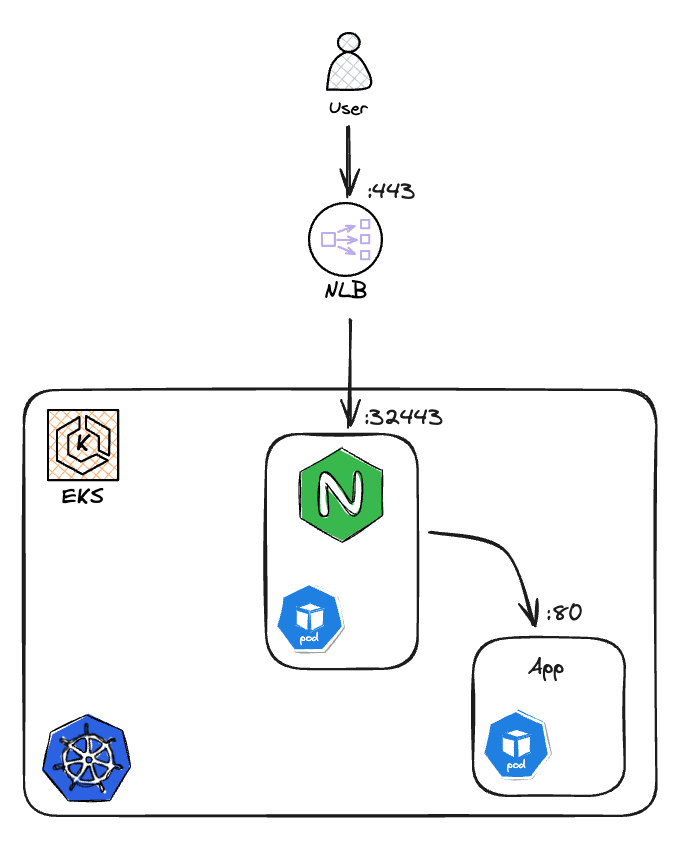

The minimal setup required to reproduce what's presented here: an EKS cluster with the NGINX ingress controller deployed, exposed through an NLB as the entry point to the cluster.

The Problem

I was debugging a Service with an Ingress resource. Traffic was coming in from the NLB to my ingress controller, and I was surprised to see that the ingress controller wasn't listening on the port the NLB was sending to. The AWS console showed those targets as 'healthy', meaning they were responding to health checks. But how is that even possible if there is no process listening on that port?

I gotta say this drove me nuts. I had looked online for similar issues, but nobody mentioned this problem. So I started doing some research, looking for an answer to the question “How does Kubernetes handle Service IP under the hood, on EKS?”

Exploring Kubernetes Networking Magic

Before I walk through the network flow of a packet, here are my assumptions. Kubernetes is a modular platform, so the details depend on the components you run. This post applies to EKS v1.27 with the VPC CNI add-on and kube-proxy in its default iptables mode. I verified it with an NLB; other load balancer types might behave differently.

The next part is low-level, so you should be familiar with iptables. Two blog posts that cover the topic well are "Laymans iptables 101" and "iptables: a comprehensive guide".

When a packet arrives at the node, it goes through the built-in PREROUTING chain of the nat table:

[root@...] # iptables -t nat -nvL PREROUTING

Chain PREROUTING (policy ACCEPT 1987 packets, 119K bytes)

pkts bytes target prot opt in out source destination

756M 5G KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */

This rule sends every incoming packet to KUBE-SERVICES, the "kubernetes service portals" chain. Unlike PREROUTING, this is a custom chain, created and maintained by kube-proxy. It's where the packet is matched against the cluster's Services (nginx, in our case).
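Listings like the one above are easier to read once you know the column layout of `iptables -nvL`. The columns are the packet and byte counters, target, protocol, options, input and output interfaces, source, and destination, followed by any match extensions and the rule's comment. The Python sketch below parses a single rule line under those assumptions. It is an illustration, not a robust parser. It assumes every rule has a target and that large counters use iptables' decimal K/M/G suffixes (adding `-x` to the `iptables` command prints exact counts instead).

```python
import re
from dataclasses import dataclass

# Without -x, iptables abbreviates large counters with decimal suffixes.
_SUFFIX = {"": 1, "K": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}
_COMMENT = re.compile(r"/\* (.*?) \*/")


def parse_counter(text: str) -> int:
    """Convert a counter such as '756M' or '3840' to an approximate int."""
    match = re.fullmatch(r"(\d+)([KMGT]?)", text)
    if match is None:
        raise ValueError(f"not an iptables counter: {text!r}")
    number, suffix = match.groups()
    return int(number) * _SUFFIX[suffix]


@dataclass
class Rule:
    pkts: int
    nbytes: int
    target: str
    prot: str
    opt: str
    in_iface: str
    out_iface: str
    source: str
    destination: str
    comment: str  # text inside /* ... */, if any
    matches: str  # remaining match extensions, e.g. "tcp dpt:32443"


def parse_rule(line: str) -> Rule:
    """Parse one rule line of `iptables -nvL` output (assumes it has a target)."""
    found = _COMMENT.search(line)
    comment = found.group(1) if found else ""
    # Remove the comment so its words don't shift the positional columns.
    fields = _COMMENT.sub(" ", line).split()
    pkts, nbytes, target, prot, opt, in_if, out_if, src, dst = fields[:9]
    return Rule(parse_counter(pkts), parse_counter(nbytes), target, prot, opt,
                in_if, out_if, src, dst, comment, " ".join(fields[9:]))


rule = parse_rule("756M 5G KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 "
                  "/* kubernetes service portals */")
print(rule.target, rule.pkts, rule.comment)
# KUBE-SERVICES 756000000 kubernetes service portals
```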

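KUBE-SERVICES holds one rule per Service port. Each rule matches packets addressed to the Service's cluster IP and port and jumps to that Service's KUBE-SVC-* chain. Nothing is rewritten yet. Packets that arrive on a NodePort, as they do from an NLB with instance targets, don't match these rules. They fall through to the last rule, which sends traffic addressed to the node itself to the KUBE-NODEPORTS chain and from there, eventually, to the same per-Service chain. Here's the rule for our nginx Service, which matches destination 172.20.152.36 on port 32443: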

iptables -t nat -nvL KUBE-SERVICES | grep nginx

0 0 KUBE-SVC-I66WCJWOLI45ORGK tcp -- * * 0.0.0.0/0 172.20.152.36 /* ingress-nginx/ingress-nginx-controller:https-32443 cluster IP */ tcp dpt:32443
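The toy Python model below makes the dispatch step concrete by showing how KUBE-SERVICES picks the per-Service chain for a new packet. The cluster IP, port, and chain name come from the listing above. The node address `10.1.20.10` and the nodePort `30443` are hypothetical placeholders, because the post doesn't show them. Recent kube-proxy versions add an intermediate KUBE-EXT-* chain on the NodePort path, and this sketch collapses it.

```python
from typing import Optional

# From the KUBE-SERVICES listing: (cluster IP, port) -> per-Service chain.
CLUSTER_IP_RULES = {
    ("172.20.152.36", 32443): "KUBE-SVC-I66WCJWOLI45ORGK",
}

# Hypothetical values: the node's own address and the Service's nodePort
# are not shown in the post.
NODE_LOCAL_ADDRESSES = {"10.1.20.10"}
NODEPORT_RULES = {30443: "KUBE-SVC-I66WCJWOLI45ORGK"}


def kube_services(dst_ip: str, dst_port: int) -> Optional[str]:
    """Return the KUBE-SVC-* chain a new packet ends up in, or None.

    Nothing is rewritten here; the packet keeps its original destination.
    """
    # Cluster IP rules come first, one per Service port.
    chain = CLUSTER_IP_RULES.get((dst_ip, dst_port))
    if chain is not None:
        return chain
    # The last rule sends packets addressed to the node itself
    # (addrtype dst-type LOCAL) to KUBE-NODEPORTS; the intermediate
    # KUBE-EXT-* hop of recent kube-proxy versions is collapsed here.
    if dst_ip in NODE_LOCAL_ADDRESSES:
        return NODEPORT_RULES.get(dst_port)
    return None


print(kube_services("172.20.152.36", 32443))  # KUBE-SVC-I66WCJWOLI45ORGK
print(kube_services("10.1.20.10", 30443))     # KUBE-SVC-I66WCJWOLI45ORGK
print(kube_services("10.1.20.10", 22))        # None: no Service matches
```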

At this point the packet has been matched to the nginx Service. But a Service in Kubernetes is just a layer of abstraction: its cluster IP is virtual, and no Pod or network interface actually owns it. The networking layer still has to translate it into the address of a real Pod, one of the Service's Endpoints. This is also done by iptables, through rules that kube-proxy maintains.

kube-proxy creates a KUBE-SVC-* chain for every Service port. The chain's job is to translate the Service into one of its Endpoints (the actual Pods behind it), so it contains one rule per ready endpoint. This is where the kernel performs 'load balancing' between the Pods. The choice is made once per connection: nat rules only see the first packet of a connection, and conntrack applies the same translation to the rest.

iptables -t nat -nvL KUBE-SVC-I66WCJWOLI45ORGK

Chain KUBE-SVC-I66WCJWOLI45ORGK (2 references)

pkts bytes target prot opt in out source destination

0 0 KUBE-SEP-TQZ3GYVANOOYIJMO all -- * * 0.0.0.0/0 0.0.0.0/0 /* ingress-nginx/ingress-nginx-controller:https-32443 -> 10.1.21.61:443 */ statistic mode random probability 0.50000000000

0 0 KUBE-SEP-NEOTPBRCKXF3UWGX all -- * * 0.0.0.0/0 0.0.0.0/0 /* ingress-nginx/ingress-nginx-controller:https-32443 -> 10.1.36.6:443 */
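The 0.5 in the listing isn't arbitrary. With n endpoints, kube-proxy gives the i-th rule (counting from 0) a probability of 1/(n - i), and the last rule has no statistic match at all. Each rule therefore only sees the traffic the earlier rules passed over. The Python sketch below uses exact fractions to check that this cascade gives every endpoint the same overall share of new connections.

```python
from fractions import Fraction
from typing import List


def svc_rule_probabilities(n_endpoints: int) -> List[Fraction]:
    """Probability attached to each rule of a KUBE-SVC-* chain with n endpoints.

    Rule i fires with probability 1/(n - i) *given that it is reached*;
    the last rule gets 1, i.e. it is unconditional.
    """
    return [Fraction(1, n_endpoints - i) for i in range(n_endpoints)]


def endpoint_shares(n_endpoints: int) -> List[Fraction]:
    """Overall chance that each endpoint is picked for a new connection."""
    shares = []
    reach = Fraction(1)  # probability that evaluation gets as far as rule i
    for p in svc_rule_probabilities(n_endpoints):
        shares.append(reach * p)
        reach *= 1 - p
    return shares


print(svc_rule_probabilities(2))  # [Fraction(1, 2), Fraction(1, 1)]
print(endpoint_shares(3))         # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```

This design keeps each rule independent of the others. Adding or removing an endpoint only requires kube-proxy to rewrite the probabilities in one chain.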

We're not done with iptables just yet, but we're getting close. The first rule fires with probability 0.5 and the second is unconditional, so each of the two Pods receives about half of the new connections. Both rules jump to yet another chain, because every endpoint (Pod) has its own KUBE-SEP-* chain. Let's look at the first one:

iptables -t nat -nvL KUBE-SEP-TQZ3GYVANOOYIJMO

Chain KUBE-SEP-TQZ3GYVANOOYIJMO (2 references)

pkts bytes target prot opt in out source destination

0 0 KUBE-MARK-MASQ all -- * * 10.1.21.61 0.0.0.0/0 /* ingress-nginx/ingress-nginx-controller:https-32443 */

64 3840 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* ingress-nginx/ingress-nginx-controller:https-32443 */ tcp to:10.1.21.61:443

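The first rule handles hairpin traffic. If the Pod 10.1.21.61 itself connects to the Service and gets load-balanced back to itself, KUBE-MARK-MASQ marks the packet so that it is masqueraded (SNAT) later, in POSTROUTING. Our incoming traffic matches the second rule, whose target is DNAT (Destination Network Address Translation). This rule rewrites the packet's destination to the Pod's IP and port, 10.1.21.61:443. From here, routing continues as normal. With the VPC CNI, Pod IPs are real VPC addresses, so the packet is routed to the Pod, where NGINX is actually listening on port 443.

This answers the puzzle. The destination is rewritten in PREROUTING, before the kernel decides whether the packet is meant for a local socket, so no process on the node has to listen on the port the NLB targets. Health checks aimed at that port take the same path, which is why the targets show up as healthy.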
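The two KUBE-SEP rules, and the reason no socket is needed on the node, can be modeled in a few lines of Python. This is a simplified sketch. The `masq_mark` flag stands in for the packet mark that KUBE-MARK-MASQ sets, and the client address `203.0.113.7`, node address `10.1.20.10`, and node port `30443` are hypothetical. The key step is `delivered_locally`, which models the routing decision: the kernel only looks for a listening socket when the destination, as rewritten in PREROUTING, is one of the node's own addresses.

```python
from dataclasses import dataclass, replace

# From the KUBE-SEP-TQZ3GYVANOOYIJMO listing.
POD_IP, POD_PORT = "10.1.21.61", 443
# Hypothetical: the node's own address (not shown in the post).
NODE_LOCAL_ADDRESSES = {"10.1.20.10"}


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int
    masq_mark: bool = False  # stands in for the mark KUBE-MARK-MASQ sets


def kube_sep(pkt: Packet) -> Packet:
    """Apply the two rules of KUBE-SEP-TQZ3GYVANOOYIJMO in order."""
    # Rule 1: hairpin traffic (the Pod reaching itself via the Service) is
    # marked so it gets masqueraded (SNAT) later, in POSTROUTING.
    if pkt.src_ip == POD_IP:
        pkt = replace(pkt, masq_mark=True)
    # Rule 2: DNAT rewrites the destination to the Pod's IP and port.
    return replace(pkt, dst_ip=POD_IP, dst_port=POD_PORT)


def delivered_locally(pkt: Packet) -> bool:
    """Routing decision after PREROUTING: only packets whose (possibly
    rewritten) destination is a node address look for a local socket."""
    return pkt.dst_ip in NODE_LOCAL_ADDRESSES


incoming = Packet(src_ip="203.0.113.7", dst_ip="10.1.20.10", dst_port=30443)
print(delivered_locally(incoming))  # True: without DNAT, a socket would be needed
rewritten = kube_sep(incoming)
print(rewritten.dst_ip, rewritten.dst_port, delivered_locally(rewritten))
# 10.1.21.61 443 False
```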

Summary

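When a packet arrives, the nat table's PREROUTING chain sends it to KUBE-SERVICES, which matches it to a Service. The match is made either by cluster IP and port or, for traffic arriving on a NodePort, through KUBE-NODEPORTS. The Service's KUBE-SVC-* chain then picks one endpoint at random, and that endpoint's KUBE-SEP-* chain DNATs the packet to the Pod's IP and port. Because all of this happens before the kernel looks for a listening socket, the node doesn't need a process listening on the port the NLB sends traffic to.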
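The Python sketch below puts the chains together. It traces one new connection to the nginx cluster IP through the nat chains shown in this post, and reports which chains it visited and where it was DNATed. The chain names, addresses, and the 0.5 probability come from the listings above. The NodePort path and the hairpin rule are left out for brevity, and a seeded `random.Random` stands in for the kernel's random choice so runs are reproducible.

```python
import random
from typing import Dict, List, Optional, Tuple

Endpoint = Tuple[str, int]

SERVICE: Endpoint = ("172.20.152.36", 32443)
SVC_CHAIN = "KUBE-SVC-I66WCJWOLI45ORGK"
# KUBE-SVC rules in order: (probability, KUBE-SEP chain); None = unconditional.
SVC_RULES: List[Tuple[Optional[float], str]] = [
    (0.5, "KUBE-SEP-TQZ3GYVANOOYIJMO"),
    (None, "KUBE-SEP-NEOTPBRCKXF3UWGX"),
]
# KUBE-SEP chains and the DNAT destination each one applies.
SEP_DNAT: Dict[str, Endpoint] = {
    "KUBE-SEP-TQZ3GYVANOOYIJMO": ("10.1.21.61", 443),
    "KUBE-SEP-NEOTPBRCKXF3UWGX": ("10.1.36.6", 443),
}


def trace(dst: Endpoint, rng: random.Random) -> Tuple[List[str], Optional[Endpoint]]:
    """Follow a new connection through the nat chains.

    Returns the chains visited and the DNAT destination (None if no Service matched).
    """
    path = ["PREROUTING", "KUBE-SERVICES"]
    if dst != SERVICE:
        return path, None  # no cluster IP rule matched (NodePort path omitted)
    path.append(SVC_CHAIN)
    for probability, sep_chain in SVC_RULES:
        # Mirrors "-m statistic --mode random --probability p".
        if probability is None or rng.random() < probability:
            path.append(sep_chain)
            return path, SEP_DNAT[sep_chain]
    return path, None  # unreachable: the last rule is unconditional


path, dest = trace(("172.20.152.36", 32443), random.Random(7))
print(" -> ".join(path), "=>", dest)
```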

In conclusion, understanding Kubernetes networking in EKS requires delving into iptables manipulations and Kubernetes Service abstractions. I hope this post sheds light on these concepts.