Hello folks,

It has been a long time since we talked about performance in Go.

It's no secret that Go supports a large number of template syntaxes nowadays. However, we haven't seen a single article on how each of them performs. Here we are.

Intro

In this article we will run simple, easy-to-understand mini benchmarks to find out which template parser renders fastest. Keep in mind that speed is not the only criterion: the parsers' features vary, so choose carefully. Depending on your application's needs, you may have to use a slower parser instead.

We will compare the following 8 template engines/parsers:

- Amber
- Blocks
- Django
- Handlebars
- Pug
- Ace
- HTML
- Jet

Each benchmark test consists of a template, a layout, a partial and template data (a map). All responses have the same total size in bytes. The Amber, Ace and Pug parsers minify the template before rendering; therefore, the contents of all the other template files are minified too (no new lines or spaces between HTML blocks).
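For the standard library's `html/template` engine, one way to express the "template + layout + partial + data map" structure is with `{{define}}` and `{{template}}` actions. This is a minimal sketch; the template names (`layout`, `content`, `footer`) and data keys are hypothetical, and the sources are written minified, as described above.

```go
package main

import (
	"bytes"
	"fmt"
	"html/template"
)

// Hypothetical, minified sources: no new lines or spaces between HTML blocks.
const (
	layoutSrc  = `<html><head><title>{{.Title}}</title></head><body>{{template "content" .}}{{template "footer" .}}</body></html>`
	partialSrc = `{{define "footer"}}<footer>{{.Footer}}</footer>{{end}}`
	contentSrc = `{{define "content"}}<h1>{{.Title}}</h1><p>{{.Body}}</p>{{end}}`
)

// newPage parses the layout and associates the partial and the page
// template with it, so executing the layout renders all three.
// Sources that contain only {{define}} blocks do not replace the layout body.
func newPage() (*template.Template, error) {
	tmpl, err := template.New("layout").Parse(layoutSrc)
	if err != nil {
		return nil, err
	}
	if _, err := tmpl.Parse(partialSrc); err != nil {
		return nil, err
	}
	if _, err := tmpl.Parse(contentSrc); err != nil {
		return nil, err
	}
	return tmpl, nil
}

// render executes the composed template with the given data map.
func render(tmpl *template.Template, data map[string]interface{}) ([]byte, error) {
	var buf bytes.Buffer
	if err := tmpl.Execute(&buf, data); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

func main() {
	tmpl, err := newPage()
	if err != nil {
		panic(err)
	}
	out, err := render(tmpl, map[string]interface{}{
		"Title":  "Hello",
		"Body":   "World",
		"Footer": "Footer",
	})
	if err != nil {
		panic(err)
	}
	fmt.Printf("%d bytes: %s\n", len(out), out)
}
```

Printing the byte count is a simple way to confirm that every engine produces a response of the same size for the same data.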

As always, my benchmark code is available to everyone, but I will not tire you by showing the code for each engine in the article; instead, you can browse it here.

Benchmarks

| Spec | Value |
|---|---|
| Processor | Intel(R) Core(TM) i7-8750H CPU @ 2.20GHz |
| RAM | 15.85 GB |
| OS | Microsoft Windows 10 Pro |
| Bombardier | v1.2.4 |
| Go | go1.15.2 |

Terminology

Name is the name of the template engine.

Reqs/sec is the average number of requests processed per second (the higher the better).

Latency is the time from when the client sends a request until the response gets back to that client (the smaller the better).

Throughput is the rate at which data is transferred (the higher the better); it depends on the response length (body + headers).

Time To Complete is the total time (in seconds) the test took to complete (the smaller the better).

Results

📖 Fires 1,000,000 requests with 125 concurrent clients and receives an HTML response. The server handler sets some template data and renders a template file that consists of a layout and a partial footer.

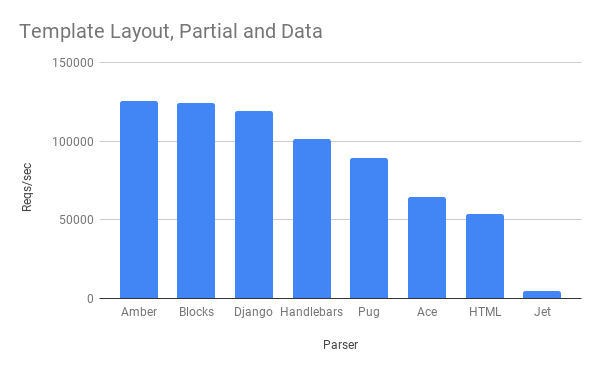

| Name | Language | Reqs/sec | Latency | Throughput | Time To Complete |
|---|---|---|---|---|---|
| Amber | Go | 125698 | 0.99ms | 44.67MB/s | 7.96s |
| Blocks | Go | 123974 | 1.01ms | 43.99MB/s | 8.07s |
| Django | Go | 118831 | 1.05ms | 42.17MB/s | 8.41s |
| Handlebars | Go | 101214 | 1.23ms | 35.91MB/s | 9.88s |
| Pug | Go | 89002 | 1.40ms | 31.81MB/s | 11.24s |
| Ace | Go | 64782 | 1.93ms | 22.98MB/s | 15.44s |
| HTML | Go | 53918 | 2.32ms | 19.13MB/s | 18.55s |
| Jet | Go | 4829 | 25.88ms | 1.71MB/s | 207.07s |

And for those who like graphs, here you are:

As the results show, Amber is the fastest and Jet is the slowest: Amber handled about 26 times as many requests per second as Jet (125698 vs 4829). Keep in mind that for Jet we cache the templates ourselves before the server starts; its default behavior is to cache them at runtime instead, so with its defaults it could be even slower.
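The difference between caching templates at runtime and caching them before the server starts can be illustrated generically. The following is a minimal sketch with `html/template` and a hypothetical `templateCache` type; it is not Jet's API, only an illustration of the two strategies.

```go
package main

import (
	"fmt"
	"html/template"
	"sync"
)

// sources maps hypothetical template names to their contents.
var sources = map[string]string{
	"index.html": `<h1>{{.}}</h1>`,
	"about.html": `<p>{{.}}</p>`,
}

// templateCache parses templates lazily on first use; Preload parses all of
// them ahead of time so that no request pays the parsing cost.
type templateCache struct {
	mu     sync.RWMutex
	parsed map[string]*template.Template
}

func newTemplateCache() *templateCache {
	return &templateCache{parsed: make(map[string]*template.Template)}
}

// Get returns a cached template, parsing and storing it on a cache miss.
func (c *templateCache) Get(name string) (*template.Template, error) {
	c.mu.RLock()
	t, ok := c.parsed[name]
	c.mu.RUnlock()
	if ok {
		return t, nil
	}
	src, ok := sources[name]
	if !ok {
		return nil, fmt.Errorf("template %q not found", name)
	}
	c.mu.Lock()
	defer c.mu.Unlock()
	// Another goroutine may have parsed it while we waited for the lock.
	if cached, ok := c.parsed[name]; ok {
		return cached, nil
	}
	t, err := template.New(name).Parse(src)
	if err != nil {
		return nil, err
	}
	c.parsed[name] = t
	return t, nil
}

// Preload parses every known template, e.g. before the server starts.
func (c *templateCache) Preload() error {
	for name := range sources {
		if _, err := c.Get(name); err != nil {
			return err
		}
	}
	return nil
}

func main() {
	cache := newTemplateCache()
	if err := cache.Preload(); err != nil { // warm the cache at start-up
		panic(err)
	}
	fmt.Println(len(cache.parsed), "templates cached")
}
```

With lazy caching, the first request for each template pays the parsing cost (and takes a write lock); calling `Preload` moves that cost to start-up, which is the kind of setup described above for Jet.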

If you want to run the benchmarks yourself, follow the steps below:

- Install the Go Programming Language

- Download and unzip the test files, or fetch them with git clone

- Open a terminal inside the test files directory and execute the commands below:

```sh
go get -u github.com/kataras/server-benchmarks
go get -u github.com/codesenberg/bombardier
server-benchmarks --wait-run=3s -o ./results
```

Have fun 🥳 and stay strong in these difficult and anxious times we are living through as a society 💪

Yours,

Gerasimos Maropoulos, author of the Iris Web Framework