Introduction

In this article, we will discuss designing a one-to-one heartfelt relationship between a node and a Kubernetes object (pod/deployment). At its core, this article is about intentional pod scheduling in Kubernetes, with a focus on Node affinity. In this piece of art, we will explore the definition of Node affinity, the reason we instruct certain pods to be deployed on certain nodes, why this form of pod scheduling is preferred, and finally how to set up Node affinity for a set of nodes and some pods. To follow along, it is important that you understand the basic concepts of Kubernetes, that you have a cluster up and running, and that you genuinely have your heart open to a love story.

A Love Story

In this love story, we have two friends called Claire and Fiona. Claire and Fiona are looking to get married, but they have specific requirements for a suitor. They both insist on marrying tall men, and Fiona would additionally like a tall man who has a beard (although she is not adamant about it). Both friends would rather not get married at all if they don't find tall men. However, given a surplus of tall men, Fiona would pick a man with a beard. Our task in this article is to design "Men" for both Claire and Fiona.

Understanding Node affinity

To understand Node affinity, assume the two friends are pods and the Suitors (Men) are nodes. Some pods have Hard requirements (tall men). These requirements are compulsory, and if no node satisfies them the pod is left unscheduled. Some pods also have Soft requirements (bearded men). These requirements are optional: they only help the scheduler choose the most suitable node among those that already meet the Hard requirements.

We deliberately place such requirements on pods to ensure that those pods are deployed on certain nodes. For example, pods that require a lot of compute/memory resources should be deployed on nodes with heavy compute/memory resources. The primary reason for such a design is deliberate pod scheduling: we constrain the choices the kube-scheduler would otherwise make on its own. We mark the properties of these nodes by using labels, and then we design the pods to look for these labels. Let's move on to designing nodes that will be suitable for both Claire and Fiona.

Finding a Node for Claire

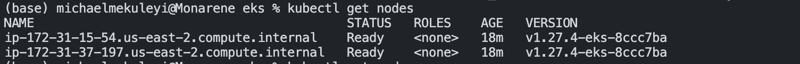

For this article, it is assumed that we have two fresh nodes running. On the terminal, run the following command to view the nodes:

michael@monarene:~$ kubectl get nodes

Notice that we have two distinct nodes: one whose name has a first segment ending in 54, and another whose name has a first segment ending in 197.

To deploy the claire pod, save the following configuration into claire.yaml.

    apiVersion: v1
    kind: Pod
    metadata:
      name: claire
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: height
                operator: In
                values:
                - tall
      containers:
      - name: with-node-affinity
        image: k8s.gcr.io/pause:2.0

Take a keen look at spec.affinity.nodeAffinity and you will find a field called requiredDuringSchedulingIgnoredDuringExecution. requiredDuringScheduling means that for the pod to be assigned to a node, the node's labels must satisfy the expressions declared on the pod. In this case, the requirement is that one of the labels on the node must be height=tall. IgnoredDuringExecution means that once the pod has been scheduled, further changes to the node's labels are ignored; in simpler terms, even if the label on the node changes to height=short, the pod stays on that node.
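
The matching rule described above can be sketched in a few lines of Python. This is only a toy model for building intuition, not the kube-scheduler's actual code, and the function names expression_matches and node_matches are made up for this illustration. It covers only matchExpressions with the In, NotIn, Exists and DoesNotExist operators, and it assumes the documented semantics that every expression inside one term must hold, while it is enough for any one of the nodeSelectorTerms to match.

```python
def expression_matches(labels, expr):
    """Check one matchExpressions entry against a node's labels (toy model)."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return labels.get(key) in values
    if op == "NotIn":
        # A node without the label also satisfies NotIn.
        return labels.get(key) not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not covered by this sketch: {op}")


def node_matches(labels, node_selector_terms):
    """Terms are ORed together; expressions inside a term are ANDed."""
    return any(
        all(expression_matches(labels, e) for e in term["matchExpressions"])
        for term in node_selector_terms
    )


# Claire's hard requirement, copied from claire.yaml.
claire_terms = [
    {"matchExpressions": [{"key": "height", "operator": "In", "values": ["tall"]}]}
]

print(node_matches({"height": "tall"}, claire_terms))   # True
print(node_matches({"height": "short"}, claire_terms))  # False
print(node_matches({}, claire_terms))                   # False
```

Note that this check only models the scheduling moment. Because of IgnoredDuringExecution, nothing re-runs it for a pod that is already placed.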

To start the deployment, run the following command on the terminal.

michael@monarene:~$ kubectl apply -f claire.yaml

After a few seconds, check the state of the pod by running the following command:

michael@monarene:~$ kubectl get pods

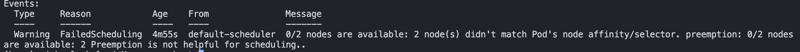

We see that claire is stuck in the Pending phase. This is because none of the nodes have the label height=tall. Since this is a Hard requirement for scheduling, claire will not be scheduled on any of the nodes.
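
As a rough mental model of why this happens (not the scheduler's real implementation), imagine filtering the cluster's nodes by Claire's Hard requirement. The node names below are the ones used in this article's commands. The empty label sets are a simplification, since real nodes also carry built-in labels such as kubernetes.io/hostname, and the helper candidates is hypothetical.

```python
# Custom labels on our two fresh nodes (built-in labels omitted for brevity).
nodes = {
    "ip-172-31-15-54.us-east-2.compute.internal": {},
    "ip-172-31-37-197.us-east-2.compute.internal": {},
}


def candidates(nodes, key, allowed_values):
    """Return the names of nodes whose label `key` has one of `allowed_values`."""
    return [name for name, labels in nodes.items()
            if labels.get(key) in allowed_values]


# No node is tall yet, so there is nowhere to place claire: she stays Pending.
print(candidates(nodes, "height", ["tall"]))  # []

# Adding the label to one node (the effect of `kubectl label nodes`) changes that.
nodes["ip-172-31-15-54.us-east-2.compute.internal"]["height"] = "tall"
print(candidates(nodes, "height", ["tall"]))
# ['ip-172-31-15-54.us-east-2.compute.internal']
```

An empty candidate list corresponds to a pod that cannot be placed anywhere, which is exactly the Pending state we observe.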

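To further verify that this is the case, run the following command and look at the Events section at the bottom of the output, which should report that scheduling failed: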

michael@monarene:~$ kubectl describe pods claire

To fix this, assign the label height=tall to the node that ends with 54. We do this by running the following command:

michael@monarene:~$ kubectl label nodes ip-172-31-15-54.us-east-2.compute.internal height=tall

Now let us check the status of the pod by running the following command:

michael@monarene:~$ kubectl get pods

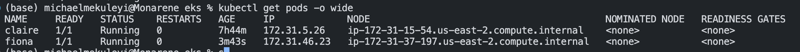

We can now see that the pod is running as expected, and claire has been paired with a Suitor 🥰. To confirm that claire was scheduled to the node ending with 54, run the following command and check the Node field in the output:

michael@monarene:~$ kubectl describe pods claire

Finding a Node for Fiona

To set up the pod definition for Fiona, save the following configuration into fiona.yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: fiona
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: height
                operator: In
                values:
                - tall
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: beard
                operator: In
                values:
                - present
      containers:
      - name: with-node-affinity
        image: k8s.gcr.io/pause:2.0

As per the configuration, you can see that Fiona requires that her man be tall, but amongst tall men, she would also like a bearded man. This is expressed in the preferredDuringSchedulingIgnoredDuringExecution field, and it is what we referred to as a Soft requirement. To illustrate this, we will give the node that ends with 197 the labels height=tall and beard=present. We can do this by running the following command:
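
To see how the Hard and Soft requirements combine, here is a small Python sketch. It is a simplified model rather than the kube-scheduler's real scoring, which also weighs other factors such as resource usage. The helper names is_feasible, preference_score and pick_node are invented for this example, and node-54 and node-197 are shorthand for the two nodes in this article. Nodes that fail the Hard requirement are dropped; among the rest, each node earns the weight of every preference it satisfies, and the highest score wins.

```python
def is_feasible(labels):
    """Hard requirement from fiona.yaml: height In [tall]."""
    return labels.get("height") in ["tall"]


# Soft requirements as (weight, key, allowed values), mirroring fiona.yaml.
PREFERENCES = [(1, "beard", ["present"])]


def preference_score(labels):
    """Sum the weights of all preferences this node satisfies."""
    return sum(w for w, key, vals in PREFERENCES if labels.get(key) in vals)


def pick_node(nodes):
    """Return the best feasible node name, or None if the pod would stay Pending."""
    feasible = {name: labels for name, labels in nodes.items() if is_feasible(labels)}
    if not feasible:
        return None
    return max(feasible, key=lambda name: preference_score(feasible[name]))


nodes = {
    "node-54": {"height": "tall"},
    "node-197": {"height": "tall", "beard": "present"},
}
print(pick_node(nodes))                               # node-197
print(pick_node({"node-54": {"height": "tall"}}))     # node-54 (the soft rule is optional)
print(pick_node({"node-54": {"beard": "present"}}))   # None (the hard rule is not met)
```

In a real manifest each weight must be between 1 and 100, and a larger weight makes that preference count for more when several preferences compete.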

michael@monarene:~$ kubectl label nodes ip-172-31-37-197.us-east-2.compute.internal height=tall && kubectl label nodes ip-172-31-37-197.us-east-2.compute.internal beard=present

Next, we deploy fiona and see which node she moves to. We do this by running the following command:

michael@monarene:~$ kubectl apply -f fiona.yaml

Next, we check that she is running as expected, using the same kubectl get pods command as before.

We can see that Fiona is running and has been successfully scheduled to a node. To check which node she has been scheduled to, we run the following command:

michael@monarene:~$ kubectl get pods -o wide

We see that Fiona has been scheduled on the appropriate node, the Suitor that is tall and also has a beard. This is how we schedule pods to suitable nodes.

Conclusion

In this article, we used the illustration of a courting process to describe intentional pod scheduling using Node affinity. We labeled two nodes appropriately, deployed our pods to their respective suitors (nodes), and showed how to make pods look for nodes by their labels. Feel free to like, share, and comment on this article. Don't forget to subscribe to receive notifications when I post new articles. Thank you!