I made the same web app in Gatsby and Next.js and found Gatsby performed better

With the ongoing Covid-19 pandemic and social distancing measures, many events have been forced to move online. I’m a software engineer at Antler, which runs a global startup generator program and usually holds several in-person Demo Day events a year, each showcasing around a dozen new startups, and we faced the same situation.

We wanted to deliver a solid online experience that puts the focus on the content: our portfolio companies’ pitches. Because of the wider audience of this event, and because it may be a user’s first exposure to Antler’s online presence, we needed to put our best foot forward and make sure it loaded fast. This was a great case for a high-performance progressive web app (PWA).

TL;DR

Displaying a skeleton while the data loaded made the app seem faster than just a blank page while the server loaded the data.

Gatsby’s static output was only slightly faster than Next.js, but Gatsby’s plugins and documentation made for a better developer experience.

Server-side rendering or static site generation?

For some background: all of our web products are built with React and the Material-UI library, so we stuck with that stack to keep development fast and ensure the new code is compatible with our other projects. The key difference is that all our other React apps were bootstrapped with create-react-app and are rendered entirely on the client side (CSR), so users are faced with a blank white screen while the initial JavaScript is parsed and executed.

Because we wanted top-notch performance, we were looking into leveraging either server-side rendering (SSR) or static site generation (SSG) to improve this initial load experience.

Our data is sourced from Cloud Firestore via Algolia, which gives us more granular, field-level control over public data access with restricted API keys. This also improves query performance: anecdotally, Algolia queries are faster, and the Firestore JavaScript SDK is 86 KB gzipped compared to 7.5 KB for Algolia’s.
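
One way to get that field-level control is to copy only an allowlist of public fields from each Firestore document into the Algolia index, so a search-only key can never expose anything else. The sketch below assumes that approach; the `PortfolioDoc` shape, the field names, and `toPublicRecord` are all hypothetical. The only Algolia-specific detail is `objectID`, the identifier Algolia uses for each record, which here reuses the Firestore document ID.

```typescript
// Hypothetical shape of a Firestore document; real field names will differ.
interface PortfolioDoc {
  id: string;
  companyName: string;
  pitchVideoUrl: string;
  founderEmail: string; // private: must never reach the public index
  internalNotes: string; // private
}

// Allowlist of fields that may be exposed through a search-only API key.
const PUBLIC_FIELDS = ["companyName", "pitchVideoUrl"] as const;
type PublicField = (typeof PUBLIC_FIELDS)[number];

// Algolia identifies every record by `objectID`; reuse the Firestore doc ID.
type PublicRecord = { objectID: string } & Pick<PortfolioDoc, PublicField>;

// Copies only allowlisted fields, so private data never enters the index.
function toPublicRecord(doc: PortfolioDoc): PublicRecord {
  const record = { objectID: doc.id } as PublicRecord;
  for (const field of PUBLIC_FIELDS) {
    record[field] = doc[field];
  }
  return record;
}
```

An allowlist rather than a blocklist is the safer default here: a new private field added to Firestore later stays out of the index unless someone explicitly opts it in.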

We also wanted the data we serve to be as fresh as possible in case any errors are published live. The standard SSG practice is to run these data queries at build time, but we expected frequent writes to our database from both our admin-facing interface, firetable, and our web portal for founders, which would cause multiple builds to run concurrently. Our database structure could also let irrelevant updates trigger new builds, making our CI/CD pipeline incredibly inefficient, so we needed the data to be queried whenever a user requests the page. Unfortunately, this meant it could not be a “pure” SSG web app.
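
To make the "irrelevant updates" problem concrete: a build-time setup would need a trigger that decides, on every database write, whether the page actually changed. A hypothetical version of that check is sketched below; `RENDERED_FIELDS` and `shouldRebuild` are illustrative names, not part of any real pipeline. Even with such a filter, several relevant writes in quick succession would still queue overlapping builds.

```typescript
// Hypothetical: the fields that are actually rendered on the Demo Day page.
const RENDERED_FIELDS = ["companyName", "tagline", "pitchVideoUrl"];

type Doc = Record<string, unknown>;

// Returns true only when a write touches a field that affects the output.
// A shallow === comparison is assumed to be enough for these fields.
function shouldRebuild(before: Doc | undefined, after: Doc | undefined): boolean {
  // Creations and deletions always change the page.
  if (before === undefined || after === undefined) return true;
  return RENDERED_FIELDS.some((field) => before[field] !== after[field]);
}
```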

Initially, the app was built with Gatsby, since we were already maintaining landing pages built in Gatsby and one of them was already bootstrapped with Material-UI. This first version displayed a skeleton while the data loaded and achieved a first contentful paint of around 1 second. 🎉
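
The skeleton-first pattern comes down to rendering from an explicit loading state, so the very first render already has something to paint. A framework-free sketch, assuming a hypothetical `Company` type; in the real app this would be a React component rendering Material-UI skeleton placeholders, but returning plain strings keeps the example self-contained.

```typescript
interface Company {
  name: string;
  tagline: string;
}

// Every render starts in "loading", so the first paint is never blank.
type SectionState =
  | { status: "loading" }
  | { status: "loaded"; companies: Company[] }
  | { status: "error"; message: string };

// Returns a text description of what the section would render.
function renderSection(state: SectionState, skeletonCount = 3): string[] {
  switch (state.status) {
    case "loading":
      // Fixed-size placeholders that reserve space for the real cards.
      return Array.from({ length: skeletonCount }, () => "[skeleton card]");
    case "loaded":
      return state.companies.map((c) => `${c.name}: ${c.tagline}`);
    case "error":
      return [`Could not load companies: ${state.message}`];
  }
}
```

Modelling the states as a discriminated union means the compiler checks that every state, including the error case, has something to render.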

But since the data was being loaded on the client side:

Users had to wait for four network requests to Algolia to finish after the initial page load before they could see the actual content.

There’s more work for the browser’s JavaScript engine as React needs to switch out the skeletons for the content. That’s extra DOM manipulation!

Search engine crawlers might not be able to load the content and they generally prefer static sites.
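
On the client, the cost of those four requests can at least be bounded by the slowest one rather than their sum by issuing them in parallel. A sketch with `Promise.all`, where `runQuery` is a hypothetical stand-in for the real Algolia call and the four section names are illustrative; the article doesn't say how the original app issued its requests.

```typescript
type Hit = { objectID: string };

// Hypothetical query function; in the app this would call Algolia.
type RunQuery = (indexName: string) => Promise<Hit[]>;

// Hypothetical names for the four sections queried on page load.
const SECTIONS = ["companies", "founders", "sectors", "schedule"] as const;
type Section = (typeof SECTIONS)[number];

// Fires all four requests at once. Resolves when the slowest one finishes
// and rejects as soon as any single request fails.
async function loadAllSections(runQuery: RunQuery): Promise<Record<Section, Hit[]>> {
  const results = await Promise.all(SECTIONS.map((name) => runQuery(name)));
  const bySection = {} as Record<Section, Hit[]>;
  SECTIONS.forEach((name, i) => {
    bySection[name] = results[i];
  });
  return bySection;
}
```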

So over a public holiday long weekend, I decided to experiment with a server-rendered version with Next.js. Lucky for me, Material-UI already had an example project for Next.js so I didn’t have to learn the framework from the start — I just had to look through specific parts of the tutorial and documentation. Converting the app and querying the data on the server side on each request solved all three points I raised above and the end result was…
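
For reference, per-request data fetching in Next.js 9.3+ goes through `getServerSideProps`, which a page file exports and Next.js runs on the server for every request. A minimal sketch; `fetchCompanies` is a hypothetical stand-in for the Algolia queries, and in a real page the `GetServerSideProps` type from `next` and the default-exported React component would live in the same file.

```typescript
interface Company {
  name: string;
  tagline: string;
}

// Hypothetical data source standing in for the Algolia queries.
async function fetchCompanies(): Promise<Company[]> {
  return [{ name: "Example Co", tagline: "Placeholder data" }];
}

// Next.js calls this on the server for every request, before any HTML is
// sent, so the response (and therefore TTFB) waits on fetchCompanies().
export async function getServerSideProps(): Promise<{ props: { companies: Company[] } }> {
  const companies = await fetchCompanies();
  return { props: { companies } };
}
```

This is where the extra time to first byte comes from: the server cannot send any HTML until the data fetch has completed.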

Roughly triple the time for the first contentful paint.

Plus, the Lighthouse speed index quadrupled and the time to first byte rose from 10–20 ms to 2.56 seconds.

While it is worth noting that the Next.js version was hosted on a different service (ZEIT Now rather than Firebase Hosting, which may also have contributed to the higher TTFB), it was clear that moving the data fetching step to the server produced a seemingly slower result: even if the content loaded at around the same time, the user saw only a blank white page in the meantime.

This highlights an important lesson in front-end development: give your users visual feedback. A study found that apps that used skeleton screens are perceived to load faster.

The results also go against a sentiment you might have noticed if you’ve been reading articles about web development for the past few years:

The client side isn’t evil.

SSR is not the catch-all solution to performance issues.

Gatsby vs Next.js: static site generation performance

While the two frameworks have been known primarily for static site generation and server-side rendering respectively, Next.js 9.3 overhauled its SSG implementation to rival Gatsby.

At the time of writing, this update was just over a month old and was still featured on the main Next.js landing page and there weren’t many — if any — comparisons of the frameworks’ SSG implementations. So I decided to run an experiment myself.

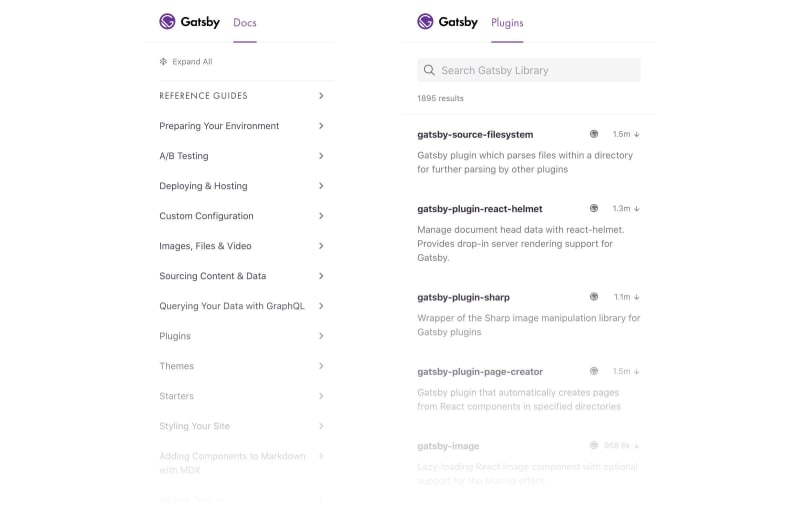

I reverted the Gatsby version back to client-side data fetching and made sure both versions had the exact same feature set: I had to disable the SEO features, favicon generation, and PWA manifest, which were handled by Gatsby plugins. To compare just the JavaScript bundles produced by the frameworks, neither version loaded images or other content from external sources, and both were deployed to Firebase Hosting. For reference, the two versions were built on Gatsby 2.20.9 and Next.js 9.3.4.

I ran Lighthouse six times for each version on my local machine.
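
Lighthouse scores vary from run to run, and Lighthouse's own documentation on variability suggests running it several times and taking the median. A small helper for that, assuming the metric values have already been collected (for example from Lighthouse's JSON reports); it does not reproduce the measurements from this experiment.

```typescript
// Median of a list of metric values (e.g. FCP in ms from six Lighthouse runs).
function median(values: number[]): number {
  if (values.length === 0) throw new Error("median of empty list");
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  // Even count (like six runs): average the two middle values.
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

// Compares two sets of runs for one metric; diff > 0 means B's median is higher.
function compareRuns(a: number[], b: number[]): { medianA: number; medianB: number; diff: number } {
  const medianA = median(a);
  const medianB = median(b);
  return { medianA, medianB, diff: medianB - medianA };
}
```

The median is used rather than the mean so that a single outlier run (a background task, a slow network moment) doesn't skew the comparison.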

The results very slightly favour Gatsby:

The Next.js version was just slightly behind Gatsby in the overall performance score, first contentful paint, and speed index. It also registered a higher max potential first input delay.

Digging into the Chrome DevTools Network panel for an answer, I found that the Next.js version split the JavaScript payload into three more chunks (ignoring the generated manifest files) but produced a 20 KB smaller compressed payload. Could these extra requests have outweighed the gains from the smaller bundle so much that they hurt performance?
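
A back-of-envelope model helps frame that question. Assuming network conditions similar to Lighthouse's simulated mobile throttling (about 150 ms round-trip time and 1.6 Mbps throughput; treat both as assumptions), 20 KB takes roughly 100 ms to download, while three extra requests cost anywhere from nothing (fully multiplexed over HTTP/2) to one round trip each (fully sequential). The model ignores TCP slow start, parsing, and execution, so it only brackets the network cost.

```typescript
interface NetworkAssumptions {
  rttMs: number; // round-trip time per request
  throughputKbps: number; // kilobits per second, with 1 kilobit = 1024 bits
}

// Time to move `bytes` over the link, ignoring latency and TCP slow start.
function transferMs(bytes: number, net: NetworkAssumptions): number {
  const bits = bytes * 8;
  return (bits / (net.throughputKbps * 1024)) * 1000;
}

// Net effect of `extraRequests` more requests but `savedBytes` fewer bytes.
// Positive result: the extra requests cost more than the bytes saved.
function netCostMs(
  extraRequests: number,
  savedBytes: number,
  net: NetworkAssumptions,
  sequential: boolean,
): number {
  // Fully multiplexed requests are modelled as adding no extra latency.
  const requestOverhead = sequential ? extraRequests * net.rttMs : 0;
  return requestOverhead - transferMs(savedBytes, net);
}

// Assumed values, roughly matching Lighthouse's simulated mobile throttling.
const assumedSlow4G: NetworkAssumptions = { rttMs: 150, throughputKbps: 1638.4 };
```

Under these assumptions the answer flips depending on whether the chunks load in parallel, which is why looking at JavaScript execution time is the more informative next step.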

Looking into JavaScript performance, DevTools showed that the Next.js version took 300 ms longer to reach first paint and spent a long time evaluating the runtime scripts. DevTools even flagged it as a “long task”.

I compared the two branches of the project to see if there were any implementation differences that could have caused the performance hit. Other than removing unused code and fixing missing TypeScript types, the only change was the implementation of smooth scrolling when navigating to specific parts of the page. This was previously in the gatsby-browser.js file and was moved to a dynamically-imported component so it would only ever be run in the browser. (The npm package we’re using, smooth-scroll, requires the window object at the time it’s imported.) This may very well be the culprit, but I’m just not familiar with how Next.js handles this feature.
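
The underlying constraint is that a package which touches `window` when imported must only be imported in the browser. A framework-agnostic sketch of that guard, assuming the loader is passed in as a function; in the app, the loader would be something like a dynamic `import("smooth-scroll")` whose initialiser calls `new SmoothScroll('a[href*="#"]')`. Next.js's `dynamic()` with `{ ssr: false }` and Gatsby's `gatsby-browser.js` are the framework-specific ways to get the same effect. `initBrowserOnly` and `Loader` are hypothetical names.

```typescript
// True only when running in a browser-like environment with a global window.
function isBrowser(): boolean {
  return "window" in globalThis;
}

// Hypothetical loader type: resolves to an initialiser for the scroll library.
type Loader = () => Promise<() => void>;

// Defers the import until `window` is known to exist, so server-side
// rendering or static builds never evaluate the browser-only module.
async function initBrowserOnly(load: Loader): Promise<boolean> {
  if (!isBrowser()) return false; // on the server: skip entirely
  const init = await load(); // the dynamic import happens here, in the browser
  init();
  return true;
}
```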

Gatsby has a superior developer experience

Ultimately, I decided to stick with the Gatsby version. Disregarding the very minor performance benefits over SSG Next.js (am I really going to nitpick over a 0.6 second difference?), the Gatsby version had more PWA features already implemented, and it would not have been worth the time to re-implement them.

When initially building the Gatsby version, I was able to quickly add the final touches to make a more complete PWA experience. To implement page-specific SEO meta tags, I just had to read their guide. To add a PWA manifest, I just had to use their plugin. And to properly implement favicons that support all the different platforms, which remains a convoluted mess to this day, well, that’s already a part of the manifest plugin I just installed. Huzzah!
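
For comparison, that setup amounts to a couple of plugin entries in `gatsby-config.js`. The sketch is written as a TypeScript object for consistency with the other examples; Gatsby 2 expects the real file to be CommonJS JavaScript that assigns this object to `module.exports`. The option names come from `gatsby-plugin-manifest`, and `gatsby-plugin-react-helmet` is the plugin Gatsby's SEO guide pairs with React Helmet. Every value, including the icon path, is a placeholder.

```typescript
// Hypothetical gatsby-config contents; all values are placeholders.
const gatsbyConfig = {
  siteMetadata: {
    title: "Demo Day",
    description: "Pitches from our portfolio companies",
  },
  plugins: [
    // Lets page components set per-page <title> and meta tags.
    "gatsby-plugin-react-helmet",
    {
      resolve: "gatsby-plugin-manifest",
      options: {
        name: "Demo Day",
        short_name: "Demo Day",
        start_url: "/",
        background_color: "#ffffff",
        theme_color: "#000000",
        display: "standalone",
        // One source image; the plugin generates favicons and manifest icons.
        icon: "src/images/icon.png",
      },
    },
  ],
};
```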

Implementing those features in the Next.js version would have required more work Googling tutorials and best practices and would not have provided any benefit, especially since the Next.js version did not improve performance anyway. This was also the reason I decided to just disable these features when comparing against the Gatsby version. While the Next.js documentation is more succinct (likely since it’s leaner than Gatsby) and I really like their gamified tutorial page, Gatsby’s more expansive documentation and guides provided more value in actually building a PWA, even if it looks overwhelming at first.

There is a lot to be appreciated about Next.js, though:

Its learning curve feels smaller thanks to its tutorial and shorter documentation.

Its primary data fetching architecture revolves around async functions and fetch, so you don’t feel like you need to learn GraphQL to fully utilise the framework.

It has TypeScript support out of the box, while Gatsby requires a separate plugin that doesn’t even do type checking; that requires its own plugin. (When converting the app to Next.js, this caused some issues: I hadn’t realised I had incorrect types, which made the compile fail.)

With its overhauled SSG support, Next.js has become a powerful framework to easily choose between SSR, SSG, and CSR on a page-by-page basis.

In fact, had I been able to fully statically generate this app, Next.js would have been a better fit, since I could have used Algolia’s default JavaScript API and kept the data fetching code in the same file alongside the component. As Algolia does not have a built-in GraphQL API and there is no Gatsby source plugin for Algolia, implementing this in Gatsby would require moving this code to a separate file, which goes against the more intuitive, declarative way of specifying pages.
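
A sketch of that co-located approach with Next.js 9.3's `getStaticProps`, which runs at build time and passes its returned `props` to the page component exported from the same file. `searchCompanies` is a hypothetical wrapper; with Algolia's JavaScript client it would call something like `client.initIndex(...).search(...)`, but the stub keeps the example self-contained.

```typescript
interface Company {
  objectID: string;
  name: string;
}

// Hypothetical wrapper around an Algolia search; stubbed for the sketch.
async function searchCompanies(query: string): Promise<Company[]> {
  const all: Company[] = [
    { objectID: "1", name: "Example Robotics" },
    { objectID: "2", name: "Sample Health" },
  ];
  return all.filter((c) => c.name.toLowerCase().includes(query.toLowerCase()));
}

// Runs at build time (`next build`); the result is baked into static HTML.
export async function getStaticProps(): Promise<{ props: { companies: Company[] } }> {
  const companies = await searchCompanies(""); // empty query matches everything
  return { props: { companies } };
}

// In a real page file, the React component that receives `companies`
// would be the default export of this same module.
```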

There are always more performance improvements

With that out of the way, there were even more performance improvements to be made to get ever closer to that 100 performance score in Lighthouse.

Algolia’s March 2020 newsletter recommended adding a preconnect hint to further improve query performance. (Unfortunately, the email had the wrong code snippet; here’s the correct one.)

Static files should be cached forever. These include the JS and CSS files generated by Gatsby’s webpack config. Gatsby has a great documentation page on this and even has plugins to generate the files for Netlify and Amazon S3. Unfortunately, we have to write our own for Firebase Hosting.

The images we were serving are all JPEGs or PNGs uploaded by our founders and aren’t compressed or optimised. Improving this would require more complicated work and is beyond the scope of this project. Also: it would be really nice to just convert all these images to WebP and store just one very efficient image format. Unfortunately, as with many PWA features, the Safari WebKit team continues dragging their feet on this, and at the time of writing Safari is the only major browser without WebP support.

Thanks for reading! Normally, I would post a link to view the final project but due to legal reasons, it cannot be shared publicly.

You can follow me on Twitter @nots_dney to get updates as I’ll be writing and sharing more about my experiences as a front-end engineer.