The world is transitioning to a new generation of technology, and the applications being developed must meet that generation's standards.

As of 2022, the global population is estimated at around 7.9 billion people. With so many potential users, accessibility and ease of use are significant considerations when building applications.

In this tutorial, we will raise the standard of a generic React-based web application by integrating the Alan AI voice SDK, so that the application can be used by voice.

Before we go any further, let's get a basic understanding of Alan AI.

Alan AI is an artificial intelligence technology that helps app developers add voice assistant functionality to their existing apps.

It's a simple tool to incorporate, and anyone with a basic understanding of JavaScript can do it. Alan AI integration is available to everyone for free.

Let's begin by building a React application with the create-react-app npm package.

**Prerequisites:** Node.js must be installed on your system.

Open your project folder's terminal or command prompt and type the following command:

npx create-react-app alan-ai-react-app

Type y if prompted to proceed.

Installing all the dependencies may take a few minutes.

On completion, a folder named alan-ai-react-app will be created.

Open the project in a code editor (VS Code is a good choice).

The project structure will look like this:

- alan-ai-react-app/
  - public/
    - index.html
    - manifest.json
  - node_modules/
  - src/
    - index.js
    - index.css
    - App.js
    - App.css
  - package.json
  - package-lock.json

Now open a terminal in the editor by going to Terminal > New Terminal.

A terminal will open below the code editor. Type:

npm start

The browser will open automatically, and the application will start on port 3000 by default.

Go back to the terminal, and type:

npm install @alan-ai/alan-sdk-web

This will install the Alan AI SDK into your application.

Go to the package.json file in the root folder to check whether @alan-ai/alan-sdk-web is present in the dependencies.
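
If you would rather check this from a script than by eye, the lookup can be sketched as a small Node.js helper. The function name hasDependency is hypothetical, and the sample object only stands in for a real package.json; the version strings are placeholders, not actual releases.

```javascript
// Hypothetical helper: reports whether a package is listed in a parsed
// package.json object, under either dependencies or devDependencies.
function hasDependency(pkg, name) {
  const has = (obj) =>
    obj != null && Object.prototype.hasOwnProperty.call(obj, name);
  return has(pkg.dependencies) || has(pkg.devDependencies);
}

// Sample data for illustration only. In a real project you could load the file
// with JSON.parse(require("fs").readFileSync("package.json", "utf8")).
const samplePkg = {
  name: "alan-ai-react-app",
  dependencies: {
    react: "x.y.z",
    "@alan-ai/alan-sdk-web": "x.y.z",
  },
};

console.log(hasDependency(samplePkg, "@alan-ai/alan-sdk-web")); // true
console.log(hasDependency(samplePkg, "left-pad")); // false
```

If the first check prints false against your own package.json, run the npm install command again from the project root.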

Open the App.js file in the src folder and replace its code with:

import React from "react";
import alanBtn from "@alan-ai/alan-sdk-web";
import "./App.css";

const App = () => {
  // Holds the Alan button instance across renders
  const alanAIRef = React.useRef(null);
  // Items the user has ordered so far
  const [orderList, setOrderList] = React.useState([]);

  // Create the Alan button once, when the component mounts
  React.useEffect(() => {
    alanAIRef.current = alanBtn({
      key: "API_KEY", // placeholder, replaced with the real SDK key later
    });
  }, []);

  return (
    <div className="app">
      <h1>Demo Restaurant</h1>
      <p>
        Place an order just by clicking on the Alan AI voice button
        <br />
        And say "Pizza", "Burger", or any items
      </p>
      <div className="order-container">
        {orderList && orderList.length > 0 ? (
          <>
            <p>Your Order for</p>
            <ul className="order-list">
              {orderList.map((order, index) => (
                <li className="order-list-item" key={index}>
                  {order}
                </li>
              ))}
            </ul>
            <label>have been placed</label>
          </>
        ) : (
          "No order placed yet"
        )}
      </div>
    </div>
  );
};

export default App;

Then replace the code in App.css with:

.app {
  text-align: center;
}

.order-container {
  margin-top: 2rem;
}

.order-container > p {
  font-weight: 500;
}

.order-list {
  padding: 0;
}

.order-list-item {
  list-style-type: none;
  box-sizing: border-box;
  padding: 1rem;
  margin: 0 1rem;
  display: inline-block;
  box-shadow: rgba(0, 0, 0, 0.16) 0px 3px 6px, rgba(0, 0, 0, 0.23) 0px 3px 6px;
}

We have now completed the UI of our application and integrated the Alan AI button.

It's time to add some functionality. When we say the name of an item to the voice assistant, the item should be added to our order list and displayed on the screen.

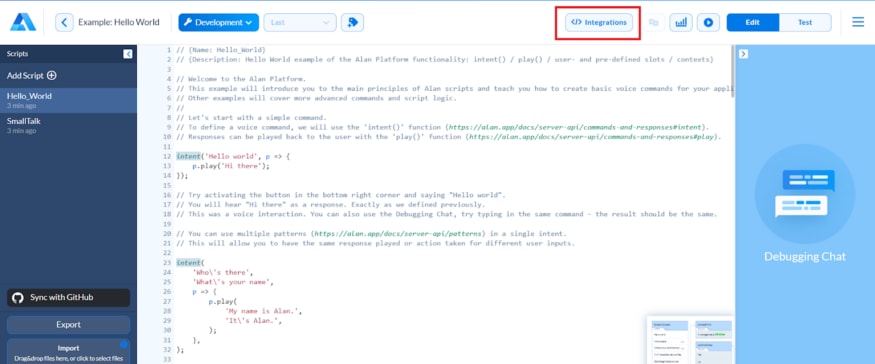

Go to studio.alan.app and create an account to get some free integrations.

After signing in to the application, the project page will be displayed. Select Create Voice assistant > Example: Hello World

This will create a voice assistant with some pre-written commands.

To integrate the assistant, click on the Integration button at the top.

Click on Copy SDK Key, then replace “API_KEY” in App.js in your React application with the copied key.

A voice assistant button will now be available in your React application in the browser.

Click on it and say “Hello!” or “How are you?”. It will reply according to the commands written in Alan Studio.

Go back to the Alan Studio web app and replace the code in the HelloWorld file with:
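
Pasting the key directly into the source works, but it also commits the key to version control. One possible alternative: Create React App exposes environment variables prefixed with REACT_APP_ to the application at build time, so the key can live in a .env file instead. The sketch below is only an illustration; the variable name REACT_APP_ALAN_KEY and the helper getAlanKey are assumptions, not part of the Alan SDK.

```javascript
// Hypothetical helper: reads the Alan SDK key from an environment-like object.
// In a Create React App project you would pass process.env, with the key defined
// in a .env file as REACT_APP_ALAN_KEY=<your key> (the variable name is an assumption).
function getAlanKey(env) {
  const key = env.REACT_APP_ALAN_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error("REACT_APP_ALAN_KEY is not set");
  }
  return key.trim();
}

// Illustration with a stand-in object instead of the real process.env.
const fakeEnv = { REACT_APP_ALAN_KEY: " demo-key " };
console.log(getAlanKey(fakeEnv)); // demo-key
```

With this in place, key: getAlanKey(process.env) could take the place of key: "API_KEY". The development server needs a restart after .env changes, and the key is still shipped to the browser in the built bundle, so this keeps it out of the repository rather than making it secret.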

// Phrases that trigger the greeting
const helloPatterns = [
    'Help',
    'I need some help',
    'I am stuck',
    'Please guide me',
    'Hello assistant',
];

intent(helloPatterns, p => {
    p.play('Hey, I am your food assistant. You can give me your order. What would you like to eat?');
});

// Capture any other phrase into the COMPLAINT slot and send it to the app as an item
intent(`$(COMPLAINT* (.*))`, p => {
    p.play(`${p.COMPLAINT.value} is being added`);
    p.play({command: "add", item: p.COMPLAINT.value});
});

// Phrases that empty the order list
const clearPatterns = [
    'Clear',
    'checkout',
    'I am done',
    'done',
    'finish',
];

intent(clearPatterns, p => {
    p.play(`Cart has been cleared`);
    p.play({command: "clear"});
});

Back in App.js, replace the useEffect code with:

React.useEffect(() => {
  alanAIRef.current = alanBtn({
    key: "API_KEY",
    // Called whenever the voice script sends a command object to the app
    onCommand: function (commandData) {
      switch (commandData.command) {
        case "add": {
          const { item } = commandData;
          // Append the new item to the existing order list
          setOrderList((orLst) => {
            return [...orLst, item];
          });
          break;
        }
        case "clear": {
          setOrderList([]);
          break;
        }
        default:
          break;
      }
    },
  });
}, []);
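
The switch statement above maps each command sent by the voice script onto a state update. To make that mapping easier to reason about and to test outside React, it can be sketched as a pure function. The name applyCommand is hypothetical; the command shapes, {command: "add", item} and {command: "clear"}, are the ones the script in this tutorial sends.

```javascript
// Hypothetical pure function mirroring the onCommand switch: given the current
// order list and a command object, return the next order list without
// mutating the input.
function applyCommand(orderList, commandData) {
  switch (commandData.command) {
    case "add":
      return [...orderList, commandData.item];
    case "clear":
      return [];
    default:
      // Unknown commands leave the list unchanged.
      return orderList;
  }
}

// Simulate a short voice session.
const commands = [
  { command: "add", item: "Pizza" },
  { command: "add", item: "Burger" },
  { command: "clear" },
  { command: "add", item: "Noodles" },
];
const finalOrder = commands.reduce(applyCommand, []);
console.log(finalOrder); // [ 'Noodles' ]
```

Inside the component, the handler could then shrink to onCommand: (commandData) => setOrderList((list) => applyCommand(list, commandData)), which keeps the React code thin and the ordering logic testable on its own.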

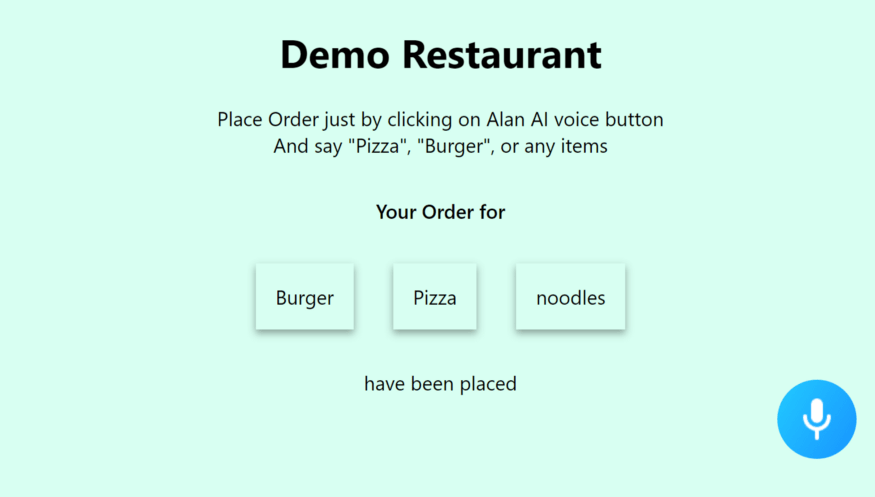

Save the code. It's time to test the implementation.

Go to the React application in the browser, click on the voice assistant button, say “Hello assistant”, and then continue with food names such as “Pizza”, “Burger”, or “Noodles”.

It will look something like:

After adding items, say “Clear”, “Checkout”, or a similar phrase to clear the cart.

This is how you can integrate Alan AI to enable voice commands in your React application.

The demo application is available on: https://alan-ai-react-integration.netlify.app

The code is open source at: https://github.com/raghavdhingra/Alan-AI-React-integration

Join the Alan AI community at https://bit.ly/alan-slack