Why do we keep doing whiteboard interviews, even though they tell us little about how good the applicant is at actual software development?00:37 AM - 20 Mar 2015

Amy Hendrix@sabreuse

Amy Hendrix@sabreuse @sarahmei tech interviews depend a lot on hazing mentality: we went through this, so we have to make them go through it toi00:48 AM - 20 Mar 2015

@sarahmei tech interviews depend a lot on hazing mentality: we went through this, so we have to make them go through it toi00:48 AM - 20 Mar 2015

Technical interviews in technology have come a long way for such a young industry. Candidates from 10 or so years ago were famously given brain teasers at companies like Microsoft, like “Why are manholes round?” When these questions were demonstrated to reveal little about the caliber of candidates, the practice was discontinued. Later on, companies peppered interviewees with trivia, including pages of javascript questions and random factoids about various languages. For the most part, companies today are focusing more and more on interviews that are platform and language agnostic -- intended to show only the problem solving ability of a particular person. However, this process still has its flaws.

Most engineers will admit that many good candidates can be missed by a traditional technical interview. Candidates that are out of practice, on an off day, or even (god forbid!) never really understood graph theory might do poorly in an interview or two and the company that rejected them misses out on a great candidate. However, many people believe that losing some of these “good” candidates is OK as long as they don’t accidentally hire “bad” engineers along the way.

In the words of Stack Overflow cofounder Joel Spolsky “if you reject a good candidate, I mean, I guess in some existential sense an injustice has been done, but, hey, if they’re so smart, don’t worry, they’ll get lots of good job offers.”

But is that mindset enough to uphold the interview status quo? According to this piece in Tech Crunch: “Historically, a false positive has been perceived as the disaster scenario; hiring one bad engineer was viewed as worse failing to hire two good ones. But good engineers are so scarce these days, that no longer applies.” No one wants to hire a bad candidate. But companies pour millions of dollars into recruiting costs in the process of avoiding one. Let’s look at the pricetag for one candidate. There are the obvious costs: like travel expenses for onsite candidates, but recruiting processes have lots of additional costs as well. According to an estimate from Recruiter Box:

- Posting on job boards can cost between $40-500 depending on the method

- Reviewing applicants takes from 10-24 hours costing companies $500+

- Prescreening processes take 2-4 hours, which is easily $100-200

- Interview preparations by recruiters take 1-2 hours, costing $40

- Onsite interviews typically take 4-5 hours of developer time, $200-400

- The wrap-up process, including making offers, talking to candidates and checking references can take around 8 hours, ~$200

The grand total for this estimate is: $1080-1840 without travel costs.

And very rarely will the first person to get through the process accept an offer. According to an analysis by Glassdoor, companies spend an average of ~35 days interviewing 120 candidates for a single engineering position. Of this number, 23 make it to a screen, 5.8 are brought onsite, and 1.7 are given offers. So you’re looking at $40-500 + $500 + $100-200 + $920 ($40*23) + $1160-2320 ($200-400*5.8) + 340 ($200*1.7) = $3060-4780.

This cost is on the conservative side, but it pales in comparison to the costs of making a bad hire. A bad call can cost teams tens of thousands of dollars in costly mistakes and correction time. So what are companies to do?

Does interviewing even work?

Studies show that the best possible way to evaluate candidates is through a “work sample test” meant to reflect the kind of work the candidate will be doing. This is why technical interviews rose to popularity over conventional “behavioral” interviews. A psychology study by the University of Toledo in 2000 found that judgements made within 10 seconds of an interview consistently predicted the overall outcome. By doing whiteboarding or coding interviews, we seek to eliminate that bias by judging performance against a (more) objective problem.

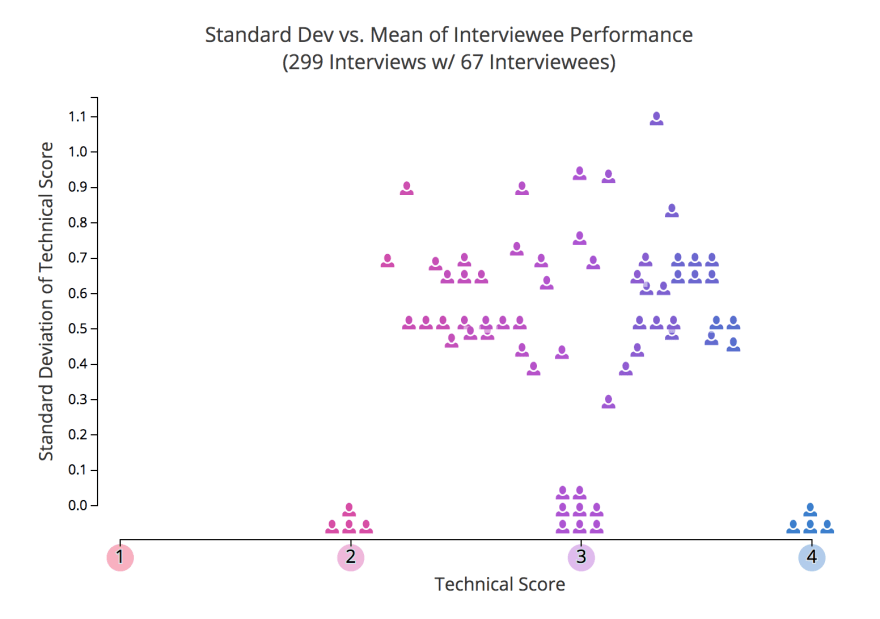

However, according to a study by interviewing.io, which is a platform for practicing technical interviews, performance in these interviews does not necessarily correlate strongly to job performance.

Technical interviewing performance source

The site found that performance varied widely, with just about 25% of candidates performing consistently at the same level. Even the “strong” performers, did poorly 22% of the time. The more concerning part of these revelations is the consistency. Some “good” candidates are bound to slip up now and again, but that’s ok as long as “poor” performers are not making it through the process. But if only a quarter of the interviewees are consistent, what does that say about the process? It’s certainly got room for improvement.

Part of the problem with technical interviews is the focus on one particular skill set. Most interview processes take it for granted that the goal is to hire the most qualified person--which most of us take to mean “smartest.” The problem is that this isn’t necessarily the best predictor of success. A study from the NYT a few years ago documented a series of studies showing that teams that were dominated by a few very “intelligent” engineers, by the standard of IQ tests, did consistently less well on all metrics than those teams that were A. More collaborative, B. Ranked higher on the ability to read emotional intelligence, and C. Had more diversity. None of these factors are screened in a traditional coding test. Although we ask questions to gauge cultural fit and collaboration, these factors are often an afterthought compared to performance on the technical portion of the interview.

Alternatives to whiteboard interviews

There are other technical methods of assessing candidates, but none are being adopted at scale in the technology industry. Some companies give candidates a technical project to work on, at least in lieu of a tech screen. The problem is that it can be difficult to come up with a project that is small enough to be feasible to complete and complex enough to reflect a normal day as a software engineer. Many companies also fear that these tests are often plagiarized by aspiring jobseekers. In addition, many candidates feel they should be paid for their efforts to complete these projects, some of which have even been used by the company post-interview!

Some companies like Stripe, use a different flavor of the typical technical interview. Stripe allows candidates to use their own laptops and look up syntax on sites like stack overflow in an effort to more closely mimic the conditions of the job they are seeking. In addition to technical questions, they incorporate interviews focused on a candidate’s ability to explain a technical project they worked on and answer basic engineering design questions.

Amazon has experimented with group interviews, where candidates are allowed to select a technical problem (from a given set) and work on it over the course of several hours, before being given the chance to explain their approach.

At Helpful they conduct simple 1 hour interviews and give offers to all candidates deemed worthy. Then they put those candidates on a probationary period (30-60 days) to see how they do on the job. This approach presents its own challenges since many engineers may find such a process demeaning, but it is an interesting spin on traditional interview processes.

These companies have written about their new processes and the kinds of results they are getting and it looks promising! If the technology industry values innovation so highly, what better place to practice this principle than within our recruiting systems?

Where do we go from here?

While many of the alternative interviewing strategies mentioned above are promising, there are deeper rooted problems with technical interviews. Egos run amok as software engineers who have great interviewing confidence are harsh on less experienced candidates, who may be great engineers that lack practice or may be prone to nervousness.

For some reason, the idea that it is necessary to hire “the best of the best” has spread quickly throughout the technology industry. When I was in college, I met with recruiting managers to discuss their strategies for hiring as a part of my role in a student group. Most of these recruiters echoed this need to find only the absolutely best candidates. While this attitude has a place at the executive level, when I was in school I was confident that at least 70% of the engineering students would be able to fulfill the job requirements these companies were seeking. Even if you require above average engineering skill, conservatively 35% of these students would be able to perform well at the jobs you are offering. Yet, they still discussed wanting to hire only the top 1-5%.

Elitest penguins source

The elitist need to hire the “top 5%” of engineers and the accompanying elitist belief that those who have made it through the process are part of the “top 5%” do not account for the random elements of interviewing. (This mindset is correlated to the idea that tech workers are at the top of the food chain because of their technical skills. This “skills gap” theory has its own critics.) The problem with looking for the top is that as shown by interviewing.io even top performers are bound to mess up 22% of the time! While we would like to believe that interviewing is objective, other studies suggest that elements of this process are out of our control--like our ability to form a connection with the interviewer within the first 10 seconds.

The problem isn’t just the interviewing stage of the pipeline. Many recruiters filter out good candidates early on on dubious criteria. According to Triplebyte, which specializes in filtering for good candidates that would be a match for Ycombinator startups, candidates that do well in the hiring process are those that reflect the “backgrounds of the founders.” Even non-technical recruiters will reject 50% of applicants by pattern matching against these criteria at most of these companies. And because founders tend to lack diversity to a severe degree (just 3% of VC funding goes to female founders and only 1% goes to black founders) this is a big roadblock to improving diversity in technology.

Where do we go from here? It’s impossible to arrive at a better solution without iterating on new ideas. Start by experimenting slightly with traditional technical interview formats and run the numbers--how do hired candidates in one process compare to hired candidates in another? It is well known that companies like Amazon rely heavily on this data to refine their interviewing process from year to year. And the growing prevalence of Amazon’s group interview format suggests that this new method has proven successful.

Aside from systematic changes, as engineers who interview it is important to try and make candidates comfortable, to be aware of our personal biases, and to let go of the belief that we can only work with the 1% smartest possible engineers. These beliefs are limiting and fundamentally wrong. And as we know, there are more than enough software engineering jobs to go around.

Plenty of coding to go around source

Share what has worked for your company in the comments!