1. Preface

At WWDC 2023, Apple introduced the mixed reality device Apple Vision Pro, alongside the spatial computing platform visionOS. As Apple CEO Tim Cook put it: 'Just as the Mac introduced us to personal computing, and iPhone introduced us to mobile computing, Apple Vision Pro introduces us to spatial computing.' This article covers Apple Vision Pro, visionOS, visionOS development, and application scenarios. For visionOS design drafts, Codia AI Design can transform screenshots into editable Figma UI designs; for code, Codia AI Code supports converting Figma designs to SwiftUI code.

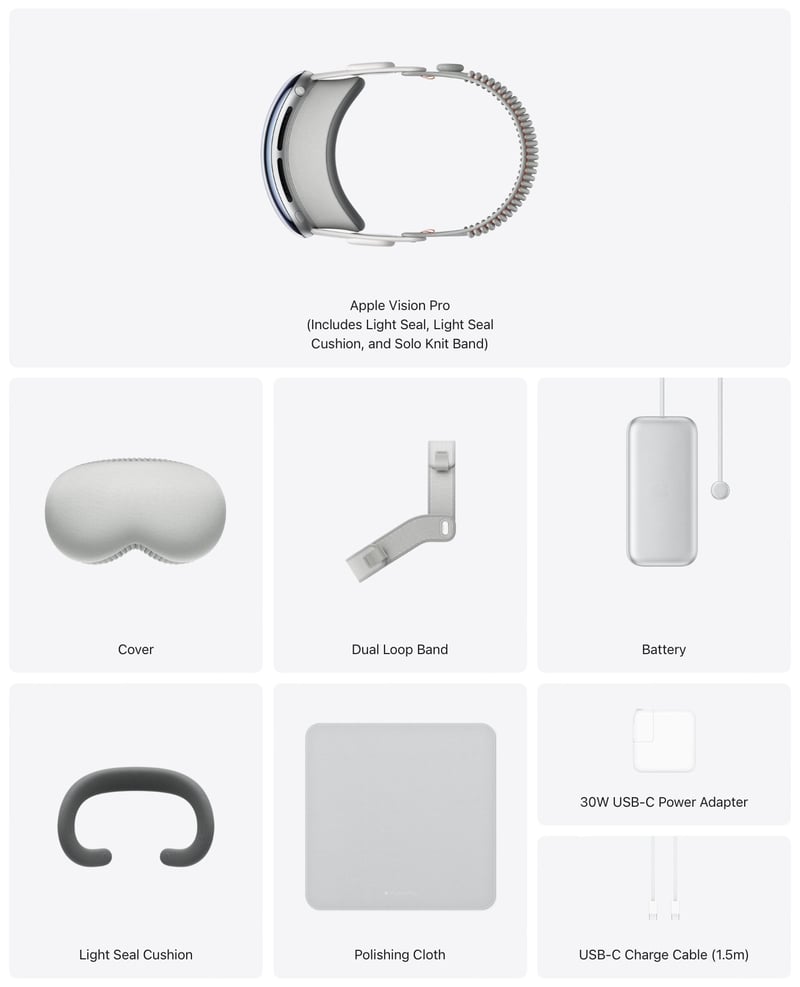

2. Vision Pro

At 8 a.m. Eastern Time on January 19, 2024, Apple began taking pre-orders for Apple Vision Pro in the United States. As with most new Apple product launches, the first batch sold out quickly: within hours, the estimated delivery time had slipped to mid-March.

2.1. Vision Pro Introduction

Apple Vision Pro is a head-worn computer that Apple says offers a unique mixed-reality experience. It combines virtual reality and augmented reality, and it ties into Apple's ecosystem, connecting smoothly with commonly used applications and services such as FaceTime and Disney+. Compared with other products on the market, Vision Pro stands out for its more intelligent interaction and its extensive perception of the real world. It aims to provide an immersive experience while keeping users connected to their surroundings.

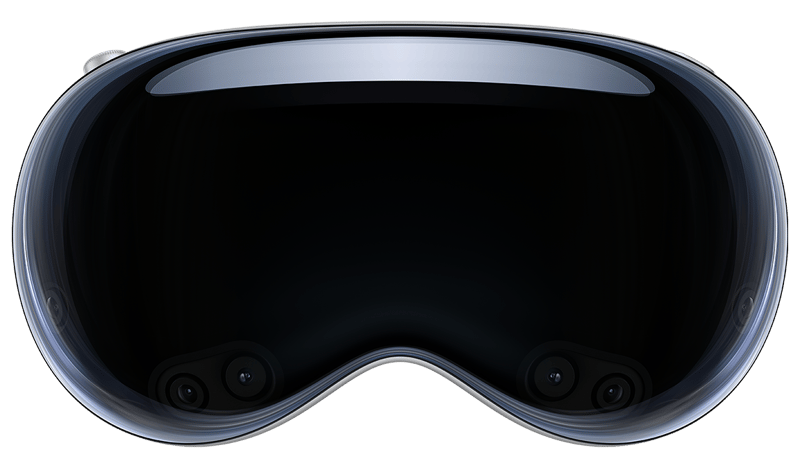

2.2. The Working Principle of Vision Pro

Vision Pro captures information from the real world through an array of cameras and sensors and blends it with virtual content to create a mixed-reality experience. When users put on the headset, they first see their real-world surroundings, with digital content overlaid on that view. Users navigate with their eyes: they look at an application or control to target it, then select it with a simple tap of their fingers. Because external cameras track the hands, gestures are recognized without any handheld controller.

2.3. The Design of Vision Pro

The design philosophy of Vision Pro is to integrate virtual elements into the real world, so that users can, for example, watch content on a virtual screen that feels 100 feet wide. Users can adjust the display to their personal preferences. Content such as video programs further enhances the realism of the scene.

3. VisionOS

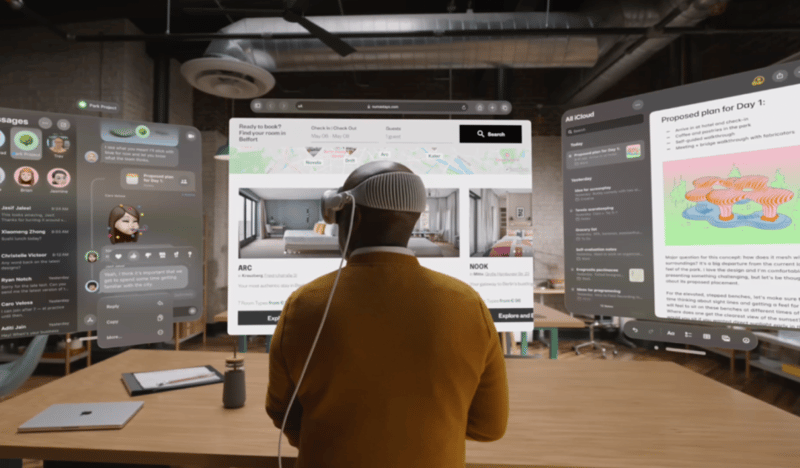

3.1. Overview of VisionOS

Apple Vision Pro runs a new operating system, visionOS, which plays the same role as iOS on the iPhone, iPadOS on the iPad, and macOS on the Mac. Designed specifically for Vision Pro, visionOS is described by Apple as 'the world's first spatial operating system.' Unlike traditional desktop and mobile computing, spatial computing places the working environment right before the user's eyes, using changes in natural light and shadows to help users understand scale and distance, so that digital content appears to be part of the real world. Users can interact with applications while staying connected to their surroundings or be fully immersed in a world of their creation.

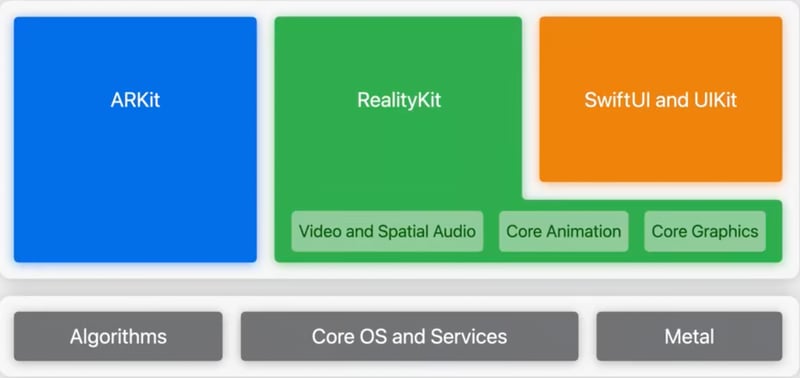

visionOS is built on the foundation of macOS, iOS, and iPadOS. It combines iOS frameworks with new spatial frameworks, a multi-app 3D engine, an audio engine, a dedicated rendering subsystem, and a real-time subsystem. At the architectural level, visionOS shares core modules with macOS and iOS; the added real-time subsystem handles interactive visual effects on Apple Vision Pro. visionOS has its own App Store, and Vision Pro can also run hundreds of thousands of familiar iPhone and iPad applications.

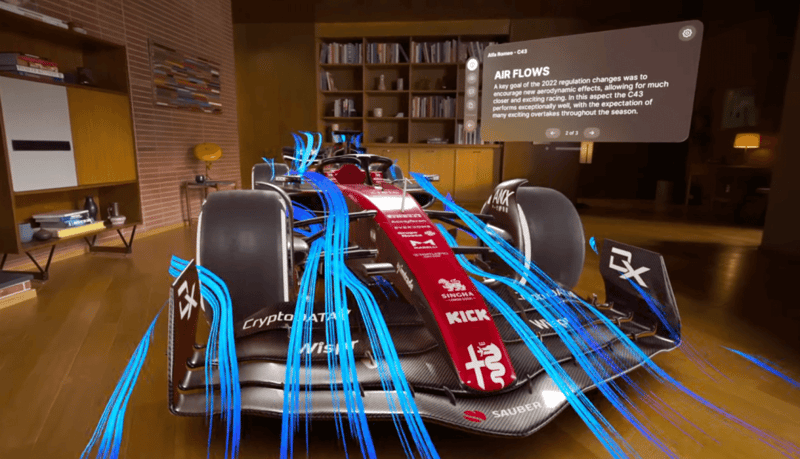

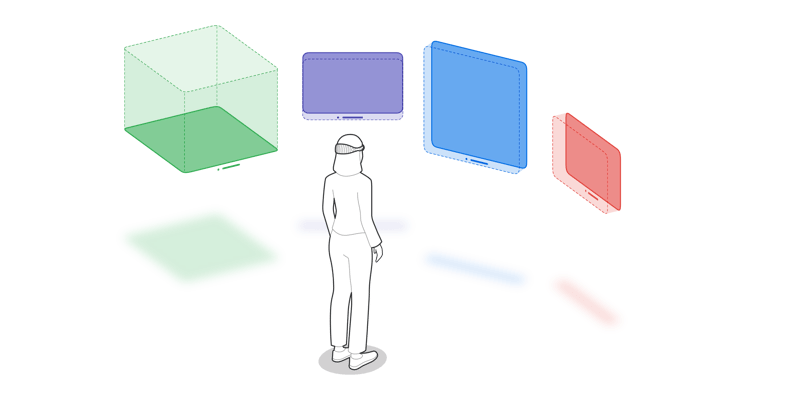

3.2. Forms of Content Presentation

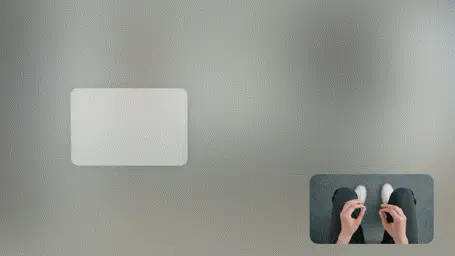

Apple Vision Pro offers an infinite spatial canvas to explore, experiment, and play, giving you the freedom to completely rethink your experience in 3D. People can interact with your app while staying connected to their surroundings, or immerse themselves completely in a world of your creation. And your experiences can be fluid: start in a window, bring in 3D content, transition to a fully immersive scene, and come right back.

The choice is yours, and it all starts with the building blocks of spatial computing in visionOS.

Key building blocks of visionOS:

Windows: You can create one or more windows in your visionOS app. They’re built with SwiftUI and contain traditional views and controls, and you can add depth to your experience by adding 3D content.

Volumes: Add depth to your app with a 3D volume. Volumes are SwiftUI scenes that can showcase 3D content using RealityKit or Unity, creating experiences that are viewable from any angle in the Shared Space or an app’s Full Space.

Spaces: By default, apps launch into the Shared Space, where they exist side by side, much like multiple apps on a Mac desktop. Apps can use windows and volumes to show content, and the user can reposition these elements wherever they like. For a more immersive experience, an app can open a dedicated Full Space where only that app’s content will appear. Inside a Full Space, an app can use windows and volumes, create unbounded 3D content, open a portal to a different world, or even fully immerse people in an environment.
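As a rough illustration of how these three building blocks map onto SwiftUI scene types, here is a minimal sketch. The app name, view names, and scene identifiers (`SpatialSketchApp`, `MainView`, `"main"`, `"globe"`, `"immersive"`) are made up for this example, and the sizes and positions are arbitrary.

```swift
import SwiftUI
import RealityKit

@main
struct SpatialSketchApp: App {
    // Immersion style for the Full Space; `.mixed` keeps passthrough visible.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // Window: a regular SwiftUI window that opens in the Shared Space.
        WindowGroup(id: "main") {
            MainView()
        }

        // Volume: a bounded 3D scene that can be viewed from any angle.
        WindowGroup(id: "globe") {
            RealityView { content in
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.2),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                content.add(sphere)
            }
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)

        // Full Space: only this app's content is visible while it is open.
        ImmersiveSpace(id: "immersive") {
            RealityView { content in
                let box = ModelEntity(
                    mesh: .generateBox(size: 0.3),
                    materials: [SimpleMaterial(color: .red, isMetallic: true)]
                )
                // Roughly chest height, 1.5 m in front of the scene origin.
                box.position = [0, 1.2, -1.5]
                content.add(box)
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .progressive, .full)
    }
}

struct MainView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 16) {
            Button("Show volume") { openWindow(id: "globe") }
            Button("Enter Full Space") {
                Task { _ = await openImmersiveSpace(id: "immersive") }
            }
        }
        .padding()
    }
}
```

The app starts in the Shared Space with the plain window; the volume and the Full Space are opened on demand, which matches the "start in a window, bring in 3D content, transition to a fully immersive scene" flow described above.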

3.3. Key Features

3.3.1. visionOS is compatible with applications on macOS, iOS, and iPadOS

visionOS is fundamentally an extension of iOS and iPadOS, so it integrates easily with the computers and phones users are already accustomed to. Users can view their phone or computer screen directly on the large virtual display of Apple Vision Pro, work using voice commands or a physical keyboard, or use the phone's touch input as a mouse, keeping the habits they have built on other smart devices.

3.3.2. All applications must exist in a 3D space

All visionOS applications will "float" in a 3D space, even basic 2D applications ported from iOS or iPadOS. Traditional UI elements have acquired new Z offset options, allowing developers to push panels and controls into the 3D space, making specific interface elements float in front of or behind others.
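A small sketch of the Z offset idea, assuming a simple card layout invented for this example (`LayeredCard` and its sizes are not from any Apple sample). On visionOS, SwiftUI's `offset(z:)` modifier moves a view along the depth axis.

```swift
import SwiftUI

struct LayeredCard: View {
    var body: some View {
        ZStack {
            // Background panel stays on the window plane.
            RoundedRectangle(cornerRadius: 24)
                .fill(.regularMaterial)
                .frame(width: 320, height: 200)

            Text("Floating label")
                .font(.title)
                .padding()
                .glassBackgroundEffect()
                // Lift the label 40 points off the panel, toward the viewer.
                .offset(z: 40)
        }
    }
}
```

Only the label is offset here, so it appears to hover in front of the panel while both remain part of the same window.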

3.3.3. Controller-free interaction

Vision Pro needs no additional controllers: it is operated with the eyes, hands, and voice. High-precision eye-tracking sensors inside the headset turn the user's gaze into a pointer, and whichever UI element the user looks at is highlighted automatically.

3.3.4. Video passthrough

Thanks to a dozen cameras and precise eye tracking, Vision Pro fuses VR and AR modes. The cameras feed a live view of the surroundings to the displays, and eye tracking models where the user is focusing, so the software UI appears integrated with the real world whether the user looks at screen content or at the environment. By turning the Digital Crown, users can adjust how much of the real-world background remains visible, letting the UI transition naturally between full immersion and complete transparency.

3.3.5. Application development relies on existing tools

Developers build user interfaces with SwiftUI and UIKit, display 3D content, animations, and visual effects with RealityKit, and gain real-world spatial understanding through ARKit. Meanwhile, Apple's collaboration with Unity allows Unity-based content to be brought to visionOS with minimal conversion work. Together, these let developers with experience in existing tools build products quickly, lowering the learning cost and helping products reach users sooner.

3.4. Interaction Methods

Visual interaction: By adopting eye-tracking technology, navigational focus is achieved through visual attention. Whichever button the user looks at enters a state of readiness; whichever window the user gazes at becomes active. From the moment the user sees an element, they can interact with it using gestures, eliminating the concept of a cursor at the GUI level.

Voice interaction: When the visual and tactile channels are occupied, voice interaction can play a role in communicating with and controlling the system. Voice is adept at flattening the menu structure, providing direct access to what the user needs.

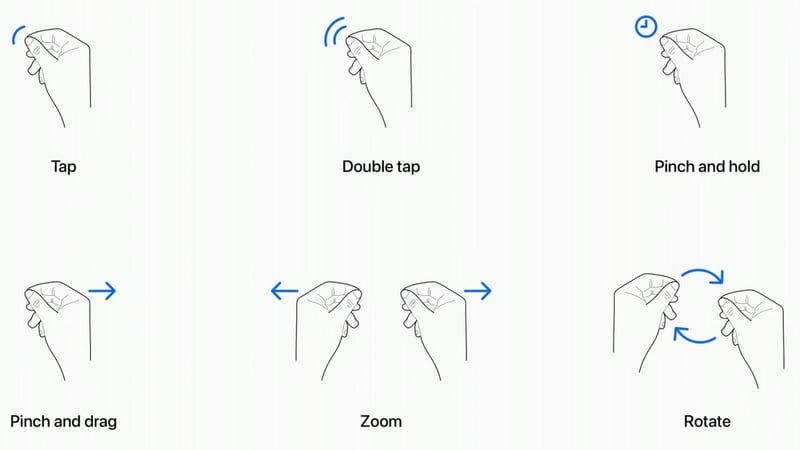

Gesture interaction: After the user selects a button with their gaze, visionOS further determines what event should be triggered based on the user's gestures. For example, when a user pinches their thumb and index finger together, the button triggers a "click" event, and when the user keeps the pinch and moves their hand, the button triggers a "drag" event. visionOS also supports custom gestures.
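The gaze-plus-pinch logic described above can be modeled as a tiny state machine. This is a conceptual sketch in plain Swift, not how visionOS is implemented: `HandInput`, `InterfaceEvent`, and `GestureResolver` are hypothetical types invented for this example, and targets are identified by simple strings.

```swift
// Hypothetical model types; none of these are visionOS APIs.
enum HandInput: Equatable {
    case pinchBegan
    case handMoved(dx: Double, dy: Double)
    case pinchEnded
}

enum InterfaceEvent: Equatable {
    case click(target: String)
    case drag(target: String, dx: Double, dy: Double)
}

struct GestureResolver {
    // Element that was under the user's gaze when the pinch began.
    var pinchedTarget: String?
    // Whether the hand moved while the pinch was held.
    var didDrag = false

    /// Combines the current gaze target with a hand input and returns the
    /// event to deliver, if any.
    mutating func handle(_ input: HandInput, gazeTarget: String?) -> InterfaceEvent? {
        switch input {
        case .pinchBegan:
            // The gaze picks the target at the moment the pinch starts.
            pinchedTarget = gazeTarget
            didDrag = false
            return nil
        case let .handMoved(dx, dy):
            // Movement while pinching becomes a drag on the pinched target.
            guard let target = pinchedTarget else { return nil }
            didDrag = true
            return .drag(target: target, dx: dx, dy: dy)
        case .pinchEnded:
            // A pinch released without movement counts as a click.
            defer { pinchedTarget = nil }
            guard let target = pinchedTarget, !didDrag else { return nil }
            return .click(target: target)
        }
    }
}
```

In this model, looking at a button and pinching once yields a `click` when the pinch is released, while pinching and moving the hand yields a stream of `drag` events and no click at the end.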

3.5. Development Tools and Frameworks

Developing for visionOS involves a set of tools and frameworks, each designed to leverage the unique capabilities of spatial computing:

SwiftUI: This is the go-to framework for building apps for visionOS, offering 3D capabilities, gesture recognition, and immersive scene types. SwiftUI integrates with RealityKit, Apple's 3D rendering engine, to produce sharp, responsive, volumetric interfaces.

RealityKit: A powerful 3D rendering engine that supports advanced features like adjusting to physical lighting conditions, casting shadows, and building visual effects. It also utilizes MaterialX for authoring materials.

ARKit: This framework enhances spatial understanding, enabling Vision Pro apps to interact seamlessly with the surrounding environment. It provides robust APIs for Plane Estimation, Scene Reconstruction, Image Anchoring, World Tracking, and Skeletal Hand Tracking.

visionOS Simulator: Part of Xcode, the simulator offers a range of room layouts and lighting conditions for testing apps in a virtual environment.

Accessibility Features: visionOS is designed to be inclusive, offering Pointer Control as an alternative navigation method and ensuring that apps can cater to users who rely on eye or voice interactions.
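To give a feel for the ARKit side on visionOS, here is a minimal sketch of plane detection using `ARKitSession` and `PlaneDetectionProvider`. The function name `observePlanes` is hypothetical. It assumes the app has an open Full Space and that the user has granted world-sensing permission; without those, ARKit data is not delivered.

```swift
import ARKit

/// Runs plane detection and logs every anchor update.
/// Hypothetical helper; call it from a task while a Full Space is open.
func observePlanes() async {
    // Data providers report whether the current device supports them.
    guard PlaneDetectionProvider.isSupported else {
        print("Plane detection is not supported in this environment.")
        return
    }

    let session = ARKitSession()
    let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    do {
        try await session.run([planes])
    } catch {
        print("Could not start the ARKit session: \(error)")
        return
    }

    // Each update says whether a plane was added, updated, or removed.
    for await update in planes.anchorUpdates {
        let anchor = update.anchor
        print("\(update.event): \(anchor.classification) plane, id \(anchor.id)")
    }
}
```

The other capabilities listed above follow the same pattern with their own providers, for example `HandTrackingProvider` or `SceneReconstructionProvider`, passed to the same `run` call.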

3.6. Getting Started with Development

This section walks through creating a simple immersive app using SwiftUI, RealityKit, and ARKit, three of the key frameworks for visionOS development. The steps create a simple 3D object, render it in 3D space, and add interaction with it. For the UI layer, Codia AI Code supports converting Figma designs to SwiftUI code.

Setting up the Project:

- Create a new project in Xcode and select the visionOS app template.

- Ensure that SwiftUI is selected as the user interface option.

Creating the SwiftUI View:

- Define a new SwiftUI view that will host your 3D content.

```swift
import SwiftUI
import RealityKit

struct ContentView: View {
    var body: some View {
        // Let the 3D view fill the whole screen.
        ARViewContainer()
            .ignoresSafeArea()
    }
}
```

Creating the ARViewContainer:

- Implement an `ARViewContainer` struct that creates an `ARView` and adds a simple 3D object to it.

```swift
// Note: ARView is RealityKit's view on iOS and iPadOS. It is not available
// on visionOS, where RealityView takes its place.
struct ARViewContainer: UIViewRepresentable {
    func makeUIView(context: Context) -> ARView {
        let arView = ARView(frame: .zero)

        // Anchor a 10 cm red metallic box to the first horizontal plane found.
        let anchor = AnchorEntity(plane: .horizontal)
        let box = MeshResource.generateBox(size: 0.1)
        let material = SimpleMaterial(color: .red, isMetallic: true)
        let boxEntity = ModelEntity(mesh: box, materials: [material])
        anchor.addChild(boxEntity)
        arView.scene.addAnchor(anchor)

        return arView
    }

    // Nothing to update: the scene is static after creation.
    func updateUIView(_ uiView: ARView, context: Context) {}
}
```

Interacting with the 3D Object:

- Implement gestures to interact with the 3D object. For example, add a tap gesture to change the color of the box when tapped.

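```swift
// A struct cannot be the target of an @objc selector, so the tap is
// handled by a small NSObject subclass that holds a reference to the box.
final class BoxTapHandler: NSObject {
    private let boxEntity: ModelEntity

    init(boxEntity: ModelEntity) {
        self.boxEntity = boxEntity
    }

    // Any tap on the view turns the box blue.
    @objc func handleTap(_ gesture: UITapGestureRecognizer) {
        let material = SimpleMaterial(color: .blue, isMetallic: true)
        boxEntity.model?.materials = [material]
    }
}

extension ARViewContainer {
    // Call from makeUIView(context:) once the box exists. The caller must keep
    // `handler` alive, because a gesture recognizer does not retain its target.
    func setupGestures(arView: ARView, handler: BoxTapHandler) {
        let tapGesture = UITapGestureRecognizer(
            target: handler, action: #selector(BoxTapHandler.handleTap(_:)))
        arView.addGestureRecognizer(tapGesture)
    }
}
```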

Adding ARKit Features:

- Integrate ARKit features such as Plane Estimation or Image Anchoring if needed.

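```swift
// Inside makeUIView(context:), after creating arView (requires `import ARKit`).
// Note: ARWorldTrackingConfiguration is the iOS and iPadOS ARKit API. On
// visionOS, ARKitSession with data providers such as PlaneDetectionProvider
// serves this purpose.
let configuration = ARWorldTrackingConfiguration()
configuration.planeDetection = [.horizontal, .vertical]  // floors, tables, walls
arView.session.run(configuration)
```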

Testing the App:

- Run the app on a compatible device.

- Interact with the 3D object in a real-world environment.
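The steps above rely on `ARView`, which is an iOS and iPadOS API. On visionOS, the same idea (a red box that reacts to a tap) would typically go through `RealityView` and SwiftUI gestures instead. The following is a minimal sketch under that assumption; `BoxView` is a name chosen for this example, and the view would be placed in a window, volume, or immersive space of a visionOS app.

```swift
import SwiftUI
import RealityKit

struct BoxView: View {
    // Tracks which color the box currently shows.
    @State private var isBlue = false

    var body: some View {
        RealityView { content in
            // A 10 cm red metallic box, as in the steps above.
            let box = ModelEntity(
                mesh: .generateBox(size: 0.1),
                materials: [SimpleMaterial(color: .red, isMetallic: true)]
            )
            // Entities need an input target and collision shapes
            // before they can receive gestures.
            box.components.set(InputTargetComponent())
            box.generateCollisionShapes(recursive: false)
            content.add(box)
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    // Look at the box and pinch: toggle between red and blue.
                    guard let model = value.entity as? ModelEntity else { return }
                    isBlue.toggle()
                    model.model?.materials = [
                        SimpleMaterial(color: isBlue ? .blue : .red, isMetallic: true)
                    ]
                }
        )
    }
}
```

Because the gesture is targeted to entities, the tap only fires when the user is looking at the box while pinching, which lines up with the gaze-and-pinch interaction model described in section 3.4.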

4. Use Cases

4.1. Immersive Learning and Education

In the realm of education, Apple Vision Pro brings learning to life. Students can explore historical sites, dissect complex organisms, or visualize mathematical concepts in three dimensions, making learning an interactive and engaging experience. It's not just about seeing; it's about experiencing and interacting, which can significantly enhance understanding and retention.

4.2. Professional Design and Visualization

For architects, interior designers, and creatives, the Apple Vision Pro offers a new dimension of visualization. Design professionals can construct and walk through 3D models of their work, making adjustments in real-time and experiencing spaces as they would be in reality. This immersive approach to design not only improves the creative process but also enhances presentation and client communication.

4.3. Enhanced Productivity and Collaboration

In the business sphere, Apple Vision Pro transforms how teams collaborate and share information. Imagine a virtual meeting room where participants, though miles apart, can interact with each other and digital content as if they were in the same physical space. Documents, designs, and data become tangible objects that can be examined and manipulated, fostering a collaborative and productive environment.

4.4. Next-Level Gaming and Entertainment

Gaming and entertainment take a quantum leap with Apple Vision Pro. Gamers can step into the worlds they play in, experiencing immersive environments and interacting with the game in a truly physical way. For movie enthusiasts, it's about being in the movie, not just watching it. The immersive experience makes every scene more intense, every storyline more gripping.

4.5. Healthcare and Therapeutic Use

The implications of Apple Vision Pro in healthcare are profound. From surgical training with lifelike simulations to therapeutic applications like guided meditation or exposure therapy, the device has the potential to revolutionize both treatment and education in the healthcare sector.

4.6. Remote Assistance and Training

Apple Vision Pro can also play a crucial role in remote assistance and training. Technical experts can guide on-site workers through complex procedures, overlaying digital instructions onto real-world tasks. This not only improves the efficiency of the task at hand but also significantly shortens the learning curve for trainees.

The Apple Vision Pro, with its versatile capabilities, is not just a new product; it's a new way of interacting with the world. Whether it's for learning, creating, collaborating, or entertaining, the device opens up new frontiers, redefining what's possible in each of these domains. As the technology evolves, so too will the use cases, paving the way for a future where the boundaries between digital and physical continue to blur.