Service discovery should be automated and dynamic: when an application container gets reallocated, or when a new application starts, DNS resolution should pick up the change immediately. Discovery should be redundant and resilient, meaning each node provides DNS service and can discover all currently running services. This simplifies configuration and access: you replace fixed IP addresses with domain names. You don't need to remember which service runs on which machine, and you don't need to know the node's current IP address. Service discovery is essential for dynamically managing applications in your cloud.

In this article, I will show you how to set up DNS resolution within my infrastructure at home. You will learn how to provide DNS service on each node, how to use Consul for dynamic service registration and discovery, and how to extend DNS services to Docker containers so that they can use domain names to contact each other.

Service discovery fulfills the last requirement of my infrastructure@home project:

- SD1: Applications can be accessed with a domain name independent of where they are running

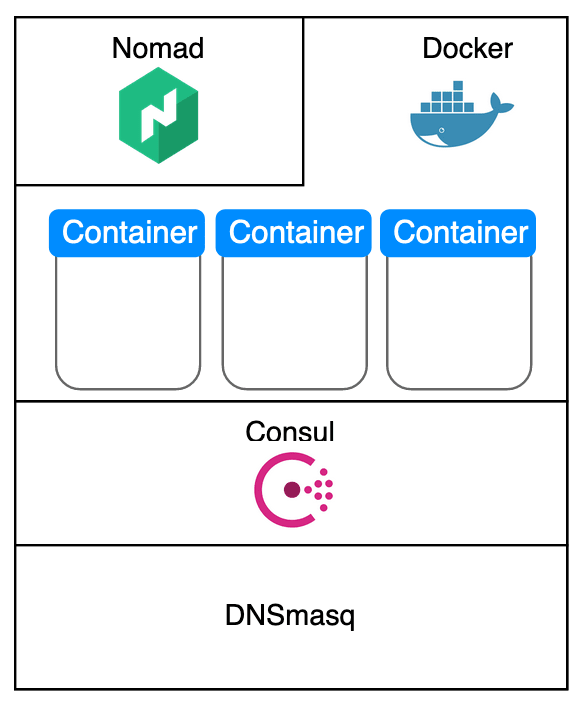

We will have the following architecture:

This article originally appeared on my blog.

Components of Cloud-Wide DNS Resolution

To achieve cloud-wide, redundant and resilient DNS resolution, we need to consider several components. Let's walk through the steps involved in creating, registering, accessing and stopping a service. The example is an actual use case from my project: collecting node metrics with Prometheus and rendering them with Grafana.

- The job scheduler executes a job definition that declares the Prometheus Docker container

- The Docker container is successfully created and reachable via its HTTP endpoint

- The job scheduler informs the service registry about the Prometheus container: its IP address, port and service name

- The job scheduler creates the Grafana Docker container

- The Grafana container accesses the Prometheus data via `prometheus.infra.local`

- Shortly after, as a user, I query `grafana.infra.local` and see my dashboards

- After some time, a new job is scheduled, and the Prometheus container will be deployed to another node

- The job scheduler destroys the container

- The service registry is notified about the decommissioned Prometheus container; for a short time, DNS resolution for `prometheus.infra.local` will not work

- The new Prometheus Docker container is running, the service registry is informed, and DNS resolution works again
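To make the lifecycle above concrete, here is a toy model in Python. It is a minimal sketch, not how Consul or Nomad work internally: a dictionary stands in for the service registry, and the names `ServiceRegistry`, `register`, `deregister` and `resolve` are hypothetical. Port 9090 and the second node's address 192.168.2.201 are assumed values for illustration.

```python
"""Toy model of the register -> resolve -> deregister -> re-register cycle.

Hypothetical: a dict stands in for the service registry; this is not Consul's API.
"""
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Endpoint:
    ip: str
    port: int


class ServiceRegistry:
    """Minimal stand-in for a service registry."""

    def __init__(self) -> None:
        self._services: Dict[str, Endpoint] = {}

    def register(self, name: str, ip: str, port: int) -> None:
        # The job scheduler reports a running container.
        self._services[name] = Endpoint(ip, port)

    def deregister(self, name: str) -> None:
        # The job scheduler reports a destroyed container.
        self._services.pop(name, None)

    def resolve(self, name: str) -> Optional[Endpoint]:
        # DNS-style lookup: None models a failed resolution.
        return self._services.get(name)


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("prometheus", "192.168.2.203", 9090)
    print(registry.resolve("prometheus"))  # Endpoint(ip='192.168.2.203', port=9090)
    registry.deregister("prometheus")  # old container destroyed
    print(registry.resolve("prometheus"))  # None: the short resolution gap
    registry.register("prometheus", "192.168.2.201", 9090)  # moved to another node
    print(registry.resolve("prometheus"))  # Endpoint(ip='192.168.2.201', port=9090)
```

The `None` in the middle corresponds to the short window in which the Prometheus domain name does not resolve.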

The single and dynamic source of truth is the service registry. It provides DNS resolution, which needs to be available both inside Docker containers and to me as the user. It needs to run redundantly on each node, and any change must be communicated to all other nodes. Consul provides these features.

The job scheduler is responsible for creating, stopping and moving containers. It also informs the service registry about changes. This is provided by Nomad. Consul and Nomad are integrated by including a service declaration in the job specification.

Now, how can a Docker container access this DNS resolution?

DNS Resolution in Docker

The Docker daemon provides DNS resolution to all containers. By default, it uses the host's DNS configuration. To add DNS servers, the options are:

- A) Add DNS servers to the host's DNS configuration

- B) Pass additional DNS servers to the `docker run` command, like `docker run --dns=192.168.1.1 ...`

- C) Define additional DNS servers in the Docker daemon config file `/etc/docker/daemon.json`

I like to keep things simple, so option A) is what we will try.
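For comparison, option C) amounts to a `dns` list in `/etc/docker/daemon.json`. The following Python sketch merges DNS servers into that file while keeping any other settings. The helper names and the example address 192.168.1.1 are assumptions for illustration. The Docker daemon has to be restarted for new DNS settings to take effect (for example `sudo systemctl restart docker` on systemd hosts).

```python
import json
from pathlib import Path
from typing import Dict, List


def merge_dns_servers(config: Dict, servers: List[str]) -> Dict:
    """Return a copy of a daemon.json config with the given DNS servers added."""
    merged = dict(config)
    dns = list(merged.get("dns", []))
    for server in servers:
        if server not in dns:  # avoid duplicates when run repeatedly
            dns.append(server)
    merged["dns"] = dns
    return merged


def update_daemon_json(servers: List[str],
                       path: Path = Path("/etc/docker/daemon.json")) -> None:
    """Read, merge and write back daemon.json; other keys are preserved."""
    text = path.read_text().strip() if path.exists() else ""
    config = json.loads(text) if text else {}
    path.write_text(json.dumps(merge_dns_servers(config, servers), indent=2) + "\n")


if __name__ == "__main__":
    # Hypothetical address of a node that runs a DNS server
    example = merge_dns_servers({"log-driver": "json-file"}, ["192.168.1.1"])
    print(json.dumps(example, indent=2))
```

Merging instead of overwriting matters because `daemon.json` often already holds unrelated settings such as the log driver.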

The Consul documentation points out an important detail: for security reasons, Consul should not bind to localhost:53, the local DNS port. Instead, the local DNS server should be configured to forward queries to Consul's DNS interface. This can be done with DNS resolvers like dnsmasq and BIND, or by manipulating iptables.

In the end, I decided to use dnsmasq. Only one configuration line needs to be added to /etc/dnsmasq.conf; it forwards every query for the `consul` domain to Consul's DNS interface on port 8600:

```
server=/consul/127.0.0.1#8600
```

This one-line entry gives us service and node-name DNS resolution!
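The forwarding rule can be read as a suffix match: queries for `consul` or any name below it go to 127.0.0.1, port 8600, and everything else goes to the regular upstream servers; when several rules match, the more specific domain wins. The Python sketch below models only that decision. It is a simplification of dnsmasq (no caching, no multiple servers per domain), and the default upstream 192.168.1.1 is an assumed address.

```python
from typing import Dict, Tuple

Upstream = Tuple[str, int]


def parse_server_line(line: str) -> Tuple[str, Upstream]:
    """Parse 'server=/consul/127.0.0.1#8600' into ('consul', ('127.0.0.1', 8600))."""
    _, rest = line.split("=", 1)
    _, domain, target = rest.split("/", 2)
    host, _, port = target.partition("#")
    return domain, (host, int(port) if port else 53)  # DNS port 53 if none given


def pick_upstream(qname: str, rules: Dict[str, Upstream], default: Upstream) -> Upstream:
    """Choose the upstream for a query name by longest matching domain suffix."""
    labels = qname.rstrip(".").lower().split(".")
    # Try the longest suffix first so that more specific domains win.
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in rules:
            return rules[suffix]
    return default


if __name__ == "__main__":
    rules = dict([parse_server_line("server=/consul/127.0.0.1#8600")])
    default = ("192.168.1.1", 53)  # hypothetical regular upstream
    print(pick_upstream("prometheus.service.consul", rules, default))
    print(pick_upstream("example.org", rules, default))
```

Matching on whole labels rather than raw string suffixes keeps a name like `notconsul` from being forwarded to Consul.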

It works locally:

```
dig prometheus.service.consul

; <<>> DiG 9.11.5-P4-5.1-Raspbian <<>> prometheus.service.consul
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 34410
;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;prometheus.service.consul. IN A

;; ANSWER SECTION:
prometheus.service.consul. 0 IN A 192.168.2.203

;; Query time: 3 msec
;; SERVER: 127.0.0.1#53(127.0.0.1)
;; WHEN: Sun Mar 15 12:07:35 GMT 2020
;; MSG SIZE rcvd: 70
```

And it works in Docker too.

```
>> docker run busybox ping prometheus.service.consul

PING prometheus.service.consul (192.168.2.203): 56 data bytes
64 bytes from 192.168.2.203: seq=0 ttl=63 time=0.414 ms
64 bytes from 192.168.2.203: seq=1 ttl=63 time=0.600 ms
64 bytes from 192.168.2.203: seq=2 ttl=63 time=0.592 ms
```
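Both lookups above ultimately send a small UDP message to a DNS server. To show what that exchange looks like, here is a minimal Python sketch that builds an A-record query in the RFC 1035 wire format and extracts IPv4 addresses from the answer. It skips much of what dig does (no EDNS, no TCP fallback, no response-code checks), so treat it as an illustration. The function names are hypothetical; by default `query_a` asks Consul directly on its DNS port 8600, and `port=53` goes through dnsmasq instead.

```python
import random
import socket
import struct
from typing import List, Optional


def build_query(qname: str, qtype: int = 1, txid: Optional[int] = None) -> bytes:
    """Build a minimal DNS query message; qtype 1 is an A record."""
    if txid is None:
        txid = random.randint(0, 0xFFFF)
    # Header: ID, flags (0x0100 = recursion desired), QDCOUNT=1, AN/NS/ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte
    labels = qname.rstrip(".").split(".")
    name = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels)
    # QTYPE and QCLASS (1 = IN)
    return header + name + b"\x00" + struct.pack("!HH", qtype, 1)


def _skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = msg[offset]
        if length == 0:
            return offset + 1
        if (length & 0xC0) == 0xC0:
            # Compression pointer: two bytes that end the name
            return offset + 2
        offset += 1 + length


def a_records(response: bytes) -> List[str]:
    """Extract IPv4 addresses from the answer section of a DNS response."""
    _, _, qdcount, ancount, _, _ = struct.unpack("!HHHHHH", response[:12])
    offset = 12
    for _ in range(qdcount):
        offset = _skip_name(response, offset) + 4  # skip QTYPE and QCLASS
    addresses = []
    for _ in range(ancount):
        offset = _skip_name(response, offset)
        rtype, _, _, rdlength = struct.unpack("!HHIH", response[offset:offset + 10])
        offset += 10
        if rtype == 1 and rdlength == 4:
            addresses.append(socket.inet_ntoa(response[offset:offset + 4]))
        offset += rdlength
    return addresses


def query_a(qname: str, server: str = "127.0.0.1", port: int = 8600,
            timeout: float = 2.0) -> List[str]:
    """Send one UDP query and return the A records from the reply."""
    packet = build_query(qname)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(packet, (server, port))
        response, _ = sock.recvfrom(4096)
    if response[:2] != packet[:2]:
        raise ValueError("response does not match the query ID")
    return a_records(response)
```

On a node of the setup above, `query_a("prometheus.service.consul")` should return the same address that dig reported.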

Accessing Services as a User

The last part is to configure a convenient way for users to access our web services. When you are literally sitting next to your cluster, this is no problem: just add the configured local DNS nameservers to your client machine. But there is one challenge: you need to know the port on which the service runs. If it's static, you can add it manually. In a truly dynamic environment, port allocations are dynamic as well. So we need another component that translates domain names to internal IP addresses and ports.

This is the task of an edge router. This router resides between your LAN and the cluster. It will receive all incoming requests, access the local DNS servers and service registry, and forward the traffic to the target address and port.

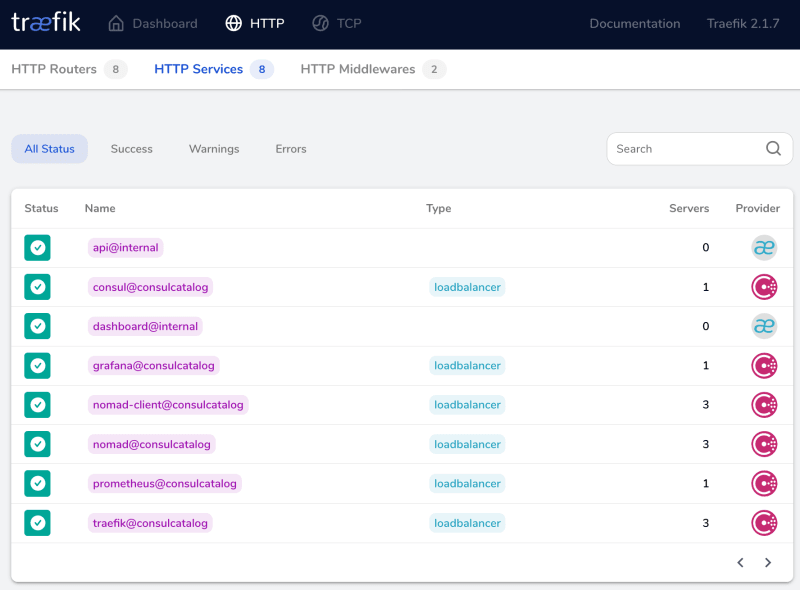
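Conceptually, the edge router's forwarding decision is a lookup from the requested host name to one of the service's current endpoints. The following Python sketch models only that decision and does not proxy any traffic. The class name `EdgeRouter`, the round-robin choice and the example endpoint 192.168.2.202:3000 are assumptions, not Traefik internals.

```python
from itertools import cycle
from typing import Dict, List, Tuple

Endpoint = Tuple[str, int]


class EdgeRouter:
    """Toy model of Host-based routing (hypothetical, not Traefik's code)."""

    def __init__(self, routes: Dict[str, List[Endpoint]]) -> None:
        # One round-robin iterator per host name; hosts without endpoints are dropped
        self._routes = {host.lower(): cycle(eps) for host, eps in routes.items() if eps}

    def backend_for(self, host_header: str) -> str:
        """Map an HTTP Host header to the URL of one backend endpoint."""
        host = host_header.split(":", 1)[0].lower()  # drop an optional :port
        if host not in self._routes:
            raise LookupError(f"no route for host {host!r}")
        ip, port = next(self._routes[host])
        return f"http://{ip}:{port}"


if __name__ == "__main__":
    router = EdgeRouter({"grafana.local": [("192.168.2.202", 3000)]})
    print(router.backend_for("grafana.local"))  # http://192.168.2.202:3000
```

In the real setup, the routing table comes from the Consul catalog, so it follows containers as they move between nodes.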

I'm choosing Traefik for this role. Traefik also provides a dashboard to show its configured routers and services.

I'm running Traefik as a Docker container scheduled with Nomad. For testing purposes, I want this container to run on a well-known node, so the job declares an affinity for `raspi-4-1` (in Nomad, an affinity is a weighted preference, not a hard constraint). Traefik uses Consul as the single and dynamic source of truth about all services. Here is the relevant part of the Nomad job declaration:

```
group "traefik" {
  affinity {
    attribute = "${node.unique.name}"
    value     = "raspi-4-1"
    weight    = 100
  }

  task "traefik" {
    template {
      destination = "local/config.yml"
      data        = <<EOH
providers:
  consulCatalog:
    endpoint:
      address: http://localhost:8500
api:
  insecure: true
  dashboard: true
EOH
    }
  }

  ...
}
```

Traefik loads, and we can see the Consul services exposed in the dashboard.

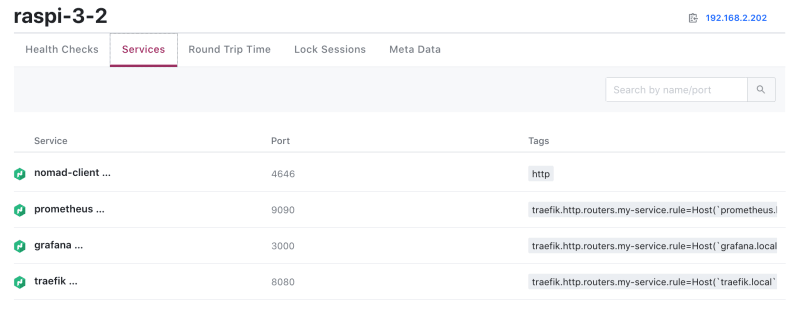

In Nomad, I declare Traefik-specific tags to specify the domain name of each service.

```
service {
  tags = ["traefik.http.routers.grafana.rule=Host(`grafana.local`)"]
}
```
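With many services, these tags are easy to get subtly wrong, for example by using a router name that does not match the host. A small helper can generate and check them. The Python sketch below only handles the simple host-rule form used in this article; the function names are hypothetical and not part of Nomad or Traefik.

```python
import re
from typing import Optional, Tuple

# Matches only the simple host-rule tag form used in this article
_HOST_RULE = re.compile(r"^traefik\.http\.routers\.([A-Za-z0-9_-]+)\.rule=Host\(`([^`]+)`\)$")


def host_rule_tag(router: str, host: str) -> str:
    """Build a Traefik router tag that routes requests for `host` to this service."""
    return f"traefik.http.routers.{router}.rule=Host(`{host}`)"


def parse_host_rule_tag(tag: str) -> Optional[Tuple[str, str]]:
    """Return (router, host) for a simple host-rule tag, else None."""
    match = _HOST_RULE.match(tag)
    return (match.group(1), match.group(2)) if match else None


def service_stanza(router: str, host: str) -> str:
    """Render a Nomad service stanza carrying the tag (hypothetical helper)."""
    return 'service {\n  tags = ["%s"]\n}' % host_rule_tag(router, host)


if __name__ == "__main__":
    print(service_stanza("grafana", "grafana.local"))
```

Generating the stanza from one router name and one host keeps the two consistent across all job files.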

Now, each service has a well-defined name.

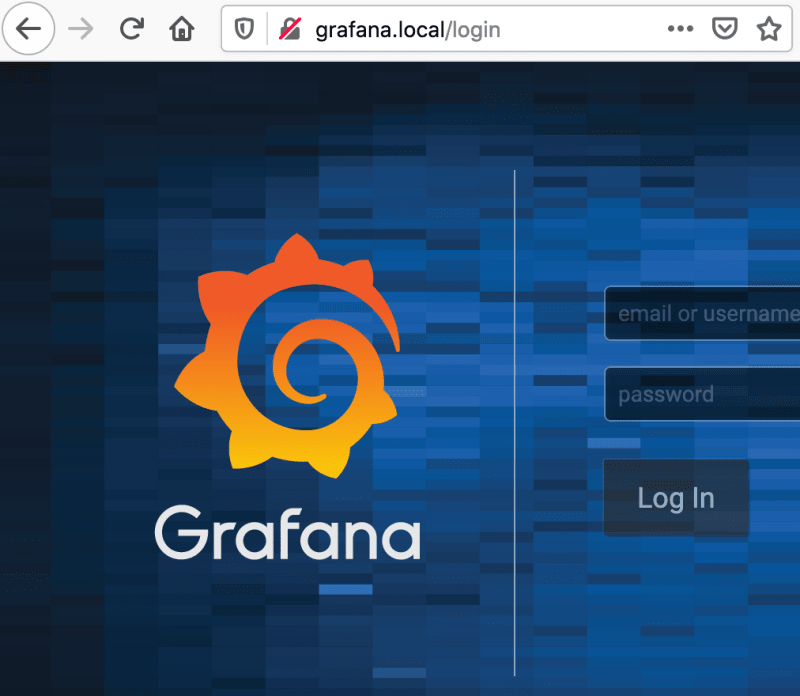

After adding the IP address of the node on which Traefik runs, I can finally access my services with only domain names in my browser:

Mission accomplished!

Conclusion

Service discovery is a tough challenge. It should be easy for any service, and for you as the user, to just use a domain name like prometheus.infra.local. To achieve this, you need to consider the dynamics: containers can start on any node, and their endpoints have dynamic IP addresses. Ideally, you also want service discovery to be redundant and resilient: if one node goes down, all remaining services can still reach each other.

I showed how to achieve this with Consul, Traefik and dnsmasq:

- Consul is the service registry, knowing which services run on which nodes and their dynamic ports

- dnsmasq is the services' DNS resolver, enabling Docker containers to find each other

- Traefik is the users' edge router, mapping domain names to IP addresses and ports