This is a Plain English Papers summary of a research paper called Unlocking language models' potential through synthetic pretraining. If you like this kind of analysis, you should join AImodels.fyi or follow me on Twitter.

Overview

- Synthetic continued pretraining is a novel approach to improving the performance of language models.

- It involves continuing to train an already pretrained model on synthetic data, using the same language-modeling objective as in pretraining, to further enhance its capabilities.

- The paper explores the benefits and challenges of this technique, providing insights for researchers and practitioners.

Plain English Explanation

Language models are powerful AI systems that can understand and generate human-like text. However, their performance can be limited by the data they are trained on. Synthetic continued pretraining proposes a way to overcome this by continuing to train a pretrained model on synthetic data: text that is artificially generated to mimic real-world language.

The key idea is that exposure to this synthetic data lets the model learn additional patterns and nuances that were not present in its original training data. This can help the model understand and produce more natural-sounding language, improving its performance on a variety of tasks.

The paper explores different approaches to generating the synthetic data, such as using language models themselves to create realistic-looking text. It also examines how this technique can be applied to improve the performance of models trained on translated text, which often lacks the fluency of text written by native speakers.

Overall, synthetic continued pretraining offers a promising way to enhance language models and unlock new capabilities, paving the way for more advanced and human-like AI systems.

Technical Explanation

The paper "Synthetic continued pretraining" investigates the use of synthetic data to further improve pretrained language models. The key idea is to take a model that has already been trained on a large corpus of natural language data (such as books, websites, or dialog) and continue training it, with the same language-modeling objective used in pretraining, on an additional dataset of synthetic text.
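
To make "continued pretraining" concrete, here is a minimal sketch, assuming a toy bigram language model trained with plain gradient descent in pure Python rather than a real neural network. The corpora and all function names (`build_vocab`, `bigram_pairs`, `train`, `avg_loss`) are hypothetical illustrations, not the paper's code. What it shows is that the second stage reuses the same weights and the same next-token objective; only the training data changes.

```python
import math

def build_vocab(corpora):
    """Assign an integer id to every distinct whitespace token across all corpora."""
    tokens = sorted({tok for corpus in corpora for doc in corpus for tok in doc.split()})
    return {tok: i for i, tok in enumerate(tokens)}

def bigram_pairs(corpus, vocab):
    """Return (previous_id, next_id) pairs: the next-token prediction targets."""
    pairs = []
    for doc in corpus:
        ids = [vocab[tok] for tok in doc.split()]
        pairs.extend(zip(ids, ids[1:]))
    return pairs

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def train(logits, pairs, epochs, lr):
    """Minimize next-token cross-entropy with plain SGD, updating `logits` in place."""
    for _ in range(epochs):
        for prev, nxt in pairs:
            probs = softmax(logits[prev])
            # d(-log p(nxt | prev)) / d(logits[prev][j]) = probs[j] - [j == nxt]
            for j, p in enumerate(probs):
                logits[prev][j] -= lr * (p - (1.0 if j == nxt else 0.0))

def avg_loss(logits, pairs):
    """Mean next-token cross-entropy (in nats) over the given pairs."""
    return sum(-math.log(softmax(logits[prev])[nxt]) for prev, nxt in pairs) / len(pairs)

# Hypothetical toy corpora: "original" pretraining text and "synthetic" text.
original = ["the cat sat on the mat", "the dog sat on the rug"]
synthetic = ["the cat chased the dog", "the dog chased the cat"]

vocab = build_vocab([original, synthetic])
logits = [[0.0] * len(vocab) for _ in vocab]  # one row of next-token logits per previous token

train(logits, bigram_pairs(original, vocab), epochs=50, lr=0.5)  # stage 1: "pretraining"
syn_pairs = bigram_pairs(synthetic, vocab)
loss_before = avg_loss(logits, syn_pairs)
train(logits, syn_pairs, epochs=50, lr=0.5)  # stage 2: continued pretraining, same weights and objective
loss_after = avg_loss(logits, syn_pairs)
print(f"loss on synthetic text: {loss_before:.3f} -> {loss_after:.3f}")
```

On this toy data the loss on the synthetic text drops after stage 2. In a real run you would also monitor loss on the original data, because continued training on a narrow corpus can degrade what the model learned earlier (catastrophic forgetting).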

The authors explore different approaches to generating this synthetic data, including using language models themselves to produce realistic-looking text. They find that exposing the pre-trained model to this synthetic data can lead to significant improvements in its performance on a variety of language understanding and generation tasks.
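
The idea of using a language model to produce synthetic training text can be illustrated with a deliberately tiny stand-in: a bigram sampler fitted on a hypothetical seed corpus. This is a sketch under that assumption only; a practical pipeline would prompt a large pretrained model instead, and the function names (`fit_bigram`, `sample_sentence`, `generate_synthetic_corpus`) are illustrative, not the paper's actual generation method.

```python
import random
from collections import defaultdict

def fit_bigram(corpus):
    """Record every observed successor of each token; repeats keep frequent continuations more likely."""
    successors = defaultdict(list)
    for doc in corpus:
        tokens = ["<s>"] + doc.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            successors[prev].append(nxt)
    return successors

def sample_sentence(successors, rng, max_len=20):
    """Generate one sentence by repeatedly sampling a next token until end-of-sentence."""
    out, tok = [], "<s>"
    while len(out) < max_len:
        tok = rng.choice(successors[tok])
        if tok == "</s>":
            break
        out.append(tok)
    return " ".join(out)

def generate_synthetic_corpus(seed_corpus, n, seed=0):
    """Sample `n` synthetic sentences from a bigram model fitted on `seed_corpus`."""
    successors = fit_bigram(seed_corpus)
    rng = random.Random(seed)  # fixed seed makes the output reproducible
    return [sample_sentence(successors, rng) for _ in range(n)]

seed_corpus = ["the cat sat on the mat", "the dog sat on the rug"]
synthetic = generate_synthetic_corpus(seed_corpus, n=5)
for line in synthetic:
    print(line)
```

Because the sampler recombines observed word pairs, it can produce sentences that never appeared in the seed corpus (for example "the cat sat on the rug"), and it can equally produce degenerate ones, which is why the quality of the generator matters.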

One interesting application explored in the paper is using synthetic continued pretraining to enhance models trained on translated text. Since translated text often lacks the natural fluency of text written by native speakers, the authors show that continued pretraining on synthetic data can help the model better capture the nuances and patterns of natural language.

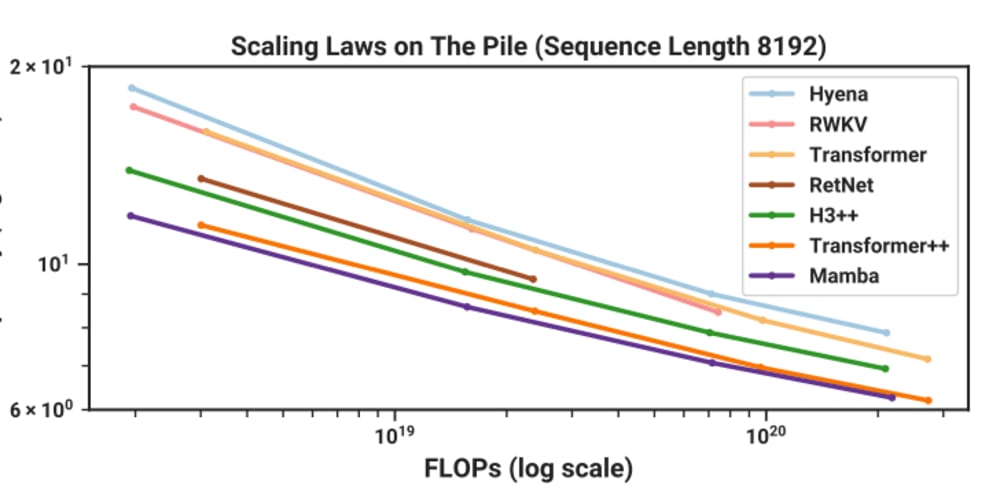

The paper provides a detailed experimental evaluation, comparing the performance of models trained with and without synthetic continued pretraining on benchmark datasets. The results demonstrate the effectiveness of this technique across different model architectures and task domains.
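
The summary does not say which metrics the paper reports. One common way to compare a model with and without extra training data is perplexity on held-out text: the exponential of the average per-token negative log-likelihood, where lower is better. The sketch below assumes an add-one-smoothed bigram count model and hypothetical toy data; for a count model, continuing training on synthetic text is equivalent to counting over the original and synthetic corpora together.

```python
import math
from collections import Counter

def train_bigram_counts(corpus):
    """Count (context, next-token) pairs and how often each context occurs."""
    contexts, bigrams = Counter(), Counter()
    for doc in corpus:
        toks = ["<s>"] + doc.split()
        contexts.update(toks[:-1])
        bigrams.update(zip(toks, toks[1:]))
    return contexts, bigrams

def perplexity(model, vocab_size, heldout):
    """exp(mean negative log-likelihood) of held-out text under an add-one-smoothed bigram model."""
    contexts, bigrams = model
    nll, n = 0.0, 0
    for doc in heldout:
        toks = ["<s>"] + doc.split()
        for prev, nxt in zip(toks, toks[1:]):
            # Add-one (Laplace) smoothing gives unseen bigrams a small nonzero probability.
            p = (bigrams[(prev, nxt)] + 1) / (contexts[prev] + vocab_size)
            nll -= math.log(p)
            n += 1
    return math.exp(nll / n)

# Hypothetical toy data, for illustration only.
original = ["the cat sat on the mat"]
synthetic = ["the dog sat on the rug", "the dog chased the cat"]
heldout = ["the dog sat on the mat"]
vocab_size = len({t for doc in original + synthetic + heldout for t in doc.split()})

ppl_without = perplexity(train_bigram_counts(original), vocab_size, heldout)
ppl_with = perplexity(train_bigram_counts(original + synthetic), vocab_size, heldout)
print(f"held-out perplexity without synthetic data: {ppl_without:.2f}, with: {ppl_with:.2f}")
```

On this toy example the model that also saw the synthetic sentences assigns higher probability to the held-out sentence, so its perplexity is lower; with real models the comparison is the same in spirit but uses the model's own token probabilities.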

Critical Analysis

The paper presents a compelling approach to improving language models, but it also acknowledges several potential limitations and areas for further research.

One key challenge is generating high-quality synthetic data that truly captures the complexity and subtlety of natural language. While the authors explore various generation techniques, they note that further advances in text generation may be necessary to realize the full potential of this approach.
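
The summary doesn't describe how (or whether) the paper filters its synthetic data. As a hedged illustration of one generic quality heuristic, the sketch below drops generated samples that are too short or that are near-duplicates of an already-kept sample, measured by Jaccard similarity of word trigrams. The thresholds and the function name `filter_synthetic` are hypothetical choices, not values from the paper.

```python
def ngrams(text, n=3):
    """Set of word n-grams in `text`."""
    toks = text.split()
    return {tuple(toks[i:i + n]) for i in range(len(toks) - n + 1)}

def jaccard(a, b):
    """Size of the intersection over size of the union of two sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def filter_synthetic(samples, min_tokens=4, max_similarity=0.5, n=3):
    """Keep samples that are long enough and not near-duplicates of an earlier kept sample."""
    kept, kept_grams = [], []
    for s in samples:
        if len(s.split()) < min_tokens:
            continue  # too short to carry much content
        grams = ngrams(s, n)
        if any(jaccard(grams, g) > max_similarity for g in kept_grams):
            continue  # near-duplicate of something already kept
        kept.append(s)
        kept_grams.append(grams)
    return kept

samples = [
    "the cat sat on the mat",
    "the cat sat on the mat",
    "the cat sat on the rug",
    "a dog",
    "the dog chased the cat around the garden",
]
filtered = filter_synthetic(samples)
print(filtered)  # → ['the cat sat on the mat', 'the dog chased the cat around the garden']
```

The pairwise comparison loop is quadratic in the number of kept samples, so it only suits small batches; larger pipelines typically use approximate methods such as MinHash for near-duplicate detection.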

Additionally, the paper does not delve deeply into the potential biases or unintended consequences of training on synthetic data. Researchers have raised concerns about the risks of over-relying on synthetic data, such as amplifying existing biases or introducing new ones.

Further investigation is also needed to understand the optimal strategies for incorporating synthetic data into the training process, as well as the long-term effects on model robustness and generalization.
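
One strategy such investigation might compare is how much original data to mix back in alongside the synthetic text, a form of replay that is often used to limit forgetting. The sketch below, with a hypothetical `mixed_batches` helper and toy example pools, draws each training example from the synthetic pool with probability `synthetic_fraction` and from the original pool otherwise; the ratio is an assumed tuning knob, not a setting taken from the paper.

```python
import random

def mixed_batches(real, synthetic, synthetic_fraction, batch_size, num_batches, seed=0):
    """Yield batches whose examples come from `synthetic` with probability `synthetic_fraction`, else from `real`."""
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    for _ in range(num_batches):
        batch = []
        for _ in range(batch_size):
            # rng.random() is in [0, 1), so a fraction of 0 never picks synthetic and 1 always does.
            pool = synthetic if rng.random() < synthetic_fraction else real
            batch.append(rng.choice(pool))  # sample with replacement from the chosen pool
        yield batch

real = [f"real-{i}" for i in range(100)]
synthetic = [f"syn-{i}" for i in range(100)]
batches = list(mixed_batches(real, synthetic, synthetic_fraction=0.75, batch_size=32, num_batches=100))
observed = sum(x.startswith("syn-") for b in batches for x in b) / (32 * 100)
print(f"observed synthetic fraction: {observed:.3f}")
```

Sampling per example rather than fixing an exact count per batch keeps the code simple; the observed fraction fluctuates slightly around the target, which is usually acceptable over many batches.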

Conclusion

The "Synthetic continued pretraining" paper presents a promising approach to enhancing language models by continuing to pretrain them on synthetic data. This technique could unlock new capabilities and improve the fluency and naturalness of AI-generated text, with applications across a wide range of domains.

While the paper provides a solid technical foundation and experimental results, it also highlights the need for further research into the challenges and potential risks of this approach. As language model development continues to evolve, the insights and techniques presented in this work are a valuable contribution to ongoing efforts to build more advanced and human-like AI systems.
