This is a Plain English Papers summary of a research paper called KANs vs MLPs: A Fair Comparison of Capabilities and Performance. If you like this kind of analysis, you should join AImodels.fyi or follow me on Twitter.

Overview

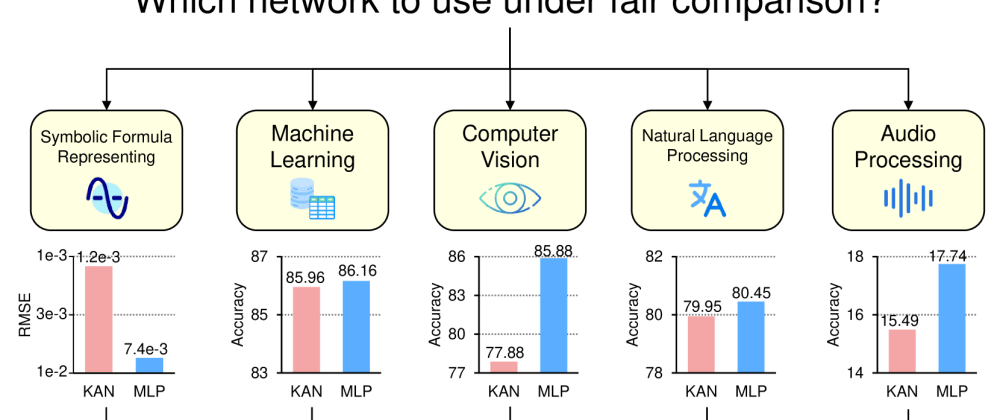

- Compares the performance of Kolmogorov-Arnold Networks (KANs) and Multi-Layer Perceptrons (MLPs) on various tasks

- Aims to provide a fair comparison by controlling for model capacity and other factors

- Examines the parameter counts and the training and test performance of the two models

Plain English Explanation

The paper compares two types of neural network models: Kolmogorov-Arnold Networks (KANs) and Multi-Layer Perceptrons (MLPs). KANs are a newer type of model that is claimed to have certain advantages over traditional MLPs.

To make a fair comparison, the researchers ensured that the KAN and MLP models had the same number of total parameters. This allowed them to isolate the impact of the model architecture, rather than differences in model size. They then trained and evaluated the models on various tasks to see how they performed.

The key finding is that KANs and MLPs generally performed similarly in training and test accuracy. The paper concludes that the two models are more comparable than previous research had suggested, and that the choice between them may come down to practical considerations like ease of training or interpretability, rather than a large performance gap.

Technical Explanation

The paper compares the performance of Kolmogorov-Arnold Networks (KANs) and Multi-Layer Perceptrons (MLPs) on a variety of tasks. KANs are a recently proposed neural network architecture that is claimed to have certain advantages over traditional MLPs.
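
For readers unfamiliar with the two architectures, the contrast can be sketched as follows. This is the standard textbook formulation rather than notation taken from the paper; the symbols $W$, $b$, $\sigma$, and $\phi_{j,i}$ are introduced here for illustration only. An MLP layer applies a fixed nonlinearity after a learned linear map, while a KAN layer sums learned univariate functions placed on the edges:

$$
\text{MLP layer: } y_j = \sigma\Big(\sum_{i=1}^{n} W_{j,i}\, x_i + b_j\Big),
\qquad
\text{KAN layer: } y_j = \sum_{i=1}^{n} \phi_{j,i}(x_i)
$$

In common KAN implementations each $\phi_{j,i}$ is parameterized as a spline with several coefficients, which is why a KAN layer of a given width typically carries more parameters than an MLP layer of the same width.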

To ensure a fair comparison, the researchers carefully controlled the number of parameters in the KAN and MLP models. They did this by adjusting the depth and width of the networks to match the total parameter count. This allowed them to isolate the impact of the model architecture, rather than differences in model capacity.
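
The parameter-matching idea can be illustrated with a minimal sketch. The MLP count (weights plus biases) is exact for a plain fully connected network. The KAN count is a simplifying assumption made for this example: one spline per edge with `grid_size + spline_order` coefficients and no other terms. The paper's exact counting formula may differ, and the function names here are hypothetical.

```python
def mlp_param_count(widths):
    """Weights plus biases of a fully connected net with the given layer widths."""
    return sum(d_in * d_out + d_out for d_in, d_out in zip(widths, widths[1:]))


def kan_param_count(widths, grid_size=5, spline_order=3):
    """Assumed KAN count: one spline per edge, each with
    (grid_size + spline_order) coefficients. Real implementations
    may add extra per-edge or per-node terms."""
    per_edge = grid_size + spline_order
    return sum(d_in * d_out * per_edge for d_in, d_out in zip(widths, widths[1:]))


def match_mlp_width(target, d_in, d_out, hidden_layers=1, max_width=4096):
    """Hidden width whose MLP parameter count is closest to `target`."""
    def gap(width):
        widths = [d_in] + [width] * hidden_layers + [d_out]
        return abs(mlp_param_count(widths) - target)
    return min(range(1, max_width + 1), key=gap)


# Example: size an MLP to match a small KAN with layer widths 4 -> 8 -> 2.
kan_budget = kan_param_count([4, 8, 2])
mlp_width = match_mlp_width(kan_budget, d_in=4, d_out=2)
print(kan_budget, mlp_width, mlp_param_count([4, mlp_width, 2]))
```

Under these assumptions the MLP needs a much wider hidden layer than the KAN to reach the same budget, which is the kind of adjustment to depth and width described above.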

The paper then evaluates the training and test performance of the KAN and MLP models on several datasets and tasks, including tabular data, computer vision, and time series forecasting. The results show that the two model types generally perform quite similarly, with no large gaps in accuracy.

The authors conclude that KANs and MLPs are more comparable than prior research had suggested. The choice between the two may come down to practical considerations like ease of training or interpretability, rather than clear performance advantages of one over the other.

Critical Analysis

The paper provides a thorough and fair comparison of KANs and MLPs. By carefully controlling for model capacity, the authors are able to isolate the impact of the architectural differences between the two model types.

However, the paper does not explore the potential computational or training efficiency advantages of KANs, which are often cited as a key benefit of the architecture. The authors focus only on final task performance and do not investigate aspects like training speed or inference latency.

Additionally, the paper only examines a limited set of tasks and datasets. It would be valuable to see if the findings hold true across a wider range of problem domains and data types.

Lastly, the paper does not delve into the underlying reasons why KANs and MLPs may perform similarly. Investigating the representational capacities and learning dynamics of the two architectures could provide deeper insights.

Overall, the paper makes a valuable contribution by providing a comprehensive and rigorous comparison of KANs and MLPs. But there are still opportunities for further research to fully understand the strengths, weaknesses, and trade-offs between these two neural network models.

Conclusion

This paper presents a detailed comparison of Kolmogorov-Arnold Networks (KANs) and Multi-Layer Perceptrons (MLPs), two prominent neural network architectures. By carefully controlling for model capacity, the researchers were able to isolate the impact of the architectural differences between the two models.

The key finding is that KANs and MLPs generally perform quite similarly in terms of training and test accuracy, contrary to some previous research. This suggests that the choice between the two models may come down to practical considerations like ease of training or interpretability, rather than clear performance advantages.

The paper makes an important contribution by providing a fair and comprehensive comparison of these two neural network models. While further research is needed to fully understand their strengths and weaknesses, this work helps to inform the ongoing debate around the merits of KANs versus traditional MLPs.
