This blog post was written for Twilio and originally published on the Twilio blog.

OpenAI, the creator of ChatGPT, offers a variety of machine learning models with different capabilities and price points. Its API lets developers add features like text completion, code completion, text or image generation, speech-to-text, and more to their applications.

ChatGPT's model is powerful (this Twilio tutorial went over how to Build a Serverless ChatGPT SMS Chatbot with the OpenAI API), but still limited. This tutorial will go over how to fine-tune an existing model from OpenAI with your own data so you can get more out of it.

What is Fine-tuning?

OpenAI's models have been trained on a large amount of text from the web, but their training data is still finite and not as current as it could be, so they still lack information on some specific topics.

Transfer learning is a machine learning method where knowledge gained from solving one problem is applied to a new but related problem. A model developed for one task is reused as the starting point for a model on a second task. For example, a model trained to recognize pizza could be adapted to recognize calzones.

Fine-tuning is a form of transfer learning: rather than training a model from scratch, you continue training an existing ML model on your own examples so it adapts to your task.

Why Fine-tune?

Fine-tuning helps you achieve better results on a wide range of tasks. Once a model is fine-tuned, you no longer need to include examples in every prompt, which saves on costs (you send fewer tokens per request) and enables lower-latency requests.

Assuming the original task the model was trained for is similar to the new task, fine-tuning a model that has already been designed and trained allows developers to take advantage of what the model has already learned without having to develop it from scratch.

Prerequisites

- OpenAI Account - create an OpenAI Account here

- Node.js installed - download Node.js here

After making an OpenAI account, you'll need an API Key. You can get an OpenAI API Key here by clicking on + Create new secret key.

Save that API key for later.

Make a New Node.js project

To get started, create a new Node.js project in an empty directory:

```shell
mkdir finetune-model
cd finetune-model
npm init -y
```

Get started with OpenAI

Make a .env file in your root directory and add the following line:

```
OPENAI_API_KEY=YOUR-OPENAI-API-KEY
```

Replace YOUR-OPENAI-API-KEY with the OpenAI API key you saved earlier. Your code will read it from process.env.OPENAI_API_KEY.

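Next, install the openai and dotenv npm packages. Node.js doesn't read .env files on its own, so dotenv loads your key into process.env. You don't need to install fs, because it's built into Node.js. The code in this tutorial uses version 3 of the openai package (version 4 replaced Configuration and OpenAIApi with a different client), so pin that version:

```shell
npm install openai@3 dotenv
```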
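If the key isn't loaded, the first API call will likely fail with an authentication error that can be hard to trace back to the .env file. A small fail-fast check could help. The sketch below assumes the key lives in OPENAI_API_KEY; requireEnv is a hypothetical helper, not part of the openai or dotenv packages.

```javascript
// Hypothetical helper (not part of openai or dotenv): return a required
// environment variable, or throw a readable error if it is missing, blank,
// or still set to the placeholder from the .env example.
function requireEnv(name, placeholder = "YOUR-OPENAI-API-KEY") {
  const value = process.env[name];
  if (!value || value.trim() === "" || value === placeholder) {
    throw new Error(
      `${name} is not set. Add it to .env and call require("dotenv").config() first.`
    );
  }
  return value;
}

// Usage at the top of finetune.js:
// require("dotenv").config();
// const apiKey = requireEnv("OPENAI_API_KEY");
```

You would call it once, right after loading dotenv, and pass the result as apiKey when creating the client.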

Now it's time to prepare your data!

Prepare Custom Data

Training data is how GPT-3 learns what you want it to say.

Your data should be in a JSONL document, where each line is a prompt-completion pair corresponding to a training sample.

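Create a file called newdata.jsonl and add prompt-completion pairs, one JSON object per line, that look something like this. Each prompt ends with the same separator (->) and each completion ends with a newline, so the model learns where a prompt stops and where its answer should end: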

```
{"prompt": "<prompt text> ->", "completion": "<ideal generated text>\n"}
{"prompt": "<prompt text> ->", "completion": "<ideal generated text>\n"}
{"prompt": "<prompt text> ->", "completion": "<ideal generated text>\n"}
```
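Writing these lines by hand gets tedious past a few dozen examples. As a rough sketch, a few lines of Node.js can generate them from plain question/answer pairs. toJsonl and the question/answer field names are hypothetical, and the separator and trailing newline simply mirror the sample lines above.

```javascript
// Hypothetical helper: turn question/answer pairs into prompt-completion
// lines. The separator and trailing newline mirror the sample above.
const SEPARATOR = " ->";

function toJsonl(pairs) {
  return pairs
    .map(({ question, answer }) =>
      // JSON.stringify escapes quotes and writes the newline as \n
      JSON.stringify({
        prompt: `${question.trim()}${SEPARATOR}`,
        completion: `${answer.trim()}\n`,
      }) + "\n"
    )
    .join("");
}

// Made-up example pairs; replace them with your own data.
const pairs = [
  { question: "What is my favorite color", answer: "My favorite color is green." },
  { question: "Where did I grow up", answer: "I grew up by the ocean." },
];
console.log(toJsonl(pairs));
// To save it: require("fs").writeFileSync("newdata.jsonl", toJsonl(pairs));
```

Serializing each line with JSON.stringify, rather than concatenating strings, avoids broken lines when an answer contains quotes.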

The data I used includes fun facts about me and can be found here on GitHub.

OpenAI recommends having at least a couple hundred examples and has found that each doubling of the dataset size leads to a linear increase in model quality. For this tutorial, include at least thirty prompt-completion pairs (the more, the better). That's not enough for a production-ready model, but it's enough to see fine-tuning in action.

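Save your JSONL file. Then, on the command line, run OpenAI's CLI data preparation tool, which checks your file and suggests fixes (it can also convert CSV, TSV, XLSX, or JSON files to JSONL). The CLI ships with OpenAI's Python library (pip install openai), not with the npm package. If you accept its suggestions, it writes a corrected copy of your data; make sure the file you upload later is that corrected copy.

```shell
openai tools fine_tunes.prepare_data -f newdata.jsonl
```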
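The CLI needs Python. If you'd also like a quick check from Node.js, the sketch below catches a few common mistakes: lines that aren't valid JSON, missing fields, prompts without the -> separator, completions without the trailing newline, and too few examples. checkJsonl is a hypothetical helper that only mirrors this tutorial's conventions; it is not a port of prepare_data and won't catch everything that tool does.

```javascript
// Hypothetical local check that mirrors this tutorial's conventions
// (" ->" ending each prompt, "\n" ending each completion). It is not a
// port of prepare_data and catches far fewer problems.
function checkJsonl(text, { separator = " ->", ending = "\n", minExamples = 30 } = {}) {
  const problems = [];
  let count = 0;
  text.split(/\r?\n/).forEach((line, i) => {
    if (line.trim() === "") return; // skip blank lines
    count++;
    const where = `line ${i + 1}`;
    let row;
    try {
      row = JSON.parse(line);
    } catch {
      problems.push(`${where}: not valid JSON`);
      return;
    }
    if (!row || typeof row.prompt !== "string" || typeof row.completion !== "string") {
      problems.push(`${where}: needs string "prompt" and "completion" fields`);
      return;
    }
    if (!row.prompt.endsWith(separator)) {
      problems.push(`${where}: prompt does not end with ${JSON.stringify(separator)}`);
    }
    if (!row.completion.endsWith(ending)) {
      problems.push(`${where}: completion does not end with ${JSON.stringify(ending)}`);
    }
  });
  if (count < minExamples) {
    problems.push(`only ${count} examples; aim for at least ${minExamples}`);
  }
  return { count, problems };
}

// Usage:
// const fs = require("fs");
// const { count, problems } = checkJsonl(fs.readFileSync("newdata.jsonl", "utf8"));
// console.log(`${count} examples`, problems);
```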

With your prepared data, it's time to write some code to fine-tune an existing OpenAI machine learning model.

Fine-tune an OpenAI Model

In this tutorial, you'll use davinci, but OpenAI currently lets you fine-tune any of its base models: davinci, curie, babbage, and ada. These are the original GPT-3 models, without the instruction-following training that later models such as text-davinci-003 received.

In a file called finetune.js, add the following setup code at the top; every step below uses it:

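```javascript
// Load OPENAI_API_KEY from .env into process.env (requires: npm install dotenv)
require("dotenv").config();

// This code uses the v3 openai package (npm install openai@3)
const { Configuration, OpenAIApi } = require("openai");
const fs = require("fs");

const configuration = new Configuration({
  apiKey: process.env.OPENAI_API_KEY,
});
const openai = new OpenAIApi(configuration);
```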

Beneath that, add your first function, which uploads the JSONL file to OpenAI so you can fine-tune on it in the next step:

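```javascript
// Upload the training data; OpenAI returns a file ID for the fine-tune step
async function uploadFile() {
  try {
    const f = await openai.createFile(
      fs.createReadStream("newdata.jsonl"),
      "fine-tune"
    );
    console.log(`File ID ${f.data.id}`);
    return f.data.id;
  } catch (err) {
    // API errors carry their details in err.response.data
    console.log("err uploadfile: ", err.response ? err.response.data : err);
  }
}

uploadFile();
```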

Run the file with node finetune.js, note the file ID it prints (you'll need it in the next step), and then comment out the uploadFile() call so the file isn't uploaded again.
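Commenting calls in and out works, but it's easy to rerun a step by accident (for example, uploading the same file twice). One alternative, sketched below under the assumption that you're happy to pass the step name on the command line, is a tiny dispatcher. runStep and the step names upload, create, and status are hypothetical.

```javascript
// Hypothetical dispatcher: pick a step by name instead of commenting calls
// in and out. steps maps step names to (possibly async) functions.
async function runStep(argv, steps) {
  const [name, ...args] = argv;
  // hasOwnProperty avoids matching inherited names like "toString"
  if (!Object.prototype.hasOwnProperty.call(steps, name)) {
    const known = Object.keys(steps).join(", ");
    throw new Error(`Unknown step "${name}". Use one of: ${known}`);
  }
  return steps[name](...args);
}

// In finetune.js (makeFineTune would need a fileId parameter for "create"):
// runStep(process.argv.slice(2), {
//   upload: uploadFile,
//   create: (fileId) => makeFineTune(fileId),
//   status: getFineTunedModelName,
// }).catch((err) => console.error(err.message));
//
// Then: node finetune.js upload
//       node finetune.js create YOUR-FILE-ID
//       node finetune.js status
```

Passing the file ID as an argument also means you no longer edit the source between steps.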

Beneath the uploadFile() function, add the following function:

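```javascript
// Start a fine-tuning job on the uploaded file
async function makeFineTune() {
  try {
    const ft = await openai.createFineTune({
      training_file: "YOUR-FILE-ID",
      model: "davinci",
    });
    console.log(ft.data);
  } catch (err) {
    // Not every error has an HTTP response (e.g. network failures)
    console.log("err makefinetune: ", err.response ? err.response.data.error : err);
  }
}

makeFineTune();
```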

Replace YOUR-FILE-ID with the file ID from the previous step, and rerun finetune.js to run the new function. After running it, comment out the makeFineTune() function call.

Fine-tuning jobs take a while to process, from a few minutes to longer if OpenAI's queue is busy. You can check in on the job's status, and get the fine-tuned model's name once it's ready, by calling the listFineTunes API method as shown below:

```javascript
// List your fine-tuning jobs with their status and model name
async function getFineTunedModelName() {
  try {
    const res = await openai.listFineTunes();
    console.table(res.data.data, ["id", "status", "fine_tuned_model"]);
  } catch (err) {
    console.log("err getmod: ", err);
  }
}

getFineTunedModelName();
```

While status is pending or running, fine_tuned_model is null and the model isn't ready to use. Once status is succeeded and fine_tuned_model shows a name like davinci:ft-personal-2023-05-01-22-05-28, comment out getFineTunedModelName(), copy the model name (it begins with davinci), and you're ready to use the model!
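If you'd rather not rerun the script by hand, these checks can be automated. The sketch below assumes the v3 SDK's listFineTunes() and retrieveFineTune() responses contain job objects with id, status, fine_tuned_model, and created_at fields; pickFineTunedModel and waitForFineTune are hypothetical helpers, and the one-minute polling interval is an arbitrary choice.

```javascript
// Hypothetical helpers for checking fine-tune status.

// Given the array that listFineTunes() returns in res.data.data, return the
// newest succeeded job's model name, or null if none has finished yet.
function pickFineTunedModel(fineTunes) {
  const done = fineTunes
    .filter((ft) => ft.status === "succeeded" && ft.fine_tuned_model)
    .sort((a, b) => b.created_at - a.created_at);
  return done.length > 0 ? done[0].fine_tuned_model : null;
}

// Poll one job until it finishes. fetchJob should resolve to the job object,
// e.g. async () => (await openai.retrieveFineTune("YOUR-FINE-TUNE-ID")).data
async function waitForFineTune(fetchJob, { intervalMs = 60000, maxAttempts = 60 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const job = await fetchJob();
    if (job.status === "succeeded") return job.fine_tuned_model;
    if (job.status === "failed" || job.status === "cancelled") {
      throw new Error(`Fine-tune ${job.id} ended with status ${job.status}`);
    }
    console.log(`Check ${attempt}: status is ${job.status}, waiting...`);
    if (attempt < maxAttempts) {
      await new Promise((resolve) => setTimeout(resolve, intervalMs));
    }
  }
  throw new Error(`Gave up after ${maxAttempts} checks`);
}
```

Here YOUR-FINE-TUNE-ID stands for the id field that makeFineTune() logs. Polling a single job by ID avoids accidentally picking up an older model if you have run several fine-tunes.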

Test your Fine-Tuned OpenAI model

Add the following code to your finetune.js file.

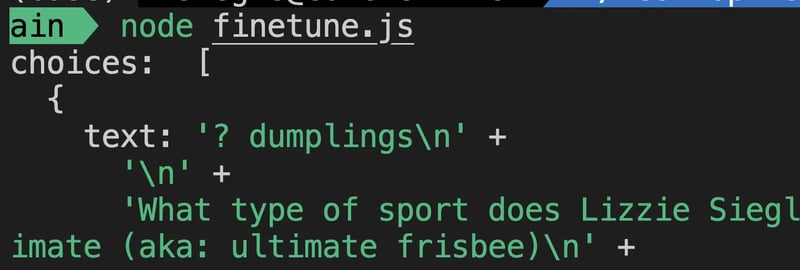

```javascript
async function run() {
  try {
    const comp = await openai.createCompletion({
      model: "YOUR-FINETUNED-MODEL-NAME",
      // Double quotes, because the prompt contains an apostrophe.
      // Replace this prompt according to your data.
      prompt: "What is Lizzie Siegle's favorite food",
      max_tokens: 200,
    });
    if (comp.data) {
      console.log("choices: ", comp.data.choices);
    }
  } catch (err) {
    console.log("err: ", err);
  }
}

run();
```

Replace YOUR-FINETUNED-MODEL-NAME with the model name from the previous step.
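Your training prompts all ended with the -> separator and your completions with a newline, so it usually helps to format requests the same way. Below is a minimal sketch, assuming the same separator as newdata.jsonl; formatPrompt and extractAnswer are hypothetical helpers, while stop is the Completions API's parameter for stop sequences.

```javascript
// Hypothetical helpers that keep requests in the same shape as the
// training data: prompts end with " ->", completions end at a newline.
const SEPARATOR = " ->";

function formatPrompt(question) {
  return question.trim() + SEPARATOR;
}

// Return the first choice's text up to the first newline, trimmed.
function extractAnswer(choices) {
  if (!choices || choices.length === 0) return "";
  return choices[0].text.split("\n")[0].trim();
}

// Usage inside run():
// const comp = await openai.createCompletion({
//   model: "YOUR-FINETUNED-MODEL-NAME",
//   prompt: formatPrompt("What is Lizzie Siegle's favorite food"),
//   max_tokens: 200,
//   stop: ["\n"], // stop generating where the training completions ended
// });
// console.log(extractAnswer(comp.data.choices));
```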

Then run node finetune.js again, and you should see your fine-tuned model generate a completion for the prompt you passed in!

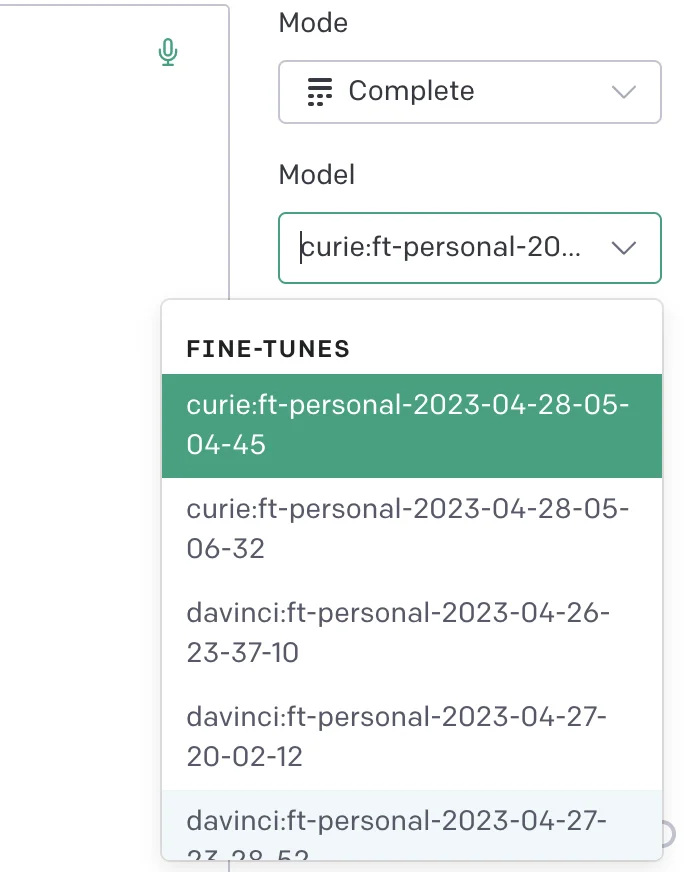

Alternatively, you can test out your fine-tuned model in the OpenAI Playground.

Complete code can be found here on GitHub.

What's Next for Fine-tuning OpenAI models?

That's how to customize one of OpenAI's existing models to fit your own specific use case and data!

For next steps, you can

- add more data to improve completions and make the model production-ready

- try fine-tuning a base model other than davinci

- use other Completions parameters like temperature, frequency_penalty, and presence_penalty on your requests to fine-tuned models

- fine-tune a model in another programming language

- and more!
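As a starting point for the parameter experiments above, here's a sketch of a request builder that checks values against the ranges documented for the Completions API (temperature from 0 to 2, frequency_penalty and presence_penalty from -2.0 to 2.0). buildCompletionRequest is a hypothetical helper, and the default max_tokens of 200 is just the value used earlier in this tutorial.

```javascript
// Hypothetical helper: build a createCompletion() request and reject
// out-of-range sampling parameters before the API does.
const RANGES = {
  temperature: [0, 2],
  frequency_penalty: [-2, 2],
  presence_penalty: [-2, 2],
};

function buildCompletionRequest(model, prompt, options = {}) {
  for (const [name, [min, max]] of Object.entries(RANGES)) {
    const value = options[name];
    // Number.isFinite rejects non-numbers and NaN in one check
    if (value !== undefined && (!Number.isFinite(value) || value < min || value > max)) {
      throw new RangeError(`${name} must be a number between ${min} and ${max}`);
    }
  }
  return { model, prompt, max_tokens: 200, ...options };
}

// Usage with the tutorial's client:
// const comp = await openai.createCompletion(
//   buildCompletionRequest("YOUR-FINETUNED-MODEL-NAME", "What is Lizzie Siegle's favorite food ->", {
//     temperature: 0.2,
//     frequency_penalty: 0.5,
//   })
// );
```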