Chaos Engineering — How to safely inject failure?

Answering questions from my webinar

I recently did a two-hour webinar dedicated to chaos engineering and got a lot of great questions from the audience. In this mini-series of posts, I take some time to answer them.

If you missed the webinar, you can access it on demand from the link below. And if you have questions you would like me to address, feel free to ask me directly on Twitter :-)

Dev Connect - Applying chaos engineering principles for building fault-tolerant applications

These are some of the questions I was asked:

Is it good practice to conduct a test for the whole system at once or segregate tests?

Is it best to do tests in a “copy-of-prod” like environment?

Is there a structured approach to experiment safely?

How do you experiment without risking breaking production?

How can chaos testing be conducted for Lambda (or serverless) services?

If I understand correctly, they all boil down to one question:

“How do you deploy chaos experiments and safely inject failure into your environment?”

Notice the deliberate usage of the word deploy.

That is probably the most important question out there, and the answer is simple:

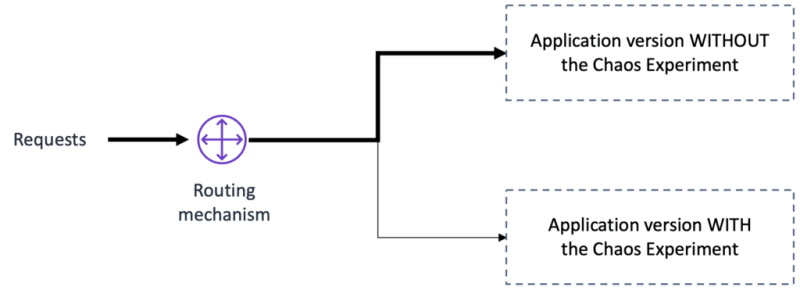

The safest way to inject failure into the environment is by using the canary deployment pattern.

Yes! And it is one of the essential things about experimenting safely:

Chaos engineering experiments should be treated as a deployment pattern.

The difference from a traditional deployment is that once the experiment is over, we bring back the initial environment. In other words, we roll back the experiment.

Let’s rewind a bit: what is a canary (deployment) experiment?

The canary deployment pattern is a technique used to reduce the risk of failure when new versions of applications enter production by creating a new environment with the latest version of the software. You then gradually roll out the change to a small subset of users, slowly making it available to everybody if no deployment errors are detected.

The canary deployment pattern is one of the basic designs of immutable infrastructure, a model in which no updates, security patches, or configuration changes happen “in-place” on production systems. If any change is needed, a new version of the architecture is built and deployed instead.

Immutable infrastructures are more consistent, reliable, and predictable, and they simplify many aspects of software development and operations by preventing common issues related to mutability. Learn more about immutable infrastructures here.

Why is the canary pattern important for chaos engineering experiments?

First, by isolating the chaos experiment from the primary production environment and progressively ramping up the traffic sent to it, you can better control the potential blast radius of failure.

Second, having a dedicated environment to run your experiment makes it easier to isolate the experiment's logs and monitoring data from the rest of production.

Third, you can gradually increase the percentage of requests handled by the canary running the chaos experiment and roll back if errors are detected. This gives you a near-instant rollback: the big red button.
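To make the ramp-up and the big red button concrete, here is a minimal Python sketch of the loop. It assumes two hypothetical callbacks that you would wire to your own tooling: `set_canary_weight(percent)`, which could update a Route 53 record, an ALB rule, or a Lambda alias, and `canary_error_rate()`, which could read a CloudWatch metric for the canary. The step schedule and the 1% error threshold are illustrative values, not recommendations.

```python
from typing import Callable, Sequence


def ramp_up_canary(
    set_canary_weight: Callable[[float], None],  # hypothetical: applies a traffic %
    canary_error_rate: Callable[[], float],      # hypothetical: reads canary errors
    steps: Sequence[float] = (1.0, 5.0, 10.0, 25.0),
    max_error_rate: float = 0.01,
) -> bool:
    """Gradually shift traffic (in percent) to the canary running the experiment.

    Returns True if every step stayed within the error budget and False if the
    experiment was aborted. Either way, the canary weight ends at 0: once the
    experiment is over, it is rolled back.
    """
    try:
        for percent in steps:
            set_canary_weight(percent)
            # A real controller would wait here for metrics to accumulate.
            if canary_error_rate() > max_error_rate:
                return False  # The big red button: stop ramping up.
        return True
    finally:
        # Roll back: all traffic returns to the untouched environment.
        set_canary_weight(0.0)
```

Because the rollback sits in a `finally` clause, the weight returns to 0 both when the experiment is aborted and when it completes. For example, if a stubbed error rate jumps to 5% at the 10% step, the loop stops there and the recorded weights are 1, 5, 10, then 0.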

Finally, you can more easily control what traffic is sent to the canary running the chaos experiment, further limiting the potential impact on customers.

Consider several routing or partitioning mechanisms for your experiment:

- Internal teams vs. customers

- Paying customers vs. non-paying customers

- Geographic-based routing

- Feature flags (FeatureToggle)

- Random
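Whichever mechanism you pick, the routing decision itself can stay small. The sketch below implements each option from the list as a predicate over a hypothetical `Request` type; the field names, the `chaos-canary` flag name, and the default region are assumptions, not part of any AWS API. The random split hashes the user ID instead of calling a random number generator, so a given user consistently lands on the same side of the experiment.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Request:
    # Hypothetical request attributes; map them to what your application knows.
    user_id: str
    is_internal: bool = False
    is_paying: bool = False
    region: str = "us-east-1"
    flags: set[str] = field(default_factory=set)


def in_random_bucket(user_id: str, percent: float) -> bool:
    """Deterministic 'random' split: a user always lands in the same bucket."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100  # percent=1.0 selects 100 of 10,000 buckets


def send_to_canary(
    req: Request,
    strategy: str,
    percent: float = 1.0,
    regions: frozenset[str] = frozenset({"eu-west-1"}),
) -> bool:
    """Decide whether a request is routed to the canary running the experiment."""
    if strategy == "internal":      # internal teams vs. customers
        return req.is_internal
    if strategy == "non_paying":    # paying vs. non-paying customers
        return not req.is_paying
    if strategy == "geo":           # geographic-based routing
        return req.region in regions
    if strategy == "feature_flag":  # hypothetical flag name
        return "chaos-canary" in req.flags
    if strategy == "random":        # stable pseudo-random split
        return in_random_bucket(req.user_id, percent)
    raise ValueError(f"unknown strategy: {strategy}")
```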

How to do canary chaos experiments on AWS?

Following are my favorite ways:

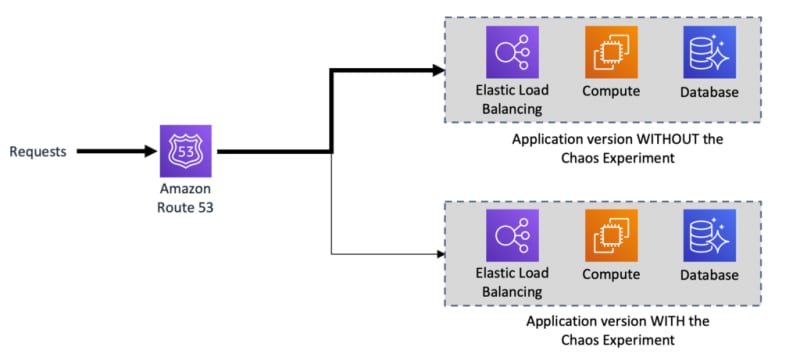

(1) Canary with Route 53 weighted routing policy

Route 53 lets you use a weighted routing policy to split the traffic between two versions of the application you are deploying: one with the experiment and one without.

Weighted routing enables you to associate multiple resources with a single domain name or subdomain name and choose how much traffic is routed to each resource.

To configure weighted routing for your canary chaos experiment, you assign each record a relative weight that corresponds with how much traffic you want to send to each resource — one of the resources being the application with the chaos experiment. Route 53 sends traffic to a resource based on the weight that you assign to the record as a proportion of the total weight for all records.

For example, if you want to send a tiny portion of the traffic to one resource and the rest to another resource, you might specify weights of 1 and 255. The resource with a weight of 1 gets 1/256th of the traffic (1/(1+255)), and the other resource gets 255/256ths (255/(1+255)).
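The arithmetic above generalizes to any number of records: each record's share is its weight divided by the sum of all weights. Here is a minimal sketch that computes those shares and builds the `ChangeBatch` dictionary that boto3's `route53.change_resource_record_sets` expects for weighted CNAME records. The record names and targets you pass in are hypothetical, and the 60-second `TTL` default is an illustrative choice.

```python
from fractions import Fraction


def traffic_shares(weights: dict[str, int]) -> dict[str, Fraction]:
    """Share of DNS answers each record gets: weight / sum of all weights."""
    for name, w in weights.items():
        if not 0 <= w <= 255:
            raise ValueError(f"{name}: Route 53 weights must be 0..255, got {w}")
    total = sum(weights.values())
    if total == 0:
        # All weights 0: Route 53 answers with each record equally often.
        return {name: Fraction(1, len(weights)) for name in weights}
    return {name: Fraction(w, total) for name, w in weights.items()}


def weighted_change_batch(
    record_name: str, targets: dict[str, str], weights: dict[str, int], ttl: int = 60
) -> dict:
    """Build a ChangeBatch upserting one weighted CNAME per environment."""
    traffic_shares(weights)  # validates the weight range
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "CNAME",
                    "SetIdentifier": env,  # must be unique per weighted record
                    "Weight": weights[env],
                    "TTL": ttl,  # a short TTL means a faster rollback
                    "ResourceRecords": [{"Value": target}],
                },
            }
            for env, target in targets.items()
        ]
    }
```

You would apply the batch with something like `boto3.client("route53").change_resource_record_sets(HostedZoneId=zone_id, ChangeBatch=batch)`, and roll back by upserting the canary record with a weight of 0.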

To me, it is probably the simplest and safest way to deploy your chaos experiment since it separates the experiment from the rest of the production environment.

The downside is that since you have to duplicate the entire environment, it is also the most expensive option.

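Note: The speed of the rollback depends directly on the DNS TTL value, because resolvers and clients can keep sending requests to the experiment until their cached answer expires. So watch out for the default TTL values, and shorten them before you start.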

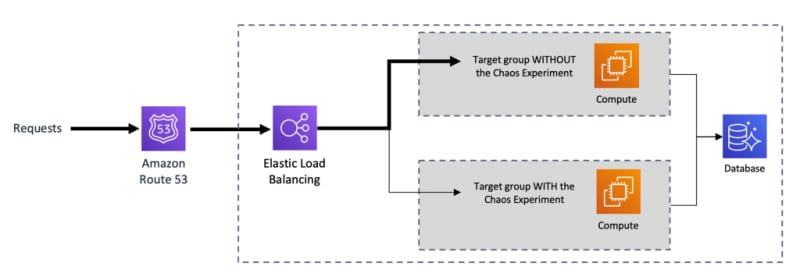

(2) Canary with Application Load Balancer and Weighted Target Groups

When creating an Application Load Balancer (ALB), you create one or more listeners and configure listener rules to direct the traffic to one target group. A target group tells the load balancer where to send traffic, e.g., EC2 instances, IP addresses, Lambda functions, etc.

To do canary chaos experiments with the ALB, you can use forward actions to route requests to one or more target groups. If you specify multiple target groups for a forward action, you must specify a weight for each target group.

Each target group’s weight is a value from 0 to 999. Requests that match a listener rule with weighted target groups are distributed to these target groups based on their weights. For example, if you specify two target groups, one with a weight of 10 and the other with a weight of 100, the target group with a weight of 100 receives ten times more requests than the other target group.
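In boto3 terms, the weighted forward action is a plain dictionary that you pass to `elbv2.modify_listener` (as `DefaultActions`) or `elbv2.modify_rule` (as `Actions`). The sketch below builds that dictionary and computes each target group's share of requests, so you can sanity-check the 10-versus-100 example above. The target group ARNs are placeholders you would supply, and disabling target group stickiness is an assumption made so that each request is distributed by weight.

```python
from fractions import Fraction


def weighted_forward_action(weights: dict[str, int]) -> dict:
    """Build an ALB 'forward' action splitting traffic across target groups.

    `weights` maps target group ARN -> weight (0..999).
    """
    for arn, w in weights.items():
        if not 0 <= w <= 999:
            raise ValueError(f"{arn}: ALB target group weight must be 0..999, got {w}")
    return {
        "Type": "forward",
        "ForwardConfig": {
            "TargetGroups": [
                {"TargetGroupArn": arn, "Weight": w} for arn, w in weights.items()
            ],
            # Stickiness off, so requests are not pinned to one target group.
            "TargetGroupStickinessConfig": {"Enabled": False},
        },
    }


def request_shares(weights: dict[str, int]) -> dict[str, Fraction]:
    """Fraction of matching requests each target group receives."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one weight must be positive")
    return {arn: Fraction(w, total) for arn, w in weights.items()}
```

Rolling back is the same call with the experiment's target group set to a weight of 0.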

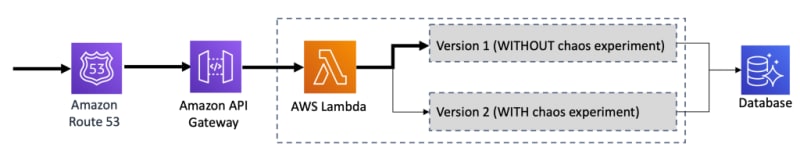

(3) Canary with API Gateway release deployments

For your serverless applications, you have the option of using Amazon API Gateway since it supports canary release deployments.

Using canaries, you can set the percentage of API requests that are handled by new API deployments to a stage. When canary settings are enabled for a stage, API Gateway will generate a new CloudWatch Logs group and CloudWatch metrics for the requests handled by the canary deployment API. You can use these metrics to monitor the performance and errors of the new API and react to them.

Please note that currently, API Gateway canary deployment only works for REST APIs, not the new HTTP APIs.
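For REST APIs, a canary can be created with boto3's `apigateway.create_deployment` (through its `canarySettings` argument) and adjusted with `apigateway.update_stage` patch operations. The helpers below only build those arguments, so they can be inspected before anything touches a real stage. The API ID and stage name in the usage note are hypothetical, and setting the canary share back to 0% is shown here as the rollback.

```python
def _check_percent(percent: float) -> None:
    if not 0.0 <= percent <= 100.0:
        raise ValueError(f"percentTraffic must be 0..100, got {percent}")


def canary_deployment_kwargs(rest_api_id: str, stage: str, percent: float) -> dict:
    """Keyword arguments for API Gateway's create_deployment (REST APIs only)."""
    _check_percent(percent)
    return {
        "restApiId": rest_api_id,
        "stageName": stage,
        "canarySettings": {"percentTraffic": percent, "useStageCache": False},
    }


def set_canary_percent_patch(percent: float) -> list[dict]:
    """Patch operations for update_stage that change the canary traffic share."""
    _check_percent(percent)
    # Patch operation values are passed as strings.
    return [
        {"op": "replace", "path": "/canarySettings/percentTraffic", "value": str(percent)}
    ]
```

Usage would look like `apigw.create_deployment(**canary_deployment_kwargs("a1b2c3", "prod", 5.0))` to start the experiment, then `apigw.update_stage(restApiId="a1b2c3", stageName="prod", patchOperations=set_canary_percent_patch(0.0))` to stop sending traffic to it.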

(4) Canary with AWS Lambda alias traffic shifting

The second option for serverless applications is the AWS Lambda alias traffic shifting feature. Update the version weights on a particular alias, and the traffic will be routed to new function versions based on the specified weight. You can easily monitor the health of that new version using CloudWatch metrics for that alias and roll back if errors are detected.
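With boto3, the traffic shift is a single `update_alias` call on the Lambda client whose `RoutingConfig` holds `AdditionalVersionWeights`, a map from a published version to the fraction (0.0 to 1.0) of invocations it receives. The sketch below builds those arguments; the function name, alias name, and version numbers used with it are hypothetical.

```python
from typing import Optional


def alias_shift_kwargs(
    function_name: str,
    alias: str,
    stable_version: str,
    canary_version: Optional[str] = None,
    canary_weight: float = 0.0,
) -> dict:
    """Arguments for boto3's Lambda update_alias call.

    The alias keeps `stable_version` as its primary version; `canary_weight`
    (0.0..1.0) of invocations go to `canary_version`, the published version
    running the chaos experiment. No canary (or a weight of 0) produces an
    empty weight map, i.e. the rollback.
    """
    if not 0.0 <= canary_weight <= 1.0:
        raise ValueError(f"canary_weight must be 0.0..1.0, got {canary_weight}")
    if canary_version == stable_version:
        raise ValueError("canary and stable versions must differ")
    if canary_version is None or canary_weight == 0.0:
        weights = {}
    else:
        weights = {canary_version: canary_weight}
    return {
        "FunctionName": function_name,
        "Name": alias,
        "FunctionVersion": stable_version,
        "RoutingConfig": {"AdditionalVersionWeights": weights},
    }
```

For example, `lambda_client.update_alias(**alias_shift_kwargs("checkout-fn", "live", "3", "4", 0.05))` would send about 5% of invocations to version 4, and calling it again without a canary version sends everything back to version 3.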

AWS CodeDeploy can help here: it can automatically update function alias weights based on a predefined set of preferences and automatically roll back if needed. Check out the AWS SAM or the serverless.com framework integration with CodeDeploy to automate alias traffic shifting.

Wrapping up

Using the canary pattern to perform chaos engineering experiments is a great way to deploy and gain confidence in your experiment, control the potential blast radius of its failure, get a fast rollback, and better understand its impact on the application.

Of course, it is not the only option, but it is the safest.

More reading about chaos engineering:

The Chaos Engineering Collection

- Adrian