Here is a quick test with a pattern that may hurt some databases: on a fixed set of rows (one thousand for example), you increment a counter. YugabyteDB reads the row and appends the new version of the column value into the tablet's MemTable. This is much simpler than traditional databases like PostgreSQL, which has to copy the whole row, or Oracle, which has to copy the old value. The MVCC (Multi-Version Concurrency Control) implementation in YugabyteDB is adding the versions into each key. This would generate bloat on traditional B-Trees but YugabyteDB stores tables and indexes into LSM-Trees.

Given the small size, the recent values stay in RAM: the latest changes are in the MemTable, others in SST files but they are small and stay in the buffer cache. If you have a lot of counters the automatic sharding will keep them into different MemTables.

That's all for the foreground activity the user is waiting on.

In the background, the MemTable with the old versions is flushed to disk, to SST Files, so that long queries with a read time in the past can still do consistent reads from the previous versions. Those SST files accumulates each time a MemTable is full of past versions, but a background process compacts them, keeping the intermediate version only for the required retention (timestamp_history_retention_interval_sec which defaults to 15 minutes). Then to total reaches a size that should not grow anymore.

As an example I've run the following that updates continuously 100000 counters every 5 seconds.

create table counter(name text primary key, value bigint default 0);

insert into counter(name) select generate_series(1,100000);

select pg_size_pretty(pg_table_size('counter')) \;

explain (analyze, costs off, dist) update counter set value=value+1 ;

\watch 0.001

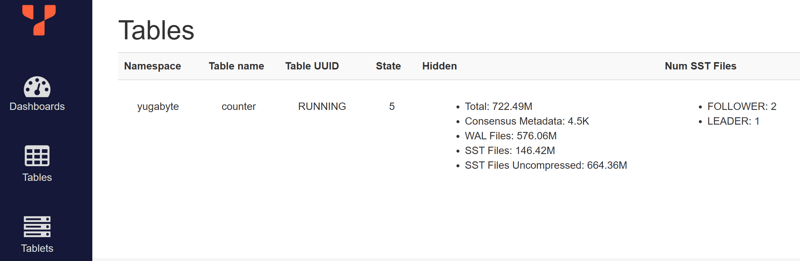

After 13 hours, I looked at the size:

The 5 SST files stay around 5 files, the trigger for compaction (rocksdb_level0_file_num_compaction_trigger), and 150MB, the compressed size of past versions to keep (timestamp_history_retention_interval_sec).

The WAL stays at 600MB, keeping 15 minutes of changes (timestamp_history_retention_interval_sec) in case a replica is temporarily down and has to re-synchronize.

I've also updated my YugabyteDB Lab with Grafana for this scenario, running:

docker compose --env-file demo-counter.env up -d --scale updates=1 --scale inserts=0

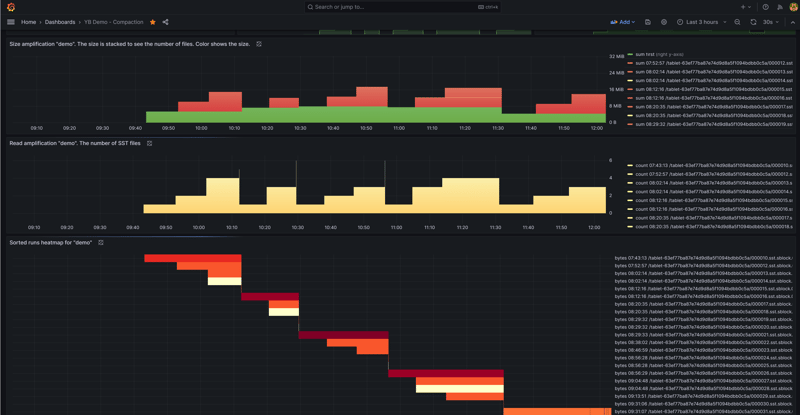

We can see the compaction happening, reducing the number of files as well as the total disk space:

The first pane is the size of the files, the second pane is the file count. After a few compaction, the number of files was down to one. The third pane shows each file with its lifetime (x-axis) and its size (color):

At some points, the files are compacted to one, smaller, which proves that there's no need for full compaction in this case. The space and read amplification are managed in the background by YugabyteDB