From a cost perspective, Oracle Cloud could be an interesting platform for a distributed database like YugabyteDB: OKE, the managed Kubernetes service, works well, and network traffic between Availability Domains within a region is cheap. But saying it is one thing; let's try it. I have some cloud credits that will expire soon, so let's use them to build and run a YugabyteDB database on OKE. This is a multi-part blog post, and this first part covers the creation of the Kubernetes cluster.

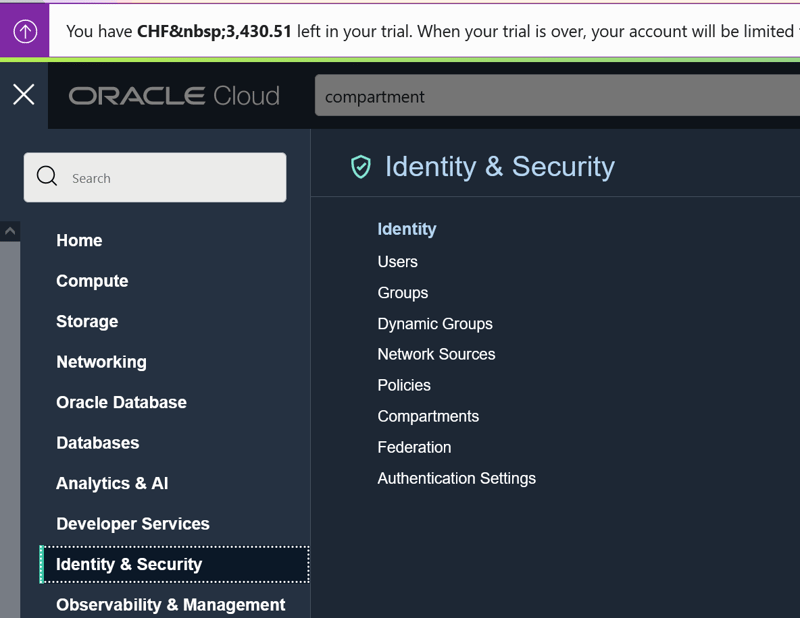

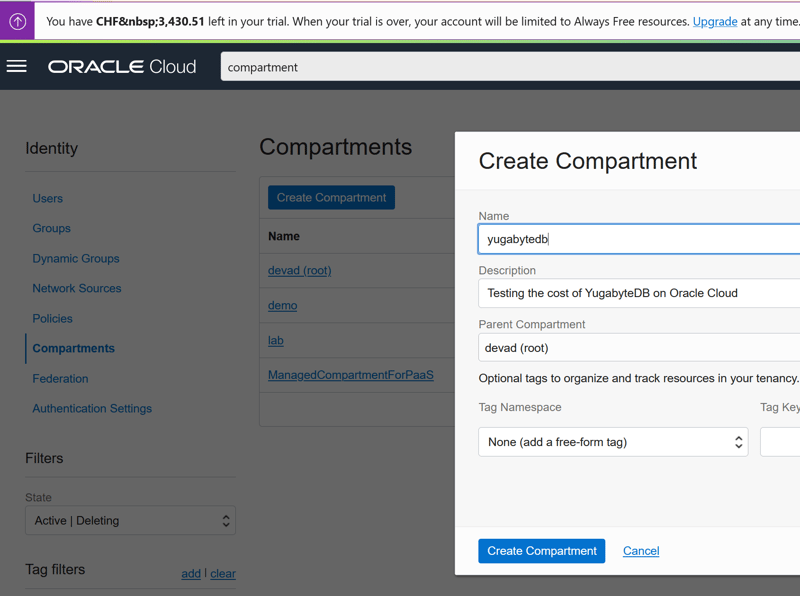

Compartment

To control the costs, I create a dedicated compartment for this project:

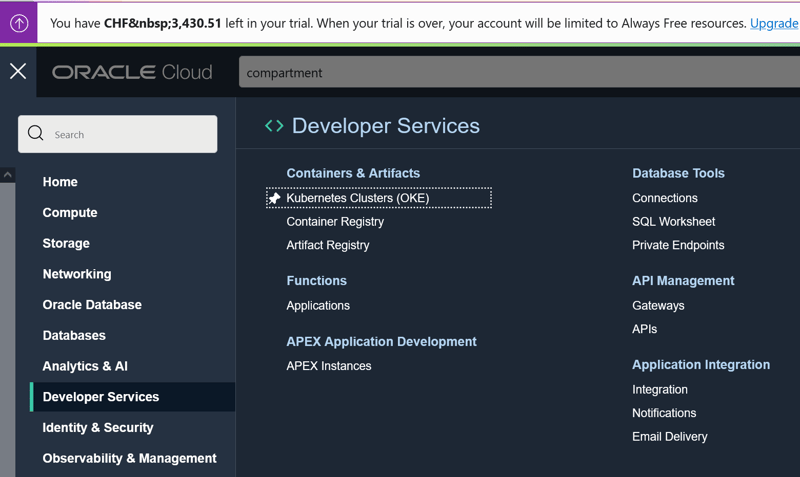

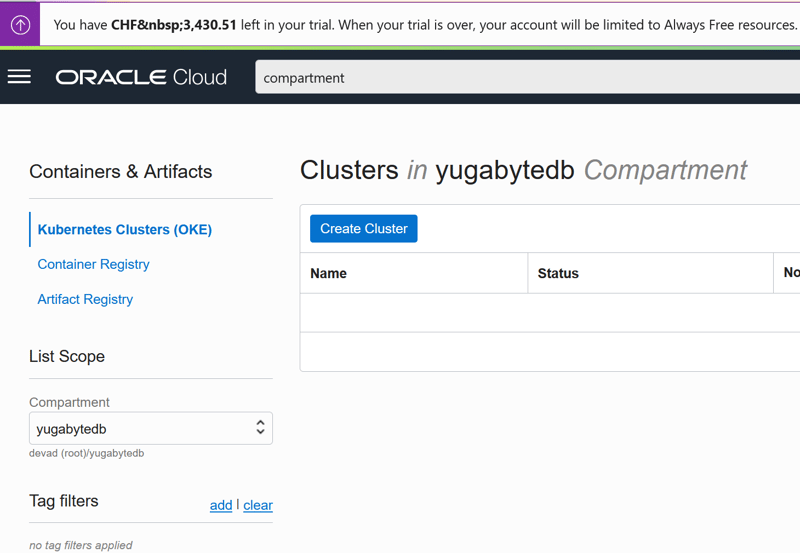
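
I created the compartment from the console, but the same step can probably be scripted with the OCI CLI. This is only a sketch: the compartment name and description are hypothetical (the screenshots are the reference), and `PARENT_OCID` is a placeholder for the OCID of your tenancy or parent compartment.

```shell
# Hypothetical values: adjust the name and description to your own project.
COMPARTMENT_NAME="yugabytedb"
COMPARTMENT_DESC="YugabyteDB on OKE"
# Placeholder: OCID of the tenancy (root) or of the parent compartment.
PARENT_OCID="${PARENT_OCID:-}"

if command -v oci >/dev/null 2>&1 && [ -n "$PARENT_OCID" ]; then
  # Create the compartment under the given parent.
  oci iam compartment create \
    --compartment-id "$PARENT_OCID" \
    --name "$COMPARTMENT_NAME" \
    --description "$COMPARTMENT_DESC"
else
  echo "Skipping: oci CLI not found or PARENT_OCID not set"
fi
```

The guard makes the snippet safe to paste anywhere: it does nothing unless the CLI is installed and a parent OCID is provided.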

Kubernetes

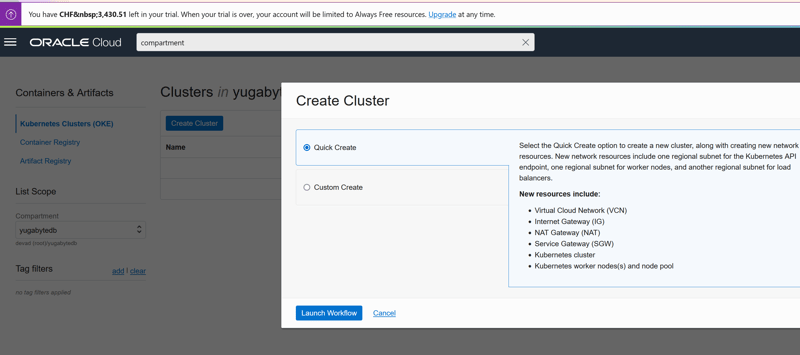

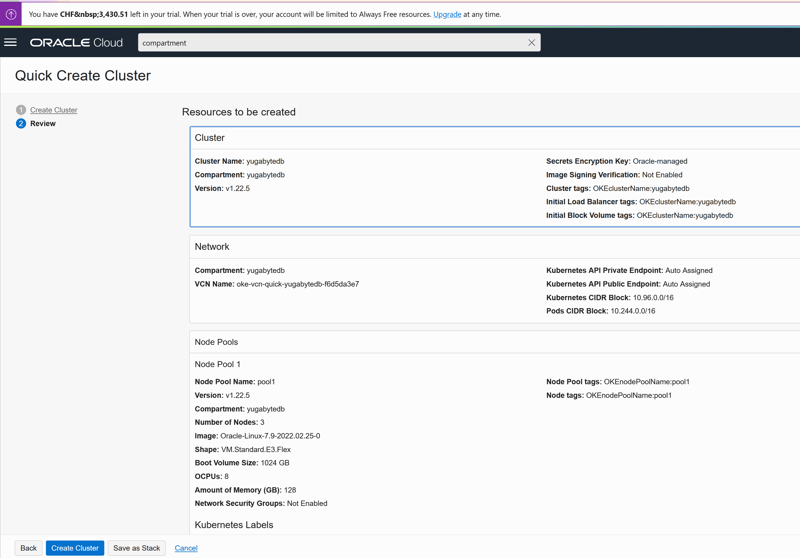

I'm using the "Quick create" option:

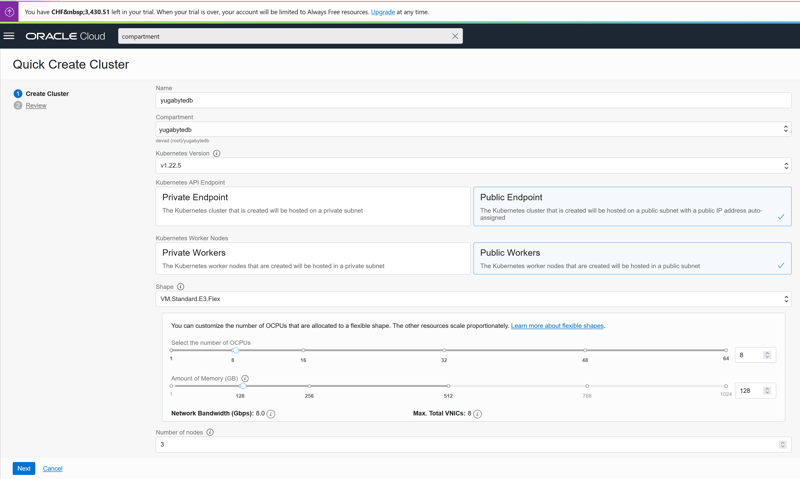

I'm creating a node pool with 3 nodes of 16 vCPU (8 OCPU) each

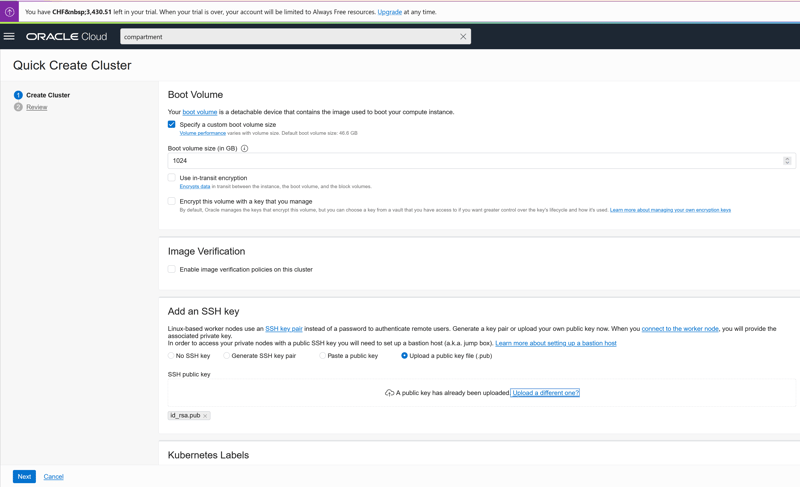

and 1 TB of storage, with all other settings left at their defaults.

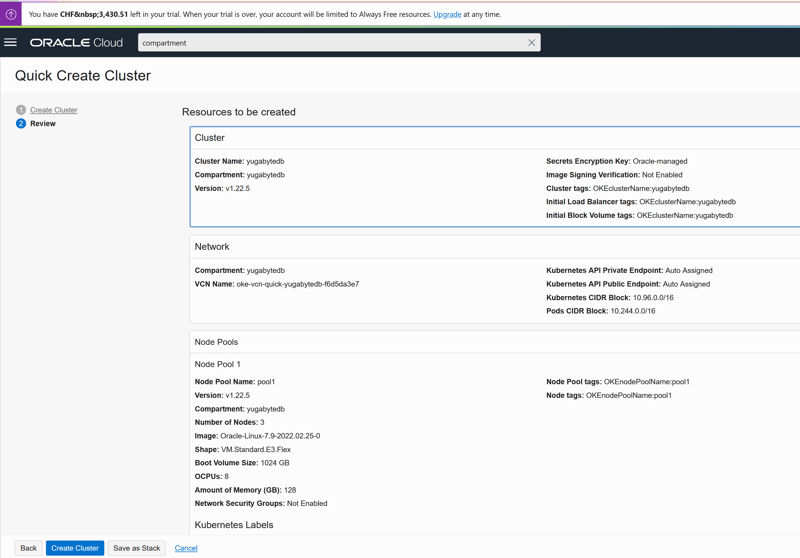
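
To get an idea of what will be billed, here is the total compute for this node pool as a quick calculation. The numbers come from the settings above; the 2 vCPU per OCPU ratio is the one implied by "16 vCPU (8 OCPU)".

```shell
nodes=3            # worker nodes in the pool
ocpu_per_node=8    # OCPUs per node
vcpu_per_ocpu=2    # ratio implied by "16 vCPU (8 OCPU)"

total_ocpu=$((nodes * ocpu_per_node))
total_vcpu=$((total_ocpu * vcpu_per_ocpu))

echo "Total: ${total_ocpu} OCPU = ${total_vcpu} vCPU"
```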

I didn't specify any custom tags, but OKEclusterName: yugabytedb is added automatically to the cluster, load balancers, block volumes, node pool, and nodes. With this tag and the compartment, I'll be able to clearly identify the costs.

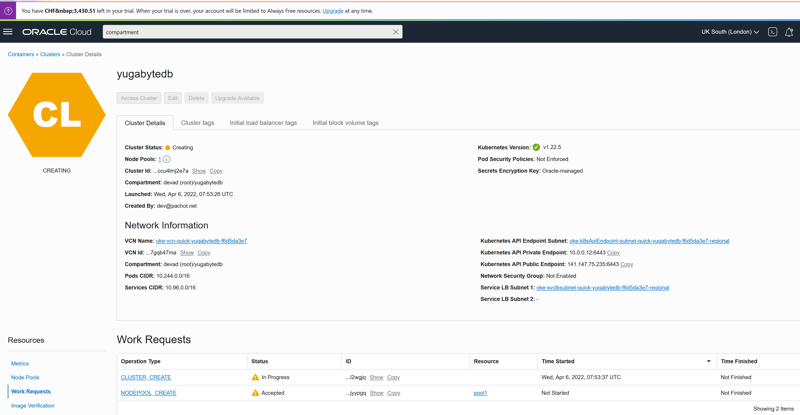

The Oracle Tags are CreatedOn: 2022-04-06T07:53:25.895Z for the cluster, CreatedOn: 2022-04-06T07:53:27.662Z for the cluster, and CreatedOn: 2022-04-06T07:53:27.662Z for the node pools.

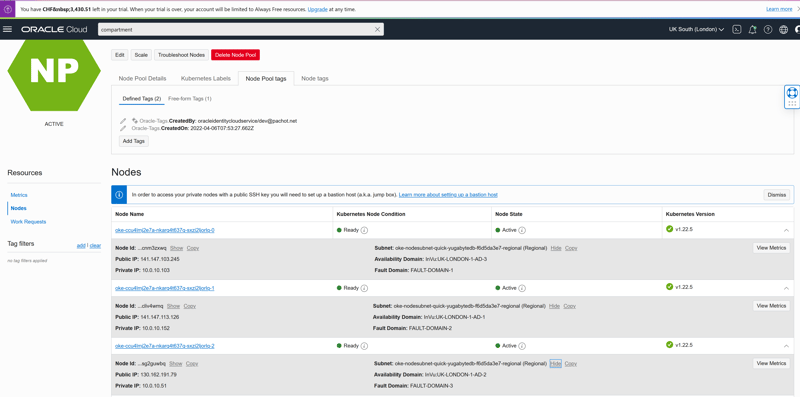
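
The tagged resources can probably also be listed from the command line with the OCI search service. This is a sketch, assuming that OKEclusterName is stored as a free-form tag (I have only looked at it in the console) and that the `oci` CLI is configured, as it is in Cloud Shell.

```shell
CLUSTER_NAME="yugabytedb"
# Structured search query matching resources by free-form tag key and value.
# Assumption: OKEclusterName is a free-form tag, not a defined tag.
QUERY="query all resources where (freeformTags.key = 'OKEclusterName' && freeformTags.value = '${CLUSTER_NAME}')"

if command -v oci >/dev/null 2>&1; then
  # Show only the resource type and display name of each match.
  oci search resource structured-search --query-text "$QUERY" \
    --query 'data.items[].{type:"resource-type", name:"display-name"}' \
    --output table
else
  echo "oci CLI not found; the query would be:"
  echo "$QUERY"
fi
```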

I have a worker node in each Availability Domain. This matters for high availability, and also for checking the cost of this configuration:

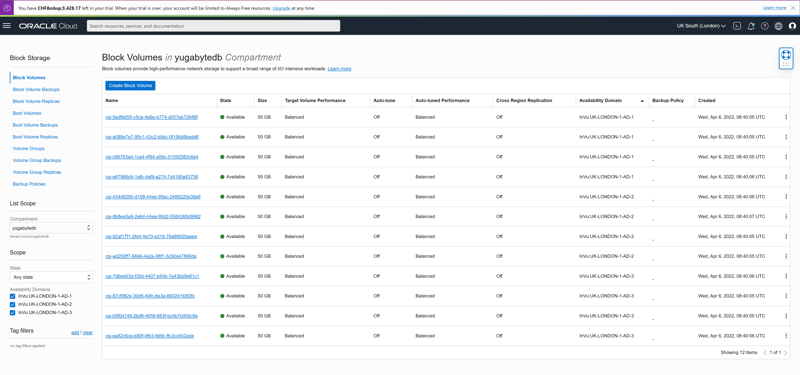
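
Once kubectl is configured (I do that further down, from Cloud Shell), the same placement can be checked from the command line. This is a minimal sketch, assuming the nodes carry the standard `topology.kubernetes.io/zone` label with the Availability Domain as its value; `count_nodes_per_zone` is a helper name I made up for this example.

```shell
# Count nodes per zone from `kubectl get nodes -L <label>` output,
# where the label value is the last column (the header line is skipped).
count_nodes_per_zone() {
  awk 'NR > 1 { zones[$NF]++ } END { for (z in zones) print z, zones[z] }' | sort
}

if command -v kubectl >/dev/null 2>&1; then
  # -L adds a column showing the value of the given node label.
  kubectl get nodes -L topology.kubernetes.io/zone | count_nodes_per_zone
else
  echo "kubectl not found; skipping the live check"
fi
```

With one worker per Availability Domain, I would expect three lines, each with a count of 1.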

I also verify that there is a block volume in each Availability Domain:

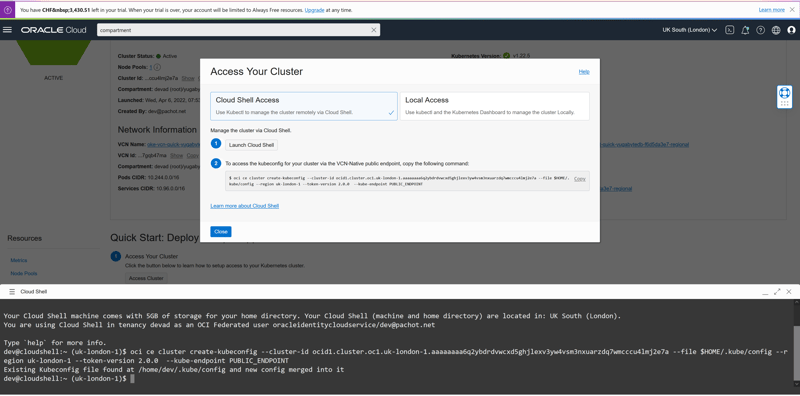

Next, I make sure that I can run kubectl commands from Cloud Shell. The instructions are found under "Quick Start".

I'll continue mostly on the command line from Cloud Shell:

    dev@cloudshell:~ (uk-london-1)$ oci ce cluster create-kubeconfig --cluster-id ocid1.cluster.oc1.uk-london-1.aaaaaaaa6q2ybdrdvwcxd5ghjlexv3yw4vsm3nxuarzdq7wmcccu4lmj2e7a \
      --file $HOME/.kube/config --region uk-london-1 \
      --token-version 2.0.0 --kube-endpoint PUBLIC_ENDPOINT
    Existing Kubeconfig file found at /home/dev/.kube/config and new config merged into it

I can see my 3 nodes:

    dev@cloudshell:~ (uk-london-1)$ kubectl get nodes -o wide
    NAME          STATUS   ROLES   AGE   VERSION   INTERNAL-IP   EXTERNAL-IP       OS-IMAGE                  KERNEL-VERSION                      CONTAINER-RUNTIME
    10.0.10.103   Ready    node    11m   v1.22.5   10.0.10.103   141.147.103.245   Oracle Linux Server 7.9   5.4.17-2136.304.4.1.el7uek.x86_64   cri-o://1.22.3-1.ci.el7
    10.0.10.152   Ready    node    10m   v1.22.5   10.0.10.152   141.147.113.126   Oracle Linux Server 7.9   5.4.17-2136.304.4.1.el7uek.x86_64   cri-o://1.22.3-1.ci.el7
    10.0.10.51    Ready    node    11m   v1.22.5   10.0.10.51    130.162.191.79    Oracle Linux Server 7.9   5.4.17-2136.304.4.1.el7uek.x86_64   cri-o://1.22.3-1.ci.el7

And the system pods:

    dev@cloudshell:~ (uk-london-1)$ kubectl get pods -A -o wide
    NAMESPACE     NAME                                   READY   STATUS    RESTARTS      AGE   IP             NODE          NOMINATED NODE   READINESS GATES
    kube-system   coredns-7bb8797d57-kw8v5               1/1     Running   0             15m   10.244.0.130   10.0.10.103   <none>           <none>
    kube-system   coredns-7bb8797d57-smvpx               1/1     Running   0             19m   10.244.0.3     10.0.10.51    <none>           <none>
    kube-system   coredns-7bb8797d57-zt86b               1/1     Running   0             15m   10.244.1.2     10.0.10.152   <none>           <none>
    kube-system   csi-oci-node-84pnz                     1/1     Running   0             16m   10.0.10.51     10.0.10.51    <none>           <none>
    kube-system   csi-oci-node-js6k7                     1/1     Running   1 (15m ago)   16m   10.0.10.152    10.0.10.152   <none>           <none>
    kube-system   csi-oci-node-lqbg6                     1/1     Running   0             16m   10.0.10.103    10.0.10.103   <none>           <none>
    kube-system   kube-dns-autoscaler-5cd75c9b4c-968vm   1/1     Running   0             19m   10.244.0.2     10.0.10.51    <none>           <none>
    kube-system   kube-flannel-ds-2lbsf                  1/1     Running   1 (15m ago)   16m   10.0.10.103    10.0.10.103   <none>           <none>
    kube-system   kube-flannel-ds-dtg8x                  1/1     Running   1 (15m ago)   16m   10.0.10.51     10.0.10.51    <none>           <none>
    kube-system   kube-flannel-ds-rvvbc                  1/1     Running   1 (15m ago)   16m   10.0.10.152    10.0.10.152   <none>           <none>
    kube-system   kube-proxy-h8qmf                       1/1     Running   0             16m   10.0.10.152    10.0.10.152   <none>           <none>
    kube-system   kube-proxy-kq8gr                       1/1     Running   0             16m   10.0.10.51     10.0.10.51    <none>           <none>
    kube-system   kube-proxy-wm9zr                       1/1     Running   0             16m   10.0.10.103    10.0.10.103   <none>           <none>
    kube-system   proxymux-client-2p5qw                  1/1     Running   0             16m   10.0.10.51     10.0.10.51    <none>           <none>
    kube-system   proxymux-client-46s88                  1/1     Running   0             16m   10.0.10.152    10.0.10.152   <none>           <none>
    kube-system   proxymux-client-rlpmm                  1/1     Running   0             16m   10.0.10.103    10.0.10.103   <none>           <none>
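
Rather than reading the whole list, a small filter can print only the pods that are not healthy. This is a sketch that relies on the column layout shown above (STATUS is the fourth column of `kubectl get pods -A`); `not_running` is a helper name I made up for this example.

```shell
# Print "namespace/pod: status" for every pod whose STATUS is neither
# Running nor Completed (the header line is skipped).
not_running() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 ": " $4 }'
}

if command -v kubectl >/dev/null 2>&1; then
  kubectl get pods -A | not_running
fi
```

No output means that all pods are Running or Completed, which is the case for the list above.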

I now have my Oracle Cloud Kubernetes cluster. In the next post, I'll install YugabyteDB on it with a Helm chart.