Since the original launch of Pieces for Developers, we’ve been laser-focused on developer productivity. We started by giving devs a place to store their most valuable snippets of code, then moved on to proactively saving and contextualizing them. Next, we built one of the first on-device LLM-powered AI copilots in the Pieces Copilot. Now, we’re introducing Pieces Copilot+.

After 10 months of hard work, dedication, and technical breakthroughs, we're finally achieving our vision of helping developers remember anything and interact with everything through a Universal Workstream Copilot deeply integrated across macOS, Linux, and Windows.

- Tsavo Knott, CEO and Technical Co-Founder of Pieces for Developers

We’re taking our obsession with contextual developer productivity to the next level with the launch of Live Context within our copilot, making it the world’s first temporally grounded copilot. Now available in public alpha, Live Context elevates the Pieces Copilot and allows it to understand your workflow and discuss how, where, and when you work. As with everything we do here at Pieces, this all happens entirely on-device and is available on macOS, Windows, and Linux. We truly believe the Pieces Copilot+ will revolutionize how you cross off your to-dos.

Let Us Help You 10x Your Productivity

You’ve got a lot going on. You’re researching in the browser, you’re deep in discussions with your colleagues in Slack, Google Chat, or Microsoft Teams, and you (finally) get to do some coding in your IDE. Then you get up for lunch, return to your workspace and completely forget what you were doing. Maybe you look at an error and think to yourself, “I swear I saw an explanation for that exact error somewhere last week…”

We get it, and more importantly, the Pieces Copilot+ gets it. By using context gathered throughout your workflow, Pieces Copilot+ can provide hyper-aware assistance to guide you right back to where you left off. Ask it, “What was I working on an hour ago?” and let it help you get back into flow. Ask it, “How can I resolve the issue I got with CocoaPods in the terminal in IntelliJ?” or “What did Mack say I should test in the latest release?” and let Pieces Copilot+ surface the information that you know you have but can’t remember where you saw.

Pieces Copilot+ is now temporally grounded in exactly what you have been working on, which reduces the need for manual context input, enhances continuity in developer tasks, reduces context switching, and enables more natural, intuitive interactions with the Pieces Copilot.

Using Live Context in Our Workstream

At Pieces for Developers, we love using our own product (just like you, we’re always trying to be more effective). Here are a few real prompts and use cases gathered from the team:

Workflow Assistance

- What task should I do next?

- What was I talking to Mark about earlier in Google Chat?

- Based on the given workflow context, have I performed all the necessary release chores in order to release our plugins for VS Code, JetBrains, Obsidian, and JupyterLab? In general, I need to bump their versions, update the changelogs, and make sure to support the latest version of the copilot package.

Research

- Review a new package or new API and ask, "How can I use the Lua SDK to call the version endpoint?"

- Review a support forum that had a specific solution to a difficult problem and ask the Pieces Copilot to apply it to the problem I am trying to solve.

- Review a research paper and have the Pieces Copilot summarize it for me.

Coding

- “How can I resolve the issue I'm experiencing in the `handleQGPTStreamEvent` function in IntelliJ?”

- “Implement the `handleQGPTStreamEvent` function according to the comment about the function definition.”

- “How should I adjust the `initCopilot` function given the global state implementation details outlined in Obsidian?”

- “How can I adjust the `incrementalRender` function to perform better when the difference between original and updated is very large?”

How It Works

If this seems like sorcery, don’t worry: we’ll reveal the man behind the curtain. The live context comes from the Workstream Pattern Engine, which shadows your workflow at the operating-system level. Whether you're on macOS, Windows, or Linux, your workflow is captured, processed, and stored on-device for access at a later date. The Workstream Pattern Engine applies several real-time, on-device algorithms to this data to proactively capture the most important information as you go about your workday, wherever you are: your browser, your IDE, or your collaboration tools. Over time, the Workstream Pattern Engine learns more and more about your work and becomes increasingly helpful. It even takes context from your previous copilot chats to build on the knowledge you are gathering.
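To make "temporally grounded" a little more concrete, here is a minimal sketch of the general idea: keep timestamped activity records locally and pull the ones from a requested time window into the copilot's context. This is not how the Workstream Pattern Engine is actually implemented. The `WorkstreamEvent` schema, the `LocalEventStore` class, and the `context_for` helper are all hypothetical names invented for illustration, and the store is in-memory only.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class WorkstreamEvent:
    """One captured moment of activity (hypothetical schema, for illustration)."""
    timestamp: datetime
    source: str   # e.g. "browser", "ide", "chat"
    summary: str


class LocalEventStore:
    """Holds events in memory; a real engine would persist them on-device."""

    def __init__(self) -> None:
        self._events: list[WorkstreamEvent] = []

    def capture(self, event: WorkstreamEvent) -> None:
        self._events.append(event)

    def window(self, start: datetime, end: datetime) -> list[WorkstreamEvent]:
        """Return events with start <= timestamp <= end, oldest first."""
        hits = [e for e in self._events if start <= e.timestamp <= end]
        return sorted(hits, key=lambda e: e.timestamp)


def context_for(store: LocalEventStore, now: datetime, lookback: timedelta) -> str:
    """Format recent events as plain text that could be prepended to a prompt."""
    events = store.window(now - lookback, now)
    return "\n".join(
        f"[{e.timestamp:%H:%M}] {e.source}: {e.summary}" for e in events
    )


if __name__ == "__main__":
    now = datetime.now()
    store = LocalEventStore()
    store.capture(WorkstreamEvent(now - timedelta(minutes=90), "browser", "Read a troubleshooting guide"))
    store.capture(WorkstreamEvent(now - timedelta(minutes=45), "ide", "Edited handleQGPTStreamEvent"))
    store.capture(WorkstreamEvent(now - timedelta(minutes=10), "chat", "Discussed release testing"))
    # Only events from the last hour are included in the context.
    print(context_for(store, now, timedelta(hours=1)))
```

A question like “What was I working on an hour ago?” then amounts to choosing a lookback window and handing the resulting text to whichever model you have selected, alongside the question itself.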

Getting Started with Live Context in Pieces Copilot+

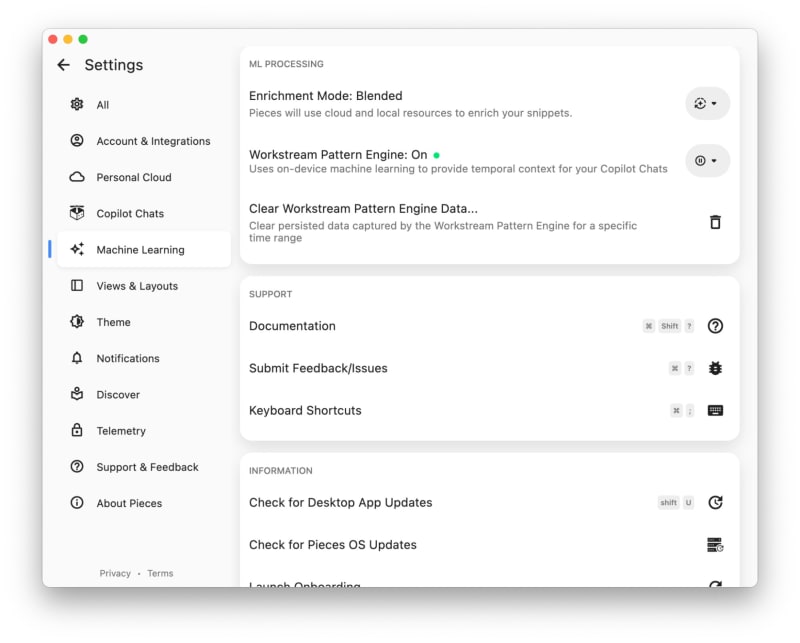

To start chatting about your day, you’ll first need to enable the Workstream Pattern Engine. You can disable it at any time, but remember that the Copilot will not be able to use workstream context from when you had it disabled.

To enable or disable the engine, head to the Machine Learning section of the Settings page of the Pieces Desktop App. You’ll see a button to enable the Workstream Pattern Engine. Hit it, and you’re good to go!

If this is your first time enabling the Workstream Pattern Engine, you’ll need to update some of the permissions granted to Pieces for Developers. (Note: Windows users don’t need to do this!) Just follow the prompts in the Pieces Desktop App, and you’ll be up and running in no time.

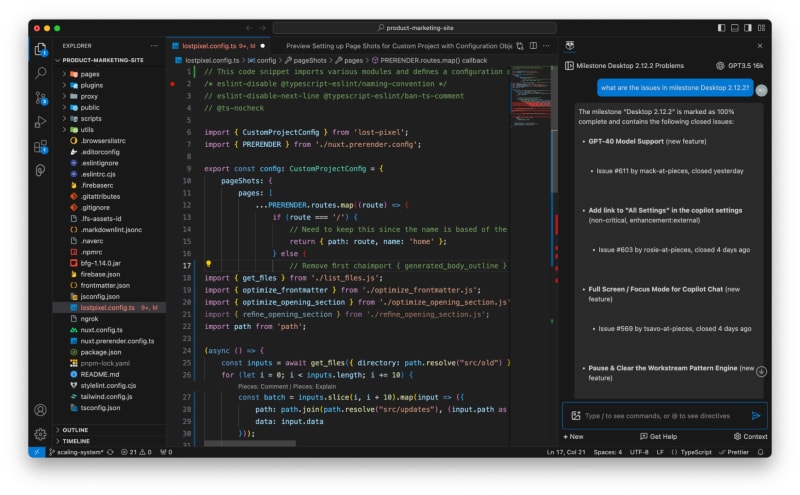

It’s time to start using the temporally grounded Pieces Copilot! Go about your usual work for a few minutes, and then start a new Pieces Copilot conversation with Live Context toggled on. Like before, you can leverage any of our available models, on-device or cloud, to engage with live context in the Pieces Copilot.

Of course, you can use it across your toolchain to carry on conversations in your favorite IDE and browser.

Using Live Context in Your Work

We already gave you some of our own favorite prompts, but let’s talk about some of the use cases for how Pieces Copilot+ with Live Context can streamline your workflow. Some good ones we’ve heard from our Early Access Program users include quickly summarizing large volumes of documentation after a simple scroll through a site, presenting only the most important themes from a list of unread conversations, or adding context for the PR they’re reviewing.

Similarly, our team often uses temporal copilot conversations to generate talking points in a daily standup. When Pieces knows what you’ve been working on, it’s easy to succinctly present your accomplishments from the last day. It might even be able to suggest what you should work on next, if you need help prioritizing your task list.

In a more complex use case, the Workstream Pattern Engine can assist in project hand-offs. When transitioning projects between teams or team members, it can generate comprehensive reports on the project’s status, key issues, and recent changes, simplifying the hand-off process. As you might imagine, this is also insanely useful when onboarding a new team member. You can ask Pieces Copilot+ to generate an onboarding document for a specific project, and you’ll get a ready-made document of repo documentation, collaborators, project background, and more.

Similarly, leveraging the Workstream Pattern Engine has helped our team cut down on the time spent analyzing errors. You no longer have to paste a stack trace or error message into a copilot chat, because it already knows. You can directly ask about the error, get some help, and move on without opening a new browser tab.

Pieces now deeply understands what you're currently working on and who you've been working with, and it connects that to the research you do in the browser and the code you reference, refactor, or write in your IDE. So, you no longer need to figure out who to talk to or which documentation is relevant! Simply ask Pieces “Who can help me out with this error?” or “Which open-source packages can help me implement this algorithm?”, get a response back, and be on your way.

As you get started with the world’s first temporally grounded copilot, try out one of these use cases and then discover your own! There are endless ways that the Workstream Pattern Engine and Pieces Copilot can help you work more efficiently.

Data Security and Privacy

Let’s get to the important stuff: Your workstream data is captured and stored locally on-device. At no point will anyone, including the Pieces team, have access to this data unless you choose to share it with us.

The Workstream Pattern Engine triangulates and leverages on-task and technical context across the developer-specific tools you're actively using. The bulk of the processing that occurs within the Workstream Pattern Engine is filtering, which utilizes our on-device machine learning engines to ignore sensitive information and secrets. This enables the highest levels of performance, security, and privacy.
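As a rough illustration of what "filtering out secrets before anything is stored" can look like, here is a small rule-based sketch. It is deliberately much simpler than the on-device machine learning described above and is not Pieces' implementation: the `redact` function and both patterns are assumptions chosen for the example, and a handful of regular expressions will miss many real-world secrets.

```python
import re

# Access key IDs in the well-known "AKIA" + 16 uppercase alphanumerics shape.
AWS_ACCESS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")

# Assignments such as `password=...`, `token: ...`, or `API_KEY=...`.
ASSIGNED_SECRET = re.compile(
    r"\b(password|passwd|secret|token|api[_-]?key)(\s*[:=]\s*)(\S+)",
    re.IGNORECASE,
)

PLACEHOLDER = "[REDACTED]"


def redact(text: str) -> str:
    """Replace likely secrets with a placeholder before the text is stored."""
    text = AWS_ACCESS_KEY_ID.sub(PLACEHOLDER, text)
    # Keep the variable name and separator so the surrounding context survives.
    return ASSIGNED_SECRET.sub(
        lambda m: f"{m.group(1)}{m.group(2)}{PLACEHOLDER}", text
    )


if __name__ == "__main__":
    print(redact("export API_KEY=abc123 && ./deploy.sh"))
```

Redacting at capture time, rather than at query time, means the sensitive value never lands in the local store at all, which is the property the paragraph above is describing.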

For some advanced components within the Workstream Pattern Engine, blended processing is recommended, as you may need to leverage a cloud-powered large language model as your copilot’s runtime. You can use local large language models instead, but this may reduce the fidelity of output and requires a fairly new machine (2021 or newer) and, ideally, a dedicated GPU. You can read this blog for more information about running local models on your machine.

As always, we've built Pieces from the ground up to put you in control. The Workstream Pattern Engine may be paused and resumed at any time from the Pieces Desktop App settings menu.

Conclusion

Are you ready to try the Workstream Pattern Engine for yourself? Download the latest version of the Pieces Suite today so that you can cut way back on context-switching, fragmented workflows, and awkward copilot conversations that lack the context you need.

As one of our Pieces Copilot+ users, you can help us improve and shape the future of the Workstream Pattern Engine! Please join our Discord to share your feedback, or reach out to our team to schedule a one-on-one feedback session with us. For more information, check out our documentation. Happy coding!