Hi!

Today I’ll try to expand a little on the scenario described in this Semantic Kernel blog post: “Azure OpenAI On Your Data with Semantic Kernel”.

The code below uses a GPT-4o model to power the chat and connects to Azure AI Search through Semantic Kernel (SK). While running this demo, you will notice mentions of [doc1], [doc2] and so on in the response. Extending the original SK blog post, this sample prints the details of each of the mentioned documents at the bottom of the output.

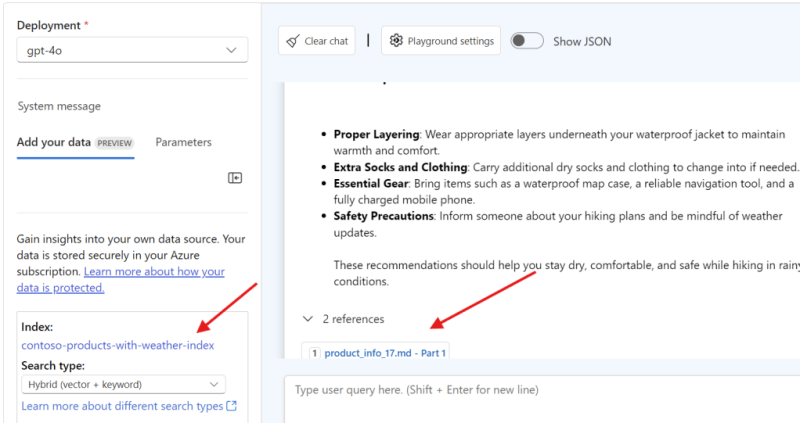

A similar question asked in Azure AI Studio also shows the references to the source documents.

Semantic Kernel Blog Post

The SK team explored how to leverage Azure OpenAI Service in conjunction with the Semantic Kernel to enhance AI solutions. By combining these tools, you can harness the capabilities of large language models to work effectively with data, using Azure AI Search capabilities. The post covered the integration process, highlighted the benefits, and provided a high-level overview of the architecture.

The post showcases the importance of context-aware responses and how the Semantic Kernel can manage state and memory to deliver more accurate and relevant results. This integration between SK and Azure AI Search empowers developers to build applications that understand and respond to user queries in a more human-like manner.

The blog post provides a code sample showcasing the integration steps. To run the scenario, you’ll need to:

- Upload your data files to Azure Blob storage.

- Vectorize and index data in Azure AI Search.

- Connect Azure OpenAI service with Azure AI Search.

For more in-depth guidance, be sure to check out the full post here.

Code Sample

And now it’s time to show how we can access the details of the response from an SK call when the response includes information from Azure AI Search.

Let’s take a look at the following program.cs to understand its structure and functionality.

- The sample program is a showcase of how to utilize Azure OpenAI and Semantic Kernel to create a chat application capable of generating suggestions based on user queries.

- The program starts by importing necessary namespaces, ensuring access to Azure OpenAI, configuration management, and Semantic Kernel functionalities.

- Next, the program uses a configuration builder to securely load Azure OpenAI keys from user secrets.

- The core of the program lies in setting up a chat completion service with Semantic Kernel. This service is configured to use Azure OpenAI for generating chat responses, utilizing the previously loaded API keys and endpoints.

- To handle the conversation, the program creates a sample chat history. This history includes both system and user messages, forming the basis for the chat completion service to generate responses.

- An Azure Search extension is configured to enrich the chat responses with relevant information. This extension uses an Azure Search index to pull in data, enhancing the chat service’s ability to provide informative and contextually relevant responses.

- Finally, the program runs the chat prompt, using the chat history and the Azure Search extension configuration to generate a response.

- This response is then printed to the console. Additionally, if the response includes citations from the Azure Search extension, these are also processed and printed, showcasing the integration’s ability to provide detailed and informative answers.
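
The last step, matching the [doc1], [doc2] mentions in the answer to the documents they refer to, can be sketched in a language-neutral way. The snippet below is only an illustration in plain Python, not the code from program.cs: the helper name `resolve_citations`, the sample answer, and the citation fields (`title`, `url`) are all hypothetical, and it assumes that [docN] points at the N-th entry of the citations list returned with the response.

```python
import re

# Hypothetical helper (not part of Semantic Kernel or the Azure SDK).
# Assumption: the marker [docN] refers to the N-th citation, counting from 1.
def resolve_citations(answer, citations):
    """Return (marker, citation) pairs for each distinct [docN] marker,
    in order of first appearance in the answer."""
    resolved = []
    seen = set()
    for match in re.finditer(r"\[doc(\d+)\]", answer):
        index = int(match.group(1))
        if index in seen:
            continue  # report each document only once
        seen.add(index)
        # Skip markers that have no matching citation entry.
        if 1 <= index <= len(citations):
            resolved.append((match.group(0), citations[index - 1]))
    return resolved

# Made-up sample data, for illustration only.
sample_answer = "Boots are waterproof [doc1]. Tents sleep four people [doc2]."
sample_citations = [
    {"title": "boots.md", "url": "https://example.com/boots.md"},
    {"title": "tents.md", "url": "https://example.com/tents.md"},
]

for marker, citation in resolve_citations(sample_answer, sample_citations):
    print(f"{marker} {citation['title']} ({citation['url']})")
```

Deduplicating the markers keeps the printed list short when the same document is cited several times in one answer, and the bounds check avoids a crash if the model mentions a document number that has no citation entry.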

Happy coding!

Greetings

El Bruno

More posts on my blog ElBruno.com.