The Apache Spark connector for Azure SQL Database (and SQL Server) enables these databases to be used as input data sources and output data sinks for Apache Spark jobs. You can use the connector in Azure Synapse Analytics for big data analytics on real-time transactional data and to persist results for ad-hoc queries or reporting.

At the time of writing, the Azure SQL connector in Azure Synapse Analytics supports neither linked services nor AAD pass-through. You can still use other options, such as Azure Active Directory authentication or direct SQL authentication (username and password). A secure way to handle the latter is to store the Azure SQL Database credentials in Azure Key Vault as a Secret, which is what this short blog post covers.

Assuming you have an Azure Synapse Workspace and Azure SQL Database already created, all you need to do is:

- Create an Azure Key Vault and add a Secret to store the Azure SQL Database connectivity info.

- Create a Linked Service for your Azure Key Vault in Azure Synapse Workspace.

- Grant the Azure Synapse workspace managed service identity appropriate permissions on the Azure Key Vault.

Here is a walkthrough of the process.

Create an Azure Key Vault and add a Secret:

In this case I have stored the entire JDBC connection string, but you can choose to store just the password instead.
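If you go with the full connection string, it helps to assemble it the same way every time before saving it as a Secret. The sketch below is one possible way to do that. The helper name `build_jdbc_url` and the sample server, database, and login values are hypothetical, and the properties shown (`encrypt`, `trustServerCertificate`, `loginTimeout`) are assumed from the usual Azure SQL Database JDBC format rather than taken from this post.

```python
def build_jdbc_url(server, database, user, password, port=1433):
    """Assemble a SQL Server JDBC connection string for Azure SQL Database.

    Hypothetical helper for illustration; adjust the properties to your setup.
    """
    properties = {
        "database": database,
        "user": user,
        "password": password,
        "encrypt": "true",
        "trustServerCertificate": "false",
        "loginTimeout": "30",
    }
    # JDBC properties are appended as semicolon-separated key=value pairs.
    suffix = ";".join(f"{key}={value}" for key, value in properties.items())
    return f"jdbc:sqlserver://{server}.database.windows.net:{port};{suffix};"


# Placeholder values only; never hard-code real credentials in a notebook.
example_url = build_jdbc_url("myserver", "mydb", "sqladmin", "<password>")
print(example_url)
```

The resulting string is what you would paste into the Secret value in Azure Key Vault.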

To retrieve secrets from Azure Key Vault, the recommended way is to create a Linked Service to your Azure Key Vault. Also, make sure that the Synapse workspace managed service identity (MSI) has Secret Get privileges on your Azure Key Vault. This will let Synapse authenticate to Azure Key Vault using the Synapse workspace managed service identity.

You can also authenticate using your own Azure Active Directory user credentials.

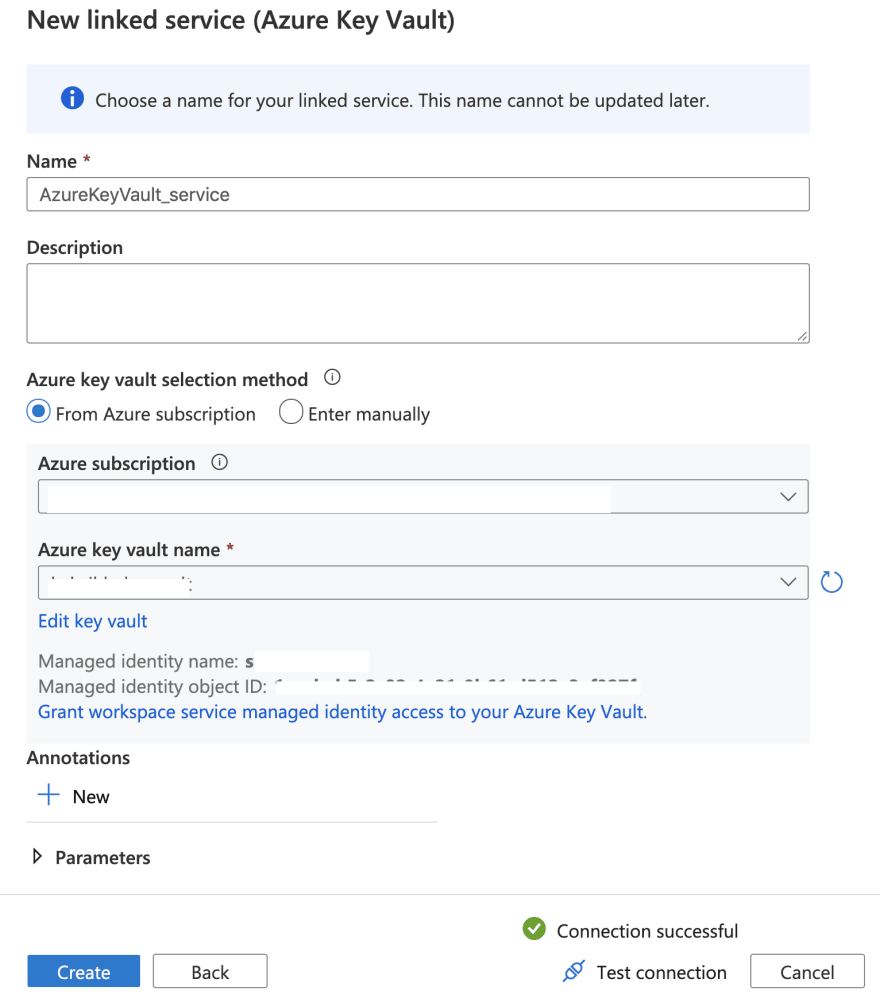

Create a Linked Service in Azure Synapse Workspace:

Grant appropriate access for the Azure Synapse workspace managed service identity to your Azure Key Vault:

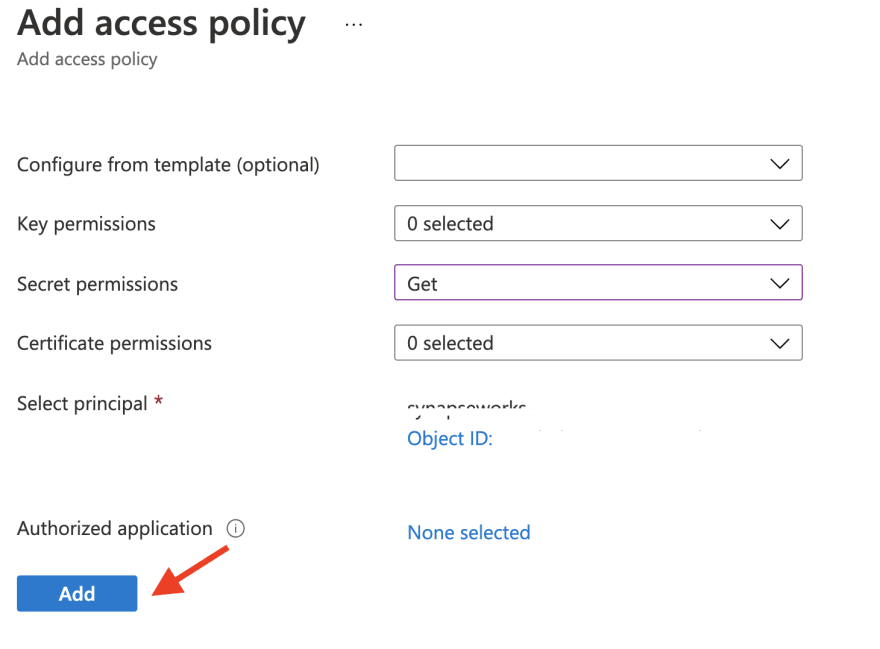

Choose Get permission on Secret:

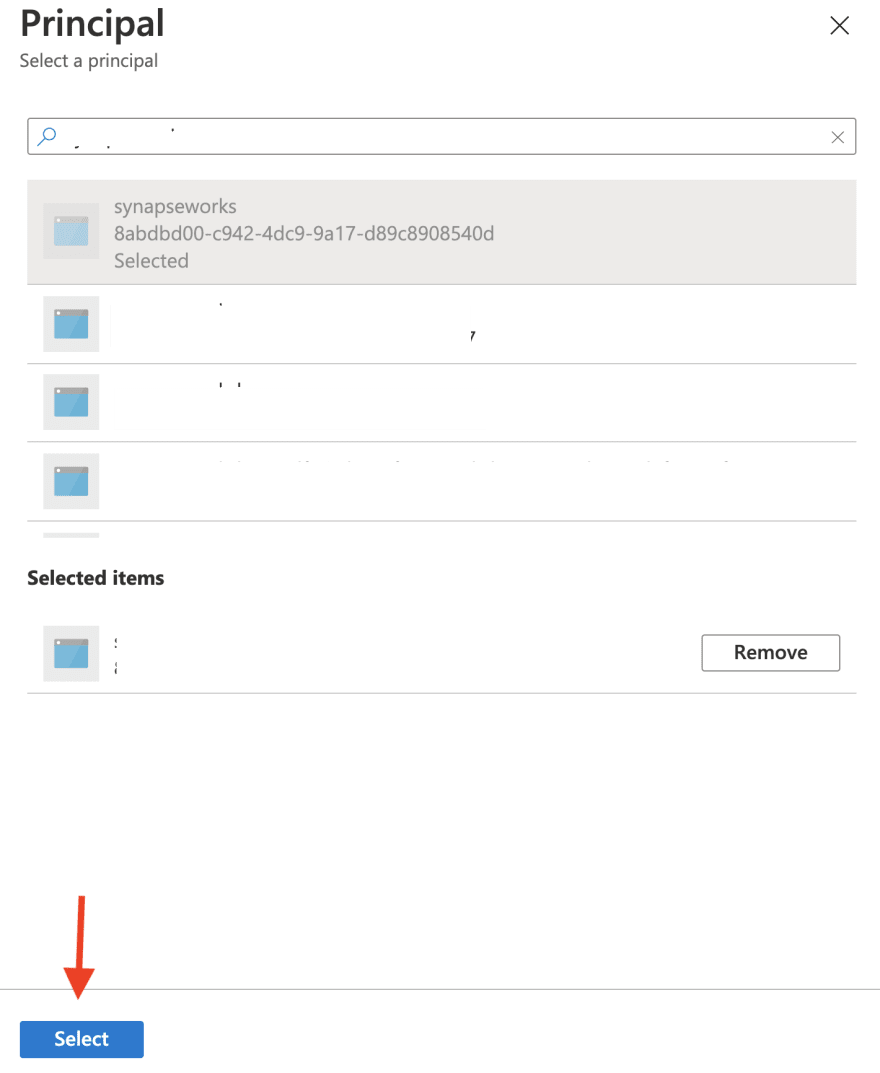

Search for the Synapse Workspace Managed Service Identity. It has the same name as the workspace.

Add the policy:

Click Save to confirm:

Let’s see how to use this…

I will be using pyspark in Synapse Spark pools as an example.

Synapse uses Azure Active Directory (AAD) passthrough by default for authentication between resources. If you need to connect to a resource using other credentials, use the TokenLibrary directly. It simplifies the process of retrieving SAS tokens, AAD tokens, connection strings, and secrets stored in a linked service or in an Azure Key Vault.

To retrieve a secret stored in Azure Key Vault, use the TokenLibrary.getSecret() function. Here is a Python example, but the same applies to C# or Scala.

For example, to access data from the SalesLT.Customer table (part of the AdventureWorks sample database), you can use the following:

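```python
# Fetch the JDBC connection string stored as a Secret in Azure Key Vault
url = TokenLibrary.getSecret("<Azure Key Vault name>", "<Secret name>", "<Linked Service name>")

dbtable = "SalesLT.Customer"

# Read the table through the Apache Spark connector for SQL Server / Azure SQL
customers = spark.read \
    .format("com.microsoft.sqlserver.jdbc.spark") \
    .option("url", url) \
    .option("dbtable", dbtable) \
    .load()

print(customers.count())

customers.show(5)
```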
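If you stored only the password in Key Vault rather than the whole connection string, the read looks much the same, with the user and password passed as separate options. This is a minimal sketch under that assumption. The helper names `sql_read_options` and `read_table`, as well as the server, database, and login values, are hypothetical placeholders.

```python
def sql_read_options(server, database, table, user, password):
    """Build the option map for the Spark SQL connector (hypothetical helper)."""
    return {
        "url": f"jdbc:sqlserver://{server}.database.windows.net:1433;database={database};",
        "dbtable": table,
        "user": user,
        "password": password,
    }


def read_table(spark, options):
    """Load a table as a DataFrame using an option map like the one above."""
    return (
        spark.read
        .format("com.microsoft.sqlserver.jdbc.spark")
        .options(**options)
        .load()
    )


# In a Synapse notebook the password would come from Key Vault, for example:
# password = TokenLibrary.getSecret("<Azure Key Vault name>", "<Secret name>", "<Linked Service name>")
options = sql_read_options("myserver", "mydb", "SalesLT.Customer", "sqladmin", "<password>")

# Uncomment inside a Spark session:
# customers = read_table(spark, options)
```

Keeping the option map separate from the read call makes it easy to reuse the same settings for several tables by changing only `dbtable`.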

That’s all there is to it!