RAG Architecture: A Comprehensive Guide for Efficient Machine Learning

This article takes an in-depth look at RAG (Retrieval-Augmented Generation) architecture, an approach to building efficient and scalable machine learning systems that ground their output in retrieved information. It covers core concepts, tools, real-world use cases, and a step-by-step example.

1. Introduction

1.1 What is RAG Architecture?

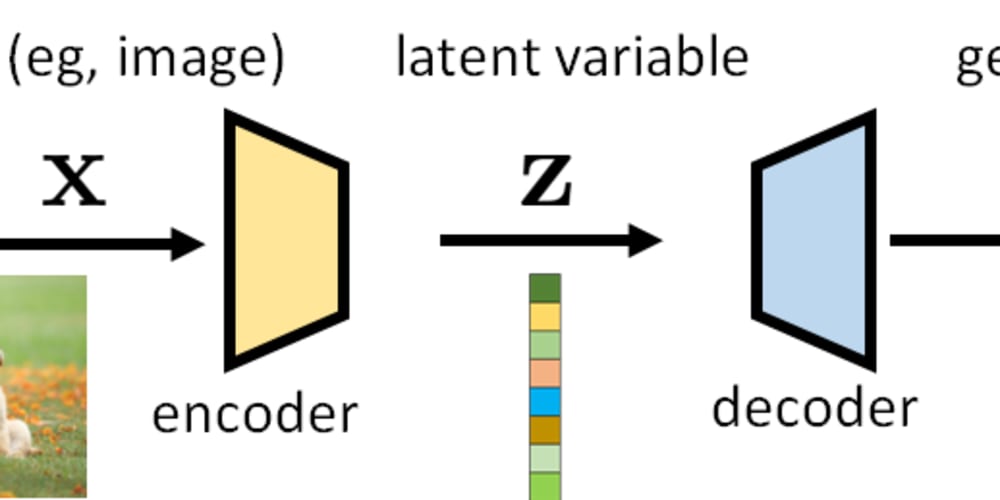

RAG, short for Retrieval-Augmented Generation, is an approach in natural language processing (NLP) that pairs a retrieval model with a generation model, so that generated text can draw on information looked up at query time rather than only on what the model learned during training. It has two key components:

- Retrieval Model: This model seeks to find relevant information from a large database of text, typically known as a knowledge base. It acts as a "memory" for the system, providing context and factual information.

- Generation Model: This model is responsible for generating text based on the input and the retrieved information. It often takes the form of a language model trained to produce coherent and contextually relevant text.

1.2 Historical Context and Evolution

The idea of combining retrieval and generation techniques in NLP has been around for a while. Early research focused on using simple retrieval methods like keyword matching alongside generation models. However, recent advancements in deep learning, particularly the emergence of powerful transformer-based architectures, have enabled a more sophisticated integration of these two approaches.

1.3 Problem Solved and Opportunities Created

RAG architecture tackles the limitations of traditional generation models, which often struggle with:

- Factual accuracy: Generation models can sometimes produce inaccurate or hallucinated information, especially when dealing with complex domains or factual topics.

- Limited context: Relying solely on the input text can limit the model's ability to access and utilize relevant background knowledge.

- Scalability: Generating large amounts of text can be computationally expensive, and handling vast knowledge bases poses challenges for traditional generation models.

RAG architecture addresses these limitations by introducing a retrieval component that:

- Improves accuracy: By grounding the generation process in factual information from a knowledge base, RAG models can produce more accurate and reliable text.

- Expands context: The retrieval component provides access to a wider range of context, enhancing the model's understanding and improving the generated output.

- Increases scalability: Retrieving relevant information from a knowledge base can be significantly more efficient than generating all the necessary information from scratch.

2. Key Concepts, Techniques, and Tools

2.1 Key Concepts

- Knowledge Base: This is a large repository of text data that acts as the source of information for the retrieval model. It can include documents, articles, books, code, or any other type of text data relevant to the task.

- Retrieval Model: This model takes the input and searches the knowledge base for relevant information. It leverages techniques like dense retrieval, where text is encoded into numerical vectors and similarity is calculated based on these vectors.

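- Generation Model: This model takes the retrieved information and the original input and generates the final text output. It is usually a transformer-based generative language model, such as BART, T5, or a GPT-style model. (Encoder-only models like BERT are better suited to the retrieval side.)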

- Fusion: This refers to the process of combining the retrieved information with the input text to feed it to the generation model. It can involve techniques like attention mechanisms to highlight relevant parts of the retrieved information.
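To make the dense-retrieval idea concrete, here is a minimal sketch in plain Python. The three-dimensional vectors and document IDs are made up for illustration; a real system would obtain embeddings from an encoder model and would normally use a vector index rather than a linear scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query_vector, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vector, vec))
              for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical toy embeddings standing in for encoder output
toy_index = {
    "paris": [0.9, 0.1, 0.0],
    "berlin": [0.7, 0.3, 0.1],
    "bananas": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]
top_hits = retrieve(query, toy_index, k=2)
print(top_hits)
```

The retrieved IDs would then be mapped back to their passages and handed to the generation model together with the input.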

2.2 Tools and Libraries

- Transformers library: This popular library from Hugging Face offers pre-trained models such as BART, T5, and GPT-2, which can serve as generation models in RAG architectures.

- Faiss: This library is designed for efficient similarity search and is often used for dense retrieval.

- Elasticsearch: This is a search engine that can be used to index and search the knowledge base.

- Sentence Transformers: This library provides pre-trained models specifically designed for encoding text into meaningful vectors suitable for dense retrieval.
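Alongside dense retrieval, keyword-based ranking remains a common baseline; Elasticsearch scores full-text matches with BM25 by default. The sketch below illustrates the BM25 formula in plain Python, assuming whitespace tokenization and the usual defaults k1 = 1.2 and b = 0.75. It is an illustration of the scoring idea, not Elasticsearch's implementation, and the documents are made up.

```python
import math
from collections import Counter

def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Score each document against the query with BM25."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: number of documents containing each term
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            n_t = doc_freq[term]
            idf = math.log(1 + (n_docs - n_t + 0.5) / (n_t + 0.5))
            tf = term_freq[term]
            # Longer-than-average documents are penalized via b
            length_norm = 1 - b + b * len(tokens) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores

docs = [
    "paris is the capital of france",
    "berlin is the capital of germany",
    "bananas are rich in potassium",
]
scores = bm25_scores("capital of france", docs)
best = max(range(len(docs)), key=lambda i: scores[i])
print(docs[best])
```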

2.3 Current Trends and Emerging Technologies

- Knowledge Graph Integration: Integrating knowledge graphs, which represent relationships between entities, into the retrieval process can enhance the model's ability to understand complex concepts and relationships.

- Multi-Modal Retrieval: This involves retrieving information from various modalities like text, images, and audio, further enriching the context and enabling more nuanced responses.

- Explainability and Interpretability: Researchers are actively working on making RAG architectures more transparent and interpretable, enabling users to understand how the model arrives at its outputs.

2.4 Industry Standards and Best Practices

- Data Quality: Maintaining a high-quality and diverse knowledge base is crucial for the effectiveness of RAG architecture.

- Model Selection: Choosing appropriate retrieval and generation models based on the specific task and data is important.

- Evaluation Metrics: Evaluating RAG architectures requires metrics beyond traditional text-generation metrics, covering both retrieval performance (did the right documents come back?) and the factual accuracy of the generated text.
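On the retrieval side, two widely used metrics are recall@k and mean reciprocal rank (MRR). The following is a minimal sketch; the document IDs and relevance judgments are hypothetical and only serve to show the arithmetic.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    top_k = set(retrieved[:k])
    return len(top_k & set(relevant)) / len(relevant)

def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant result, or 0.0 if none is retrieved."""
    relevant = set(relevant)
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0

# Hypothetical (retrieved, relevant) pairs for two queries
runs = [
    (["d3", "d1", "d7"], ["d1", "d2"]),
    (["d5", "d4", "d9"], ["d5"]),
]
mean_recall = sum(recall_at_k(r, rel, k=3) for r, rel in runs) / len(runs)
mrr = sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)
print(mean_recall, mrr)
```

Factual accuracy of the generated text needs a separate check, for example comparing answers against reference answers or against the retrieved passages.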

3. Practical Use Cases and Benefits

3.1 Real-World Applications

- Question Answering: RAG architectures excel at answering complex questions by retrieving relevant information from a knowledge base and using it to generate a comprehensive and informative response.

- Dialogue Systems: RAG models can be used to create more engaging and informative conversational AI agents that can draw on external knowledge to answer questions and provide insightful responses.

- Content Generation: By leveraging a knowledge base, RAG architectures can assist in generating high-quality and factually accurate content for various purposes, such as writing news articles, product descriptions, or scientific reports.

- Code Generation: RAG architecture can be used to generate code snippets or complete programs by retrieving relevant code from a repository and using it to guide the code generation process.

3.2 Advantages and Benefits

- Enhanced Accuracy: The retrieval component helps ensure the generated text is grounded in factual information, leading to higher accuracy and reliability.

- Improved Context: RAG models can access and utilize a wider range of context from the knowledge base, resulting in more comprehensive and nuanced outputs.

- Increased Scalability: By leveraging retrieval techniques, RAG architectures can handle large amounts of information and generate text efficiently, making them suitable for tasks involving extensive knowledge bases.

- Greater Flexibility: RAG models can be adapted to various domains and tasks by changing the knowledge base and fine-tuning the generation model.

3.3 Industries and Sectors

RAG architecture can benefit a wide range of industries and sectors, including:

- Education: Building intelligent tutoring systems and providing personalized learning experiences.

- Healthcare: Creating AI-powered diagnostics and treatment recommendations based on medical literature and patient data.

- Finance: Developing financial analysis tools that leverage market data and economic trends.

- Legal: Assisting with legal research and document analysis by retrieving relevant case law and legal precedents.

- Customer Service: Enhancing chatbot experiences by providing accurate and relevant information to customers.

4. Step-by-Step Guide and Examples

This section provides a simplified example of building a RAG architecture for a question answering task, using Sentence Transformers for encoding, Elasticsearch for retrieval, and the Transformers library for generation.

Step 1: Setting up the Environment

Install the required libraries:

pip install transformers elasticsearch sentence-transformers

Step 2: Preparing the Knowledge Base

Create a directory for your knowledge base and add the relevant text documents. In this example, we use a set of Wikipedia articles.
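Sentence encoders accept only a limited input length, so long articles are usually split into shorter passages before indexing. Below is a minimal sketch, assuming word-based chunks with some overlap; the function name `chunk_text` and its default sizes are illustrative choices, not something prescribed by the libraries used in this guide.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping word-based passages."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk reaches the end of the text
        if start + chunk_size >= len(words):
            break
    return chunks

# Tiny example: ten words, chunks of four words, two words of overlap
sample = " ".join(f"w{i}" for i in range(10))
passages = chunk_text(sample, chunk_size=4, overlap=2)
print(passages)
```

Each passage can then be saved as its own file in the knowledge base directory, so that retrieval returns focused passages instead of whole articles.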

Step 3: Indexing the Knowledge Base

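Use Elasticsearch to index the knowledge base documents. The snippet assumes a node running at localhost:9200 and keeps the body= argument, which recent client versions deprecate in favor of keyword arguments such as document=:

    import os

    from elasticsearch import Elasticsearch
    from sentence_transformers import SentenceTransformer

    # Assumes a local Elasticsearch node; adjust the URL and credentials for your setup
    es = Elasticsearch('http://localhost:9200')

    # Directory created in Step 2 (placeholder path)
    knowledge_base_path = 'knowledge_base'

    # Load a pre-trained Sentence Transformer model (produces 768-dimensional vectors)
    encoder = SentenceTransformer('all-mpnet-base-v2')

    # The vector field must be mapped as dense_vector for cosineSimilarity to work
    es.indices.create(index='knowledge_base', body={'mappings': {'properties': {
        'text': {'type': 'text'},
        'embedding': {'type': 'dense_vector', 'dims': 768},
    }}})

    # Iterate over the knowledge base documents
    for filename in os.listdir(knowledge_base_path):
        with open(os.path.join(knowledge_base_path, filename), 'r', encoding='utf-8') as file:
            text = file.read()
        # Encode the text into a vector (long inputs are truncated by the encoder)
        embedding = encoder.encode(text)
        # Index the text together with its embedding so it can be returned at query time
        es.index(index='knowledge_base', id=filename,
                 body={'text': text, 'embedding': embedding.tolist()})

Step 4: Building the Retrieval Model

Use Sentence Transformers to encode the question into a vector:

    question = "What is the capital of France?"
    question_embedding = encoder.encode(question)

Use Elasticsearch to retrieve the most similar documents from the knowledge base:

    results = es.search(
        index='knowledge_base',
        body={
            'size': 3,
            'query': {
                'script_score': {
                    'query': {'match_all': {}},
                    'script': {
                        # Scores must be non-negative, hence the + 1.0
                        'source': "cosineSimilarity(params.query_vector, 'embedding') + 1.0",
                        'params': {'query_vector': question_embedding.tolist()}
                    }
                }
            }
        }
    )

Step 5: Building the Generation Model

Load a pre-trained transformer-based sequence-to-sequence model such as BART or T5. The generator gets its own variable name so that it does not overwrite the encoder loaded in Step 3:

    from transformers import BartForConditionalGeneration, BartTokenizer

    generator = BartForConditionalGeneration.from_pretrained('facebook/bart-large-cnn')
    tokenizer = BartTokenizer.from_pretrained('facebook/bart-large-cnn')

Step 6: Combining Retrieval and Generation

Extract the text of the most relevant document from the retrieved results:

    # Take the text of the highest-scoring hit
    top_document = results['hits']['hits'][0]['_source']['text']

    # Combine the retrieved document with the original question
    context = f"Question: {question}\n\nDocument: {top_document}"

    # Tokenize the combined context, truncating it to the model's input limit
    inputs = tokenizer(context, return_tensors='pt', truncation=True, max_length=1024)

    # Generate the answer using the generation model
    output = generator.generate(**inputs)

    # Decode the generated answer
    answer = tokenizer.decode(output[0], skip_special_tokens=True)
    print(answer)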

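This example demonstrates a simple question answering system built with RAG architecture: it retrieves the most relevant document from a knowledge base of Wikipedia articles and conditions the generation model on it. Note that facebook/bart-large-cnn is fine-tuned for summarization, so its output will read more like a summary of the retrieved document than a direct answer; a sequence-to-sequence model fine-tuned for question answering is a better fit in practice.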
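The example passes only the single best document to the generator. A common variation is to fuse several retrieved passages into one prompt. The sketch below assumes hits shaped like Elasticsearch search hits that carry the passage text under `_source['text']` (an assumption of this sketch); the `build_prompt` helper and its character budget are hypothetical.

```python
def build_prompt(question, hits, max_chars=2000):
    """Concatenate retrieved passages, best first, until the budget is used up."""
    parts = []
    used = 0
    for rank, hit in enumerate(hits, start=1):
        passage = hit["_source"]["text"].strip()
        if used + len(passage) > max_chars:
            break
        parts.append(f"[{rank}] {passage}")
        used += len(passage)
    context = "\n".join(parts)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Hypothetical hits, shaped like entries of results['hits']['hits']
hits = [
    {"_source": {"text": "Paris is the capital of France."}},
    {"_source": {"text": "France is a country in Western Europe."}},
]
prompt = build_prompt("What is the capital of France?", hits)
print(prompt)
```

Numbering the passages makes it possible to ask the generator to cite which passage supports its answer, which helps with later fact-checking.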

5. Challenges and Limitations

5.1 Challenges

- Knowledge Base Quality: The accuracy and effectiveness of RAG architecture heavily depend on the quality and relevance of the knowledge base. Maintaining and updating a large and diverse knowledge base can be challenging.

- Retrieval Efficiency: Retrieving relevant information from large knowledge bases can be computationally expensive, especially for dense retrieval methods. Efficient indexing and search techniques are crucial.

- Fusion Complexity: Combining the retrieved information with the input text in a meaningful and informative way requires careful consideration of fusion techniques and attention mechanisms.

- Model Interpretability: Understanding how RAG architectures arrive at their outputs can be challenging, particularly when dealing with complex interactions between retrieval and generation components.

5.2 Limitations

- Bias and Fairness: RAG architectures inherit biases from the knowledge base and from the data used to train both the retrieval and generation models, potentially leading to unfair or discriminatory outputs.

- Data Privacy: Using knowledge bases containing sensitive information requires careful consideration of data privacy and security issues.

- Limited Creativity: RAG architectures are primarily focused on retrieving and re-formulating existing information, which may limit their ability to generate truly novel and creative text.

5.3 Mitigation Strategies

- Knowledge Base Management: Use data cleaning and enrichment techniques to ensure high-quality and relevant content in the knowledge base.

- Efficient Retrieval Techniques: Explore advanced retrieval methods like sparse retrieval and hybrid approaches to enhance efficiency.

- Attention-Based Fusion: Utilize attention mechanisms to highlight relevant parts of the retrieved information and guide the generation process.

- Explainability Methods: Employ techniques like saliency maps or attention visualization to understand the model's reasoning and decision-making process.
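One simple way to build a hybrid of sparse and dense retrieval is reciprocal rank fusion (RRF), which merges ranked lists using only the rank positions. This is a sketch under the assumption that both retrievers return document IDs best-first; k = 60 is the constant commonly used with RRF, and the two rankings are made up.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Merge several best-first rankings of document IDs into one."""
    fused = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes more for documents it ranks higher
            fused[doc_id] += 1.0 / (k + rank)
    return sorted(fused, key=lambda doc_id: fused[doc_id], reverse=True)

sparse_ranking = ["d2", "d1", "d4"]  # e.g. from keyword search
dense_ranking = ["d1", "d3", "d2"]   # e.g. from vector search
fused_ranking = reciprocal_rank_fusion([sparse_ranking, dense_ranking])
print(fused_ranking)
```

Because RRF ignores raw scores, it sidesteps the problem that keyword scores and cosine similarities live on different scales.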

6. Comparison with Alternatives

6.1 Traditional Generation Models

- Pros: Can generate creative text and handle complex language patterns.

- Cons: Prone to factual inaccuracies, limited context, and scalability issues.

6.2 Knowledge-Based Systems

- Pros: Provide factual accuracy and access to a vast amount of information.

- Cons: Limited flexibility, difficulty in generating complex or creative text.

6.3 When to Use RAG Architecture

RAG architecture is a suitable choice when:

- Factual accuracy is crucial: For tasks requiring reliable and verifiable information.

- Context is important: When the model needs to access and utilize a wide range of information.

- Scalability is a concern: When dealing with large knowledge bases and generating large amounts of text.

- Flexibility is required: To adapt the model to different domains and tasks.

7. Conclusion

RAG architecture presents a promising approach to developing efficient and powerful machine learning models that leverage the strengths of both retrieval and generation techniques. It addresses several limitations of traditional generation models, leading to improved accuracy, context, and scalability. While challenges and limitations exist, ongoing research and technological advancements are continuously improving the capabilities and applicability of RAG architecture.

7.1 Key Takeaways

- RAG architecture combines retrieval and generation techniques to produce accurate and contextualized outputs.

- It excels at tasks that involve large knowledge bases and require factual accuracy.

- Challenges include knowledge base quality, retrieval efficiency, and model interpretability.

7.2 Further Learning

- Research Papers: Explore recent research papers on RAG architecture and its applications.

- Code Repositories: Experiment with open-source implementations of RAG architecture using libraries like Transformers and Faiss.

- Online Courses: Take online courses or tutorials on RAG architecture and its concepts.

7.3 Future of RAG Architecture

The future of RAG architecture looks promising, with ongoing advancements in:

- Knowledge Graph Integration: Enhancing the understanding of relationships and complex concepts.

- Multi-Modal Retrieval: Expanding the context beyond text to include other modalities like images and audio.

- Explainability and Interpretability: Making RAG models more transparent and understandable to users.

8. Call to Action

- Try it out: Implement a simple RAG architecture for a question answering task using the steps outlined in this article.

- Explore further: Delve deeper into the research and applications of RAG architecture to discover its potential in your field.

- Share your insights: Discuss your experiences and insights on RAG architecture with the NLP community.